NVIDIA DGX H100 Deep Learning Console 640GB SXM5

The NVIDIA DGX H100 Deep Learning Console (640GB SXM5) is a state-of-the-art AI supercomputing platform designed for large-scale AI training, generative models, and high-performance computing. Powered by eight NVIDIA H100 SXM5 GPUs with a combined 640GB of HBM3 memory and ultra-fast NVLink and NVSwitch interconnects, it delivers unmatched performance for training large language models, accelerating HPC workloads, and driving AI innovation at scale. Ideal for data centers, research labs, and enterprise AI infrastructure.

Min. Quantity – 5 Nos

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹559,999 ₹800,000

The NVIDIA DGX H100 Deep Learning Console is a flagship AI computing platform built for large-scale deep learning, AI model training, and high-performance computing (HPC). Powered by the groundbreaking Hopper architecture and outfitted with eight NVIDIA H100 SXM5 GPUs, this console delivers transformative performance for enterprises, research labs, and AI development environments.

With an aggregate 640GB of HBM3 memory, fourth-generation NVLink interconnect, and PCIe Gen5 support, the DGX H100 console accelerates everything from generative AI and LLM training to HPC simulations and scientific research.

Key Features:

- Hopper Architecture (H100 SXM5 GPUs)
  - Leverages NVIDIA’s Hopper GPU design to deliver industry-leading compute for large language models (LLMs), deep neural networks, and generative AI workloads.

- 640GB Total High-Bandwidth Memory (8 × 80GB HBM3)
  - Large capacity for training and serving large models; trillion-parameter models require scaling across multiple systems.
  - Exceptional throughput for large-scale simulations and data processing.
  - Reduced bottlenecks with up to 3.35 TB/s memory bandwidth per GPU.

- Advanced Tensor Core Performance
  - 4th-Gen Tensor Cores with FP8, FP16, BF16, and TF32 support.
  - Transformer Engine optimized for LLMs, including GPT, BERT, and custom architectures.

- High-Speed GPU Interconnect
  - NVLink 4.0 with 900 GB/s of bidirectional bandwidth per GPU.
  - Direct GPU-to-GPU memory access through NVSwitch for multi-GPU parallel training.
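To put the memory figures in context, the following is a rough sizing sketch. The bytes-per-parameter values are common rules of thumb and are assumptions, not NVIDIA specifications: 2 bytes per parameter for BF16/FP16 weights at inference, and about 16 bytes per parameter for mixed-precision Adam training (weights, gradients, and optimizer states). Activations and framework overhead are ignored, so real limits will be lower.

```python
# Rough memory sizing for one 8-GPU system.
# The bytes-per-parameter values are rule-of-thumb assumptions.
GPU_COUNT = 8
MEMORY_PER_GPU_GB = 80  # HBM3 per H100 SXM5 (640GB / 8 GPUs)

BYTES_PER_PARAM_INFERENCE = 2   # assumption: BF16/FP16 weights only
BYTES_PER_PARAM_TRAINING = 16   # assumption: mixed-precision Adam state


def total_memory_gb(gpu_count=GPU_COUNT, per_gpu_gb=MEMORY_PER_GPU_GB):
    """Aggregate GPU memory across the system, in GB."""
    return gpu_count * per_gpu_gb


def max_params_billions(memory_gb, bytes_per_param):
    """Upper bound on parameters (in billions) that fit in memory_gb.

    1 GB is treated as 1e9 bytes, so GB / bytes-per-parameter
    gives billions of parameters directly.
    """
    return memory_gb / bytes_per_param


if __name__ == "__main__":
    mem = total_memory_gb()
    print(f"Total GPU memory: {mem} GB")
    print(f"Inference bound: {max_params_billions(mem, BYTES_PER_PARAM_INFERENCE):.0f}B parameters")
    print(f"Training bound:  {max_params_billions(mem, BYTES_PER_PARAM_TRAINING):.0f}B parameters")
```

Under these assumptions, models beyond the training bound would need memory-saving techniques or additional systems.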

Applications:

- Artificial Intelligence & Deep Learning
  - Training and inference of GPT, BERT, LLaMA, and other LLMs.
  - Foundation models, generative AI, and computer vision.
  - Real-time edge-to-cloud deployment of complex AI pipelines.

- High-Performance Computing (HPC)
  - Scientific simulations in physics, genomics, and fluid dynamics.
  - Climate modeling, energy systems analysis, and quantum computing.
  - Accelerated computation for national labs and research institutions.

- Visualization & Digital Content Creation
  - AI-powered content generation, ray tracing, and virtual production.
  - CAD, simulation, and immersive visualization environments.

Why Choose NVIDIA DGX H100 Deep Learning Console?

- Purpose-Built AI Supercomputer
  - Engineered from the ground up to support the world’s most demanding AI and HPC workloads.

- Unmatched Memory and Compute Density
  - 640GB of total GPU memory and petaflop-class AI performance in a compact, scalable system.

- Seamless Multi-GPU Scalability
  - NVLink and NVSwitch connect all eight GPUs over a high-bandwidth fabric for synchronized parallel processing.
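As a rough illustration of why interconnect bandwidth matters for synchronized training, the sketch below estimates the time to all-reduce a gradient buffer across the GPUs using the textbook ring all-reduce cost model. It is an idealized model: the function name is hypothetical, the 450 GB/s per-direction figure is an assumption (half of the 900 GB/s bidirectional rating), and latency, protocol overhead, and the actual algorithm chosen by the communication library are ignored.

```python
def ring_allreduce_seconds(payload_bytes, gpu_count=8, unidirectional_gb_per_s=450.0):
    """Idealized ring all-reduce time for payload_bytes per GPU.

    In a ring all-reduce each GPU sends (and receives) 2 * (N - 1) / N
    times the payload, so the time is that traffic divided by the
    per-direction link bandwidth. The 450 GB/s default is an assumption.
    """
    if gpu_count < 2:
        return 0.0  # nothing to synchronize with a single GPU
    traffic_bytes = 2 * (gpu_count - 1) / gpu_count * payload_bytes
    return traffic_bytes / (unidirectional_gb_per_s * 1e9)


if __name__ == "__main__":
    # Example: gradients of a 7-billion-parameter model stored in BF16 (2 bytes each).
    gradient_bytes = 7e9 * 2
    seconds = ring_allreduce_seconds(gradient_bytes)
    print(f"Estimated all-reduce time: {seconds * 1000:.1f} ms")
```

Measured times on real hardware will differ; the estimate is only meant to show how synchronization cost scales with model size and link bandwidth.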

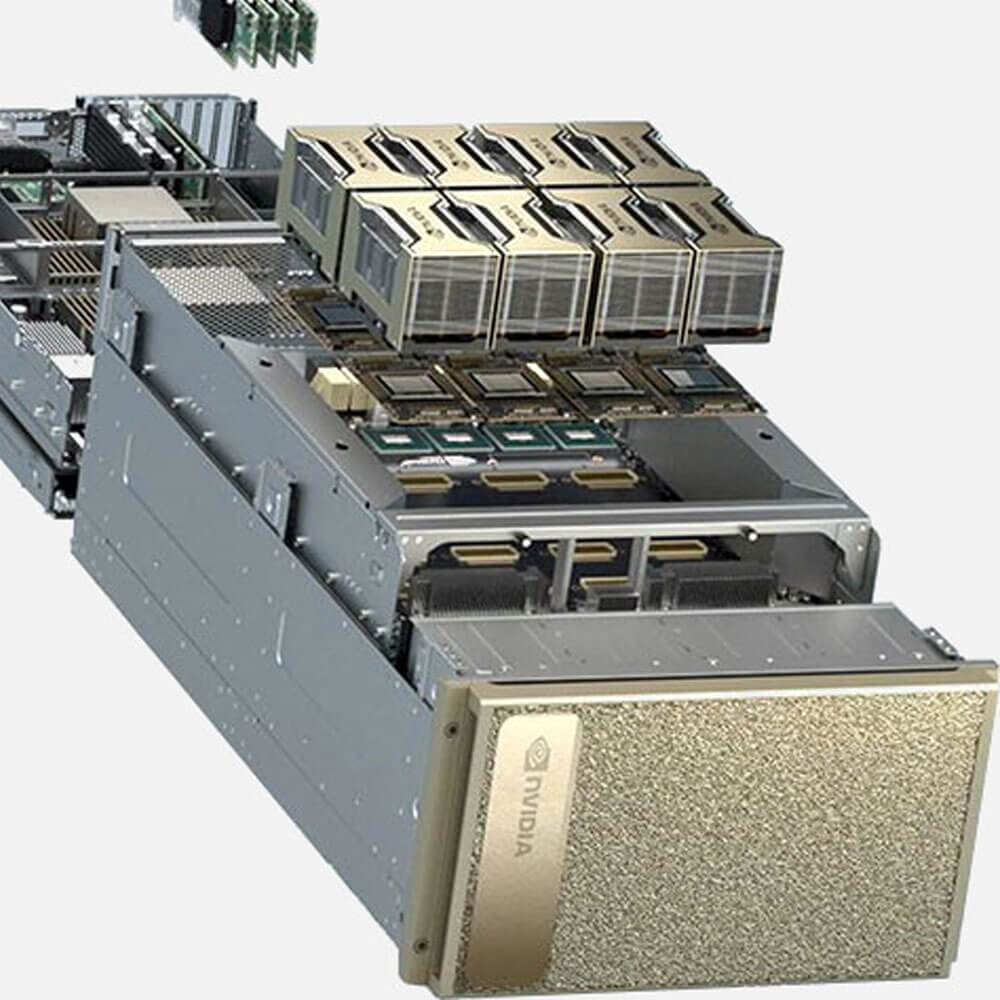

| Specification | Details |
| --- | --- |
| GPU Architecture | NVIDIA Hopper |
| GPU Count | 8× NVIDIA H100 SXM5 GPUs |
| GPU Memory | 640GB HBM3 total (80GB per GPU) |
| Tensor Core Performance | Up to 32 PFLOPS AI (FP8) |
| Interconnect | NVLink 4.0 + NVSwitch (900 GB/s bidirectional bandwidth per GPU) |
| System Interconnect | PCIe Gen5 |
| System Memory | 2TB DDR5 RAM |
| Storage | 30TB NVMe SSD (expandable) |
| Power Consumption | ~10kW |
| Form Factor | Data Center Rackmount (8U) |
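
For capacity planning, a few derived figures can be computed from the listed specifications. The sketch below is illustrative only: the class and method names are hypothetical, the default values are taken from this page, and the energy estimate assumes the system draws its approximate full rated power continuously, which real workloads may not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DgxH100Spec:
    """Headline figures from this listing (hypothetical helper class)."""
    gpu_count: int = 8
    memory_per_gpu_gb: int = 80
    hbm_bandwidth_per_gpu_tb_s: float = 3.35
    ai_pflops: float = 32.0   # system-level "up to" figure
    power_kw: float = 10.0    # approximate, as listed

    def total_gpu_memory_gb(self) -> int:
        return self.gpu_count * self.memory_per_gpu_gb

    def aggregate_hbm_bandwidth_tb_s(self) -> float:
        return self.gpu_count * self.hbm_bandwidth_per_gpu_tb_s

    def ai_pflops_per_gpu(self) -> float:
        return self.ai_pflops / self.gpu_count

    def energy_kwh(self, hours: float) -> float:
        # Assumes continuous draw at the listed power figure.
        return self.power_kw * hours


if __name__ == "__main__":
    spec = DgxH100Spec()
    print(f"Total GPU memory:        {spec.total_gpu_memory_gb()} GB")
    print(f"Aggregate HBM bandwidth: {spec.aggregate_hbm_bandwidth_tb_s():.1f} TB/s")
    print(f"AI compute per GPU:      {spec.ai_pflops_per_gpu():.1f} PFLOPS")
    print(f"Energy over 24 hours:    {spec.energy_kwh(24):.0f} kWh")
```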