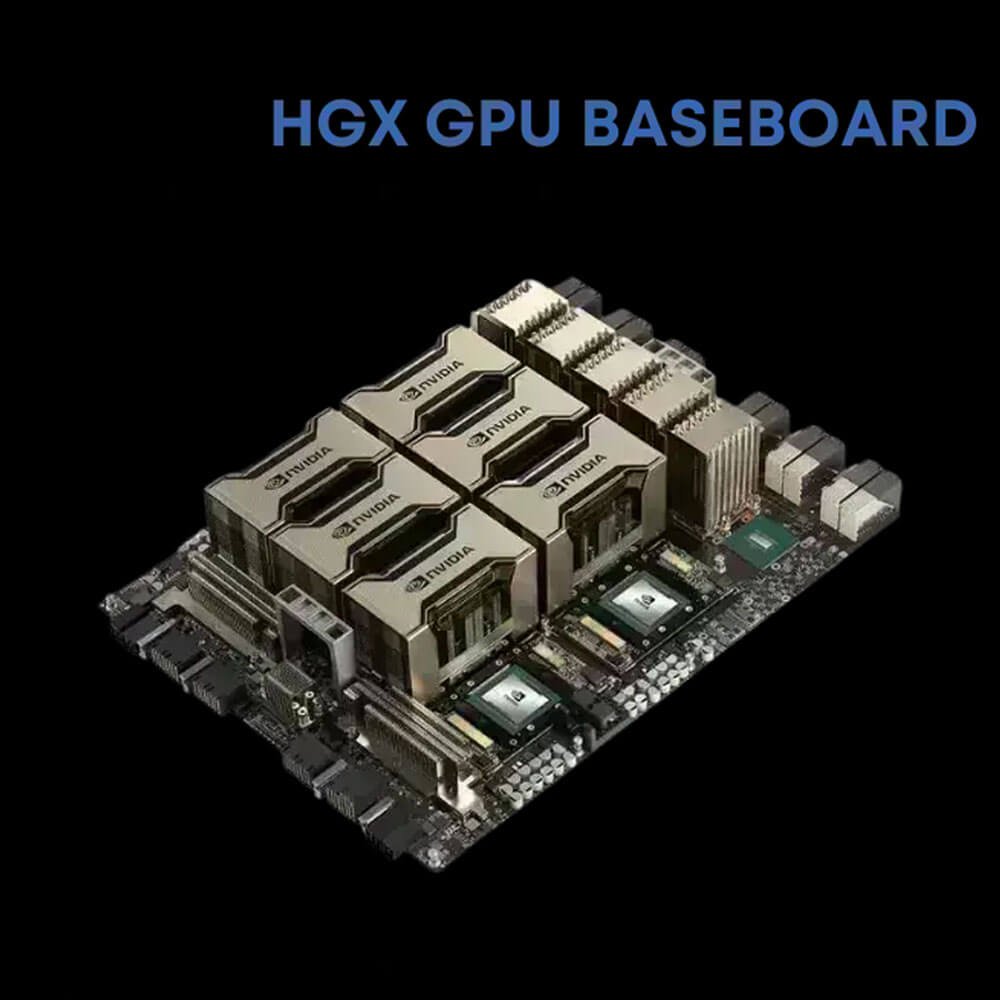

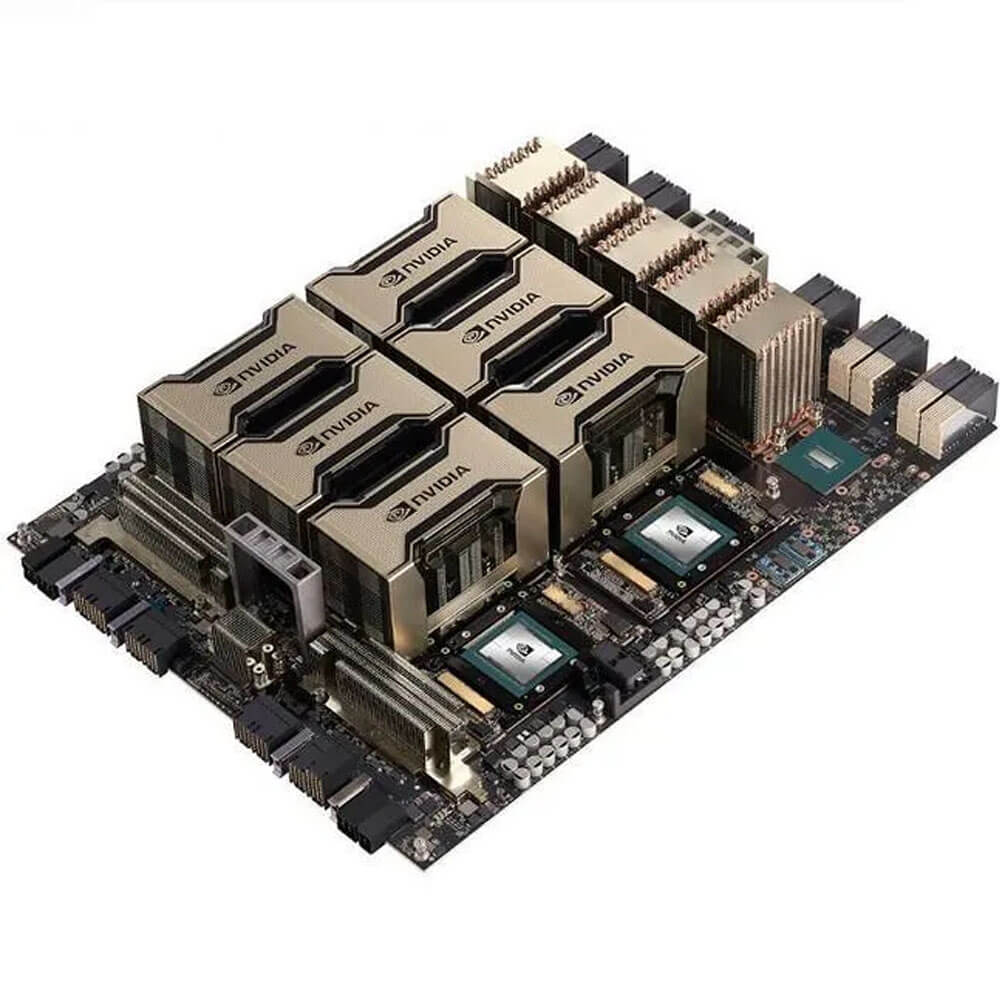

NVIDIA HGX A100 8-GPU Baseboard, 8 × A100 SXM4 40GB HBM2

The NVIDIA HGX A100 8-GPU is a high-performance computing baseboard with eight A100 SXM4 GPUs, each carrying 40GB of HBM2 memory, built to accelerate AI, HPC, and data analytics workloads. Designed for scalability and maximum throughput, it uses NVLink and NVSwitch interconnects for fast GPU-to-GPU communication in demanding enterprise and research environments.

Minimum order quantity: 5 units

Note: The prices below are approximate promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹559,999 (regular price ₹800,000)

The NVIDIA HGX A100 8-GPU Baseboard is a high-performance, enterprise-grade platform designed for the most demanding AI, machine learning, and high-performance computing (HPC) workloads. Featuring eight NVIDIA A100 Tensor Core GPUs (SXM4 form factor) with 40GB of HBM2 memory each, this system delivers a combined 320GB of high-bandwidth memory, optimized for seamless scalability and exceptional throughput.

The HGX A100 baseboard provides the foundation for AI supercomputers, enabling massively parallel processing, large-scale model training, real-time inference, and advanced scientific simulation, with high energy efficiency and compute density.

Key Features:

- Powered by 8× NVIDIA A100 GPUs (SXM4)

  - Built on the NVIDIA Ampere architecture, each A100 delivers high performance for both AI training and inference.

  - Third-generation Tensor Cores support mixed-precision computing (including TF32, BF16, FP16, and INT8) as well as FP64 for HPC.

- Massive Aggregate Memory

  - 320GB total HBM2 (8 GPUs × 40GB each), with high-bandwidth memory access for large AI models and datasets.

  - Multi-Instance GPU (MIG) support partitions each A100 into as many as seven isolated instances for finer-grained workload allocation and resource efficiency.

- High-Speed GPU Interconnect

  - NVLink 3.0 and NVSwitch: up to 600 GB/s of NVLink bandwidth per GPU for GPU-to-GPU communication.

  - Supports large-model training across all 8 GPUs without routing GPU-to-GPU traffic over PCIe.

- Optimized for Modern Workloads

  - AI model training (LLMs, computer vision, NLP).

  - Deep learning inference at scale.

  - Scientific computing and simulation.

  - Virtualization and cloud-native AI services.

Applications:

- Artificial Intelligence & Machine Learning

  - Train and deploy large transformer-based models (e.g., GPT, BERT), computer vision systems, and generative AI workloads.

- High-Performance Computing (HPC)

  - Accelerate physics, fluid dynamics, genomics, molecular modeling, and weather simulations.

- Data Analytics & Visualization

  - Process and analyze massive datasets in real time, and support high-fidelity rendering and digital twin visualization.

Why Choose NVIDIA HGX A100 (8×40GB)?

- Enterprise-Grade Performance

  - Unifies AI, HPC, and analytics workloads, with 8 GPUs working together as one high-bandwidth computing unit.

- Scalable AI Supercomputing

  - Integrated NVSwitch and NVLink connect every GPU to every other GPU at full NVLink bandwidth, so workloads can span the combined memory of all 8 GPUs for maximum parallelism.

- Flexible for Multi-Workload Environments

  - Supports MIG, containerized workloads, and virtualized GPU instances in enterprise or academic clusters.

Specifications:

| Specification | Details |
|---|---|
| GPU Configuration | 8 × NVIDIA A100 Tensor Core GPUs (SXM4) |
| GPU Architecture | NVIDIA Ampere |
| Memory per GPU | 40GB HBM2 |
| Total Memory | 320GB HBM2 |
| Tensor Cores | 3rd generation, with mixed-precision support |
| Multi-Instance GPU (MIG) | Supported |
| GPU Interconnect | NVLink 3.0 + NVSwitch |
| Inter-GPU Bandwidth | Up to 600 GB/s per GPU (NVLink) |
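As a rough sizing aid, the memory and MIG figures in the specifications can be turned into simple upper bounds. The Python sketch below is illustrative only. It assumes 1 GB = 10^9 bytes, counts model weights alone (no activations, gradients, optimizer state, or framework overhead), and assumes weights are sharded evenly across the eight GPUs, since each A100 has its own 40GB rather than sharing one physical pool. The helper names `total_memory_gb`, `max_params_billions`, and `max_mig_instances` are hypothetical and not part of any NVIDIA tool.

```python
"""Back-of-envelope sizing for an 8 x A100 40GB HGX baseboard (illustrative).

Assumptions (not vendor figures):
- 1 GB = 10**9 bytes.
- Only model weights are counted; real workloads need considerably more.
- Weights are sharded evenly across GPUs, since each GPU has its own HBM2.
"""

from typing import Optional

GPUS = 8
MEM_PER_GPU_GB = 40
MIG_INSTANCES_PER_A100 = 7  # A100 supports up to seven MIG instances per GPU

# Bytes needed to store one parameter in common storage precisions.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "int8": 1}


def total_memory_gb(gpus: int = GPUS, per_gpu_gb: int = MEM_PER_GPU_GB) -> int:
    """Aggregate HBM2 capacity across all GPUs on the baseboard."""
    return gpus * per_gpu_gb


def max_params_billions(dtype: str, budget_gb: Optional[float] = None) -> float:
    """Upper bound (in billions) on parameters whose weights fit in budget_gb."""
    if budget_gb is None:
        budget_gb = total_memory_gb()
    # (budget_gb * 1e9 bytes) / bytes_per_param, expressed in units of 1e9 params
    return budget_gb / BYTES_PER_PARAM[dtype]


def max_mig_instances(gpus: int = GPUS) -> int:
    """Maximum number of MIG instances if every GPU is fully partitioned."""
    return gpus * MIG_INSTANCES_PER_A100


if __name__ == "__main__":
    print(total_memory_gb())            # 320
    print(max_params_billions("fp16"))  # 160.0
    print(max_params_billions("fp32"))  # 80.0
    print(max_mig_instances())          # 56
```

These numbers are ceilings, not targets: training in particular stores gradients and optimizer state alongside the weights, so the practical model size for training on this board is well below the weights-only bound.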