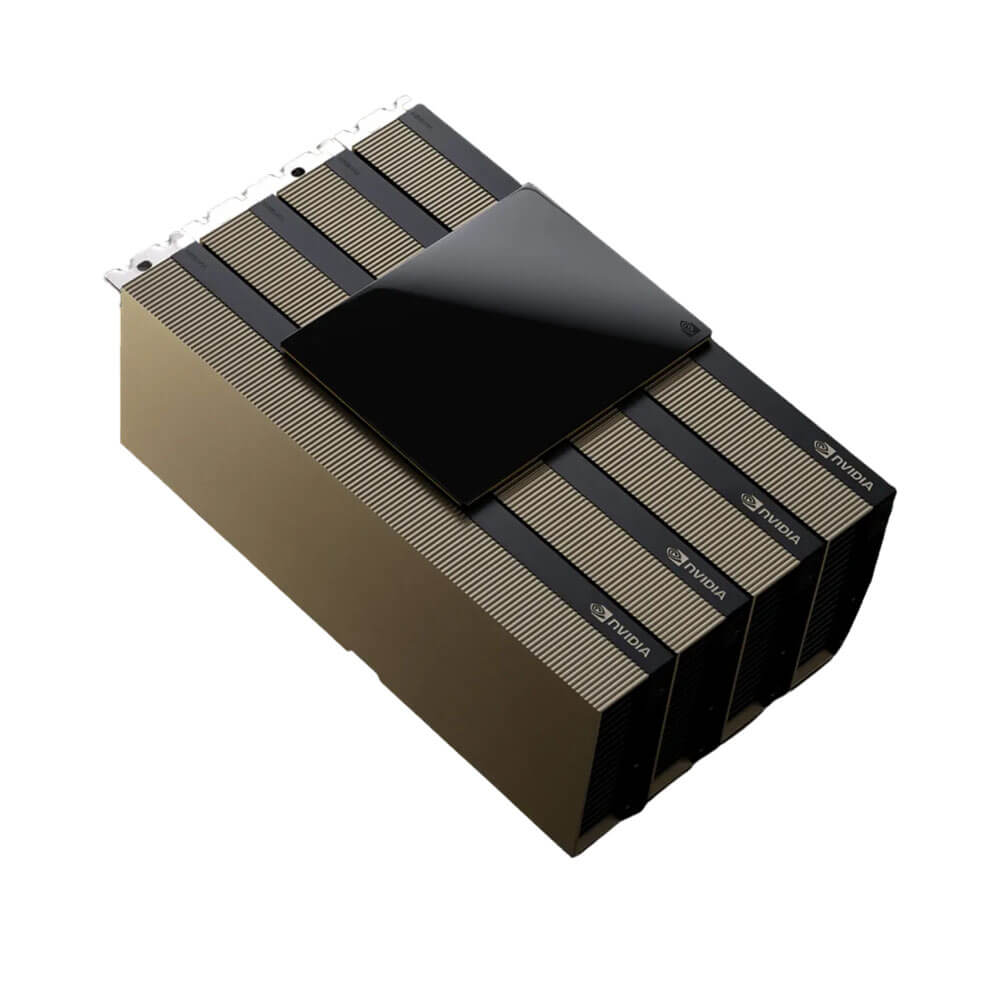

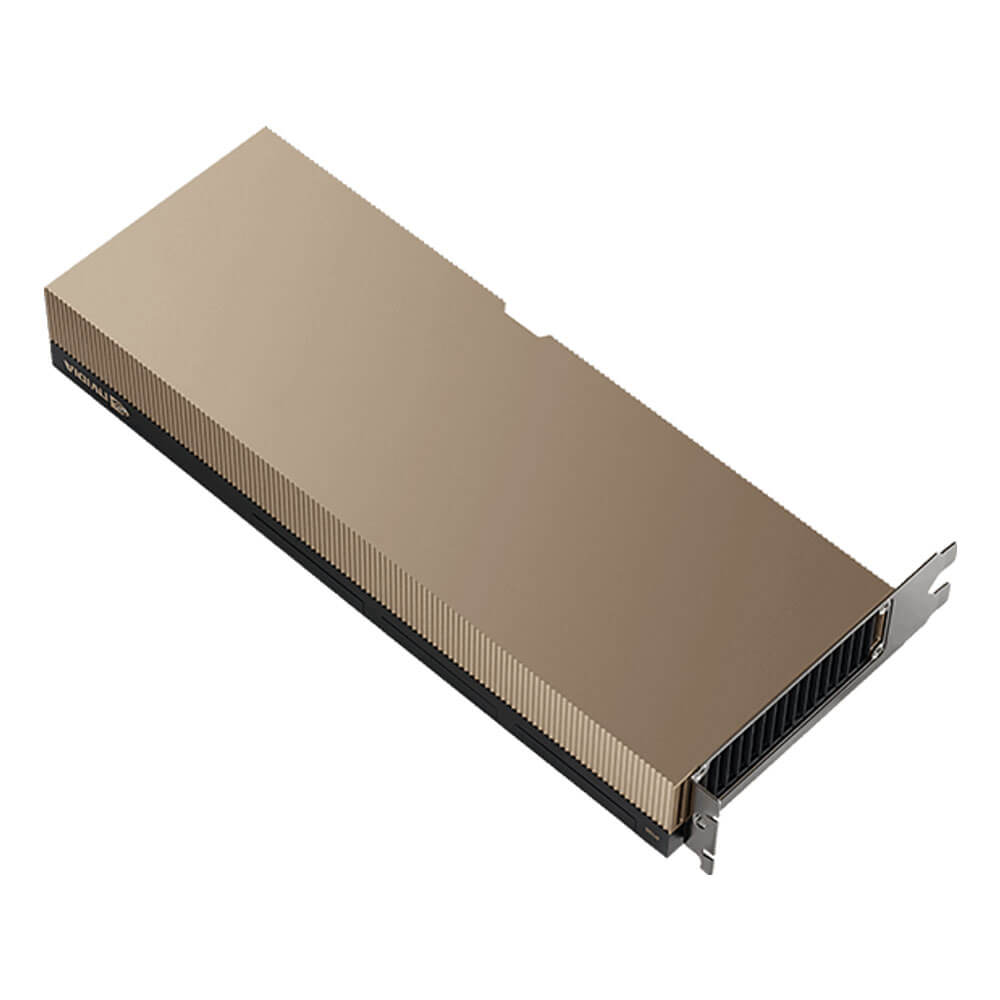

NVIDIA Tesla H200 NVL PCI-E 141GB

The NVIDIA Tesla H200 NVL PCIe card is a compute-optimized GPU built on the NVIDIA Hopper architecture.

- NVIDIA Hopper GPU architecture

- Compute-optimized GPU

- 14,592 NVIDIA® CUDA® Cores

- 456 NVIDIA® Tensor Cores

- 141GB HBM3e memory with ECC

- Up to 4.8 TB/s memory bandwidth

- Max. power consumption: 600W

- Graphics bus: PCI-E 5.0 x16

- Thermal solution: Passive

NVIDIA H200 NVL Graphics Card – Passive – PCIe – 141 GB – 900-21010-0040-000

Min. Quantity – 5 Nos

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹659,999 ₹900,000

The NVIDIA Tesla H200 NVL is built on NVIDIA's Hopper architecture and is optimized for AI and high-performance computing (HPC). It is the first GPU to integrate 141 GB of HBM3e memory, which delivers up to 4.8 terabytes per second (TB/s) of memory bandwidth. This large, fast memory pool benefits generative AI models, large language models (LLMs), and scientific workloads by reducing the memory bottlenecks that restrict performance.
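To give a rough sense of what 4.8 TB/s means for a memory-bound workload, the sketch below estimates the minimum time needed to read a set of model weights once from GPU memory. It is a back-of-the-envelope bound under stated assumptions, not a benchmark: the 70-billion-parameter model stored at 1 byte per parameter is a hypothetical example, the function name `min_stream_time_ms` is made up for illustration, and real throughput will be lower than the peak figure.

```python
def min_stream_time_ms(num_bytes: float, bandwidth_tb_s: float = 4.8) -> float:
    """Lower bound (in ms) on reading num_bytes once at the given peak bandwidth."""
    # Spec-sheet terabytes are decimal: 1 TB/s = 1e12 bytes per second.
    bandwidth_bytes_per_s = bandwidth_tb_s * 1e12
    return num_bytes / bandwidth_bytes_per_s * 1e3


# Hypothetical workload (an assumption, not from the listing):
# 70 billion parameters at 1 byte each, i.e. 70 GB of weights.
weight_bytes = 70e9 * 1

step_ms = min_stream_time_ms(weight_bytes)

# If every pass must read all weights once, this caps the passes per second.
passes_per_s_ceiling = 1e3 / step_ms

print(f"{step_ms:.1f} ms per full read, at most {passes_per_s_ceiling:.0f} reads/s")
```

The same function can be called with a different `bandwidth_tb_s` to compare against another card's published bandwidth.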

With nearly double the memory capacity of its predecessor, the H100, and approximately 1.4× the memory bandwidth, the H200 NVL offers a significant advancement in data throughput and scaling.
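The comparison with the H100 can be checked with simple arithmetic. In the sketch below, the H200 NVL figures come from this listing, while the H100 figures (80 GB and 3.35 TB/s, as published for the H100 SXM variant) are an assumption, since this page does not state them. Other H100 variants would give different ratios.

```python
# H200 NVL figures as stated in this listing.
h200_nvl = {"memory_gb": 141, "bandwidth_tb_s": 4.8}

# Assumption: H100 SXM figures, which are not given on this page.
h100_sxm = {"memory_gb": 80, "bandwidth_tb_s": 3.35}

capacity_ratio = h200_nvl["memory_gb"] / h100_sxm["memory_gb"]
bandwidth_ratio = h200_nvl["bandwidth_tb_s"] / h100_sxm["bandwidth_tb_s"]

print(f"Memory capacity:  {capacity_ratio:.2f}x")
print(f"Memory bandwidth: {bandwidth_ratio:.2f}x")
```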


| Specification | Details |
| --- | --- |
| Model | NVIDIA Tesla H200 NVL PCI-E |
| GPU Architecture | NVIDIA Hopper |
| GPU Memory | 141 GB HBM3e |
| Memory Type | HBM3e (High Bandwidth Memory) |
| Memory Bandwidth | Up to 4.8 TB/s |
| Interface | PCI Express Gen5 x16 |
| NVLink Support | Yes |
| CUDA Cores | 14,592 (per GPU) |
| Tensor Cores | 456 (per GPU) |
| Form Factor | Dual-Slot |
| Thermal Solution | Passive Cooling |
| Power Consumption | Up to 600W (per GPU) |
| Target Applications | AI Training, HPC, Deep Learning, Data Analytics |