The HP ZGX Nano AI Station 128GB is a compact AI workstation powered by the NVIDIA GB10 Grace Blackwell Superchip. It delivers ~1,000 TOPS of FP4 AI performance and 128 GB of unified memory. It can run models of up to ~200 billion parameters, or ~400 billion with two linked units, which makes it well suited to developers, researchers, and data scientists.

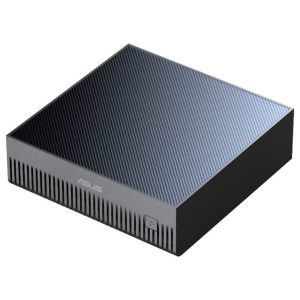

HP ZGX Nano AI Station 128GB

Minimum order quantity: 5 units

Note: The prices below are approximate and promotional. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹659,999 ₹900,000

| Product Name | HP ZGX Nano AI Station |
|---|---|
| Memory | 128 GB unified memory |
| Processor / SoC | NVIDIA GB10 Grace Blackwell Superchip: 20-core Arm CPU + Blackwell GPU (FP4 AI acceleration) |
| AI Performance | ~1,000 TOPS (FP4 precision) for AI inference |
| Model Support | AI models of up to ~200 billion parameters |
| Storage Options | 1 TB or 4 TB NVMe M.2 SSD (self-encrypting, ~35 GB reserved for recovery) |
| Software | HP AI Studio, HP AI Creation Center (local AI workflow tools) |
| Connectivity | NVIDIA ConnectX high-speed networking |
| Scalability | Two units can be linked via NVIDIA ConnectX to run models of up to ~400 billion parameters |
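As a rough sanity check on the ~200B and ~400B parameter figures, the sketch below estimates how much memory a model's weights need at 4 bits (0.5 bytes) per parameter. This is our own back-of-the-envelope illustration, not an HP or NVIDIA sizing tool. It assumes decimal gigabytes, ignores KV cache, activations and system overhead, and treats two linked units as simply splitting the model across their combined 256 GB. The helper names are hypothetical.

```python
# Back-of-the-envelope, weight-only memory estimate (hypothetical helpers).
# Assumptions: FP4 = 0.5 bytes/parameter, 1 GB = 10**9 bytes,
# and KV cache, activations and OS overhead are ignored.

BYTES_PER_PARAM = {"fp16": 2.0, "fp8": 1.0, "fp4": 0.5}


def weight_memory_gb(num_params: float, precision: str = "fp4") -> float:
    """Approximate size of the model weights in gigabytes."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params: float, memory_gb: float, precision: str = "fp4") -> bool:
    """True if the weights alone fit within the given memory budget."""
    return weight_memory_gb(num_params, precision) <= memory_gb


if __name__ == "__main__":
    single_unit_gb = 128       # one ZGX Nano
    dual_unit_gb = 2 * 128     # two linked units, model split across both (assumed)
    for params, budget in [(200e9, single_unit_gb), (400e9, dual_unit_gb)]:
        need = weight_memory_gb(params)
        print(f"{params / 1e9:.0f}B params @ FP4 ~ {need:.0f} GB of weights "
              f"vs {budget} GB -> fits: {fits(params, budget)}")
```

The same 200B-parameter model at FP16 would need about 400 GB for its weights alone, which is why the quoted model sizes depend on 4-bit quantization. Real workloads also need headroom for the KV cache, so practical limits are somewhat lower than these weight-only figures.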