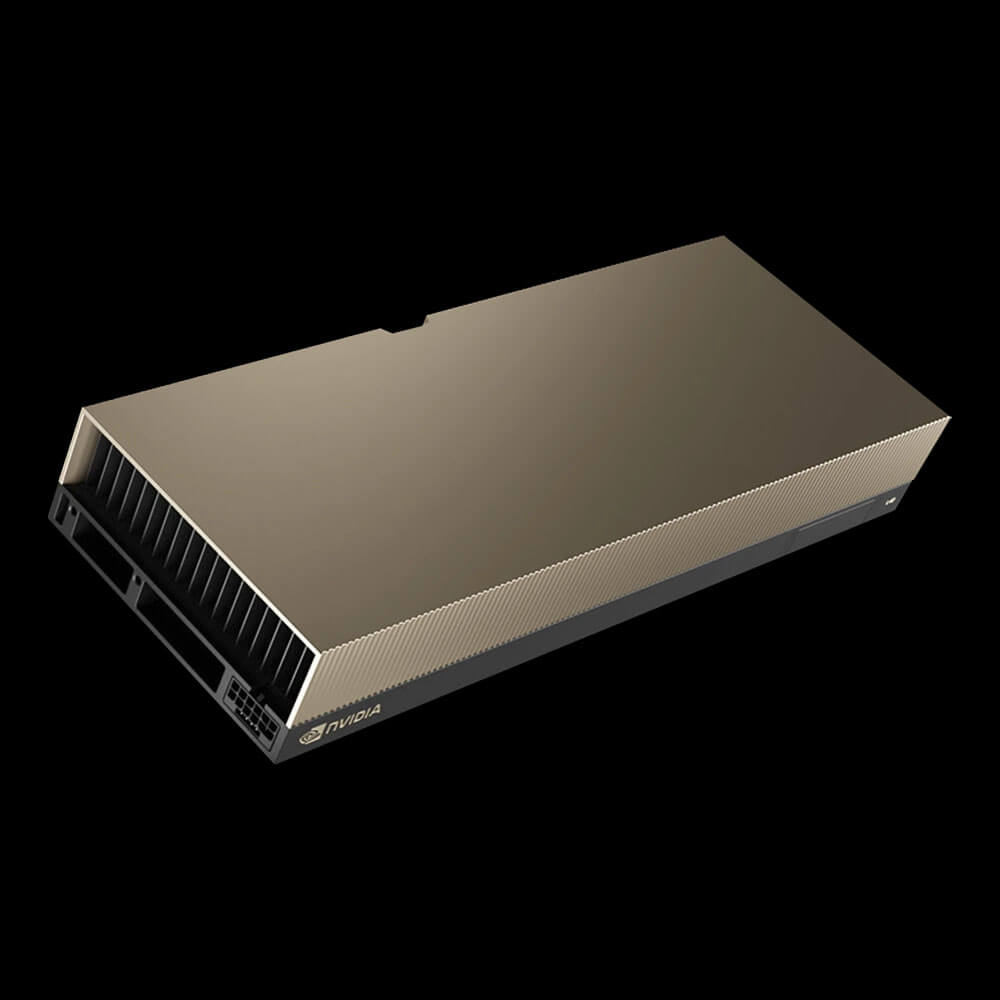

NVIDIA A10 PCIe Graphics Card 24 GB

The NVIDIA A10 PCIe Graphics Card with 24GB memory delivers powerful performance for AI, graphics, and virtualized workloads. Built on the NVIDIA Ampere architecture, it features advanced Tensor and RT Cores for AI acceleration and real-time ray tracing. Ideal for data centers and enterprise applications, the A10 PCIe ensures exceptional scalability, efficiency, and reliability for demanding professional tasks.

Min. Quantity – 5 Nos

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹314,000 ₹498,000

The A10 PCIe is a professional graphics card from NVIDIA, launched on April 12th, 2021. It is built on the 8 nm process and based on the GA102 graphics processor in its GA102-890-A1 variant, and it supports DirectX 12 Ultimate. The GA102 is a large chip with a die area of 628 mm² and 28.3 billion transistors. Unlike the fully unlocked GeForce RTX 3090 Ti, which uses the same GPU with all 10,752 shaders enabled, the A10 PCIe has some shading units disabled to reach the product’s target shader count. It features 9,216 shading units, 288 texture mapping units, and 96 ROPs, along with 288 tensor cores that speed up machine learning applications and 72 ray-tracing acceleration cores. NVIDIA has paired the A10 PCIe with 24 GB of GDDR6 memory on a 384-bit memory interface. The GPU runs at a base frequency of 885 MHz and boosts up to 1695 MHz; the memory runs at 1563 MHz (12.5 Gbps effective).
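
The headline throughput figures follow directly from the numbers quoted above. The short Python sketch below works through the arithmetic; the function names are illustrative, and the FP32 figure assumes each shading unit completes one fused multiply-add (two floating-point operations) per clock at the boost frequency, which gives a theoretical peak rather than a measured result.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * data_rate_gbps


def peak_fp32_tflops(shading_units: int, boost_clock_mhz: float) -> float:
    """Theoretical FP32 peak, assuming one fused multiply-add (2 FLOPs) per unit per clock."""
    return shading_units * 2 * boost_clock_mhz * 1e6 / 1e12


# Values taken from the description above: 384-bit bus, 12.5 Gbps effective,
# 9,216 shading units, 1695 MHz boost clock.
bandwidth = memory_bandwidth_gb_s(384, 12.5)
fp32 = peak_fp32_tflops(9216, 1695)

print(f"Memory bandwidth: {bandwidth:.0f} GB/s")  # prints "Memory bandwidth: 600 GB/s"
print(f"Peak FP32: {fp32:.1f} TFLOPS")            # prints "Peak FP32: 31.2 TFLOPS"
```

Sustained throughput in real applications is normally lower than these theoretical peaks.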

The NVIDIA A10 PCIe is a single-slot card that draws power from one 8-pin power connector, with power draw rated at 150 W maximum. It has no display connectivity, as it is not designed to have monitors connected to it. The card connects to the rest of the system over a PCI-Express 4.0 x16 interface. It measures 267 mm in length and 111 mm in width, and uses a single-slot cooling solution.
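
As a rough guide to what the PCI-Express 4.0 x16 link can carry, the sketch below computes its theoretical one-way bandwidth from the PCIe 4.0 signalling rate (16 GT/s per lane) and its 128b/130b line encoding. The function name is illustrative, and the transfer time treats 24 GB as 24 × 10⁹ bytes and ignores protocol overhead, so real transfers will take longer.

```python
def pcie_bandwidth_gb_s(transfer_rate_gt_s: float, lanes: int,
                        encoding_efficiency: float) -> float:
    """Theoretical one-way link bandwidth in GB/s (before protocol overhead)."""
    return transfer_rate_gt_s * lanes * encoding_efficiency / 8


# PCIe 4.0: 16 GT/s per lane, 128b/130b encoding, 16 lanes.
pcie4_x16 = pcie_bandwidth_gb_s(16.0, 16, 128 / 130)
print(f"PCIe 4.0 x16: {pcie4_x16:.1f} GB/s per direction")  # prints "PCIe 4.0 x16: 31.5 GB/s per direction"

# Best-case time to copy enough data to fill the card's 24 GB of memory.
fill_seconds = 24 / pcie4_x16
print(f"Time to transfer 24 GB: {fill_seconds:.2f} s")      # prints "Time to transfer 24 GB: 0.76 s"
```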

Key Features:

- Ampere Architecture

- CUDA Cores

- The A10’s Ampere-based SM (Streaming Multiprocessor) design packs thousands of CUDA Cores, offering impressive parallel compute performance for data-intensive tasks such as AI workloads, complex 3D rendering, and HPC simulations.

- Second-Generation RT Cores

- Delivers up to 2× the throughput of previous-generation ray-tracing cores, enabling near-real-time lighting, shadows, and reflections in advanced visualization or design workflows.

- Third-Generation Tensor Cores

- Accelerates AI and deep learning operations (FP16, BF16, TF32, INT8), boosting both training and inference speeds, while preserving high model accuracy.

- 24GB GDDR6 Memory

- High-Capacity VRAM

- With 24GB of GDDR6, the A10 accommodates large AI models, multi-4K video streams, or extensive HPC datasets, reducing out-of-memory issues.

- ECC Support (Varies by Model)

- Some server/system variants may feature ECC memory to ensure data integrity in mission-critical applications.

- Robust AI and HPC Acceleration

- Mixed-Precision Computing

- Supports FP16, BF16, INT8, and TF32 for AI tasks, enabling faster deep learning training and inference without compromising accuracy.

- NVIDIA AI Software Ecosystem

- Integrates seamlessly with CUDA-X AI libraries, frameworks (e.g., TensorFlow, PyTorch), and HPC containers from NVIDIA NGC, simplifying development and deployment.

- High-Performance Computing (HPC) Capabilities

- FP64 for Scientific Applications

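- FP64 is supported, but GA102-based cards such as the A10 run double precision at a small fraction of their FP32 rate. Workloads that depend heavily on FP64, such as some weather modeling and molecular dynamics codes, should be benchmarked on the A10 before deployment.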

- Multi-GPU Scalability

- Some A-series GPUs support NVLink for high-bandwidth GPU-to-GPU communication. The A10 PCIe connects to other GPUs over its PCIe 4.0 interface, so check NVIDIA’s official documentation before planning NVLink-based scaling.

- Professional Visualization

- Real-Time Ray Tracing

- Second-gen RT Cores accelerate real-time photorealistic rendering for design, architectural visualization, and advanced media production.

- Headless Operation

- The A10 PCIe has no display outputs and runs headless in a data center environment; high-resolution displays are delivered to users through remote or virtual desktop sessions.

- Energy Efficiency and Data Center Integration

- PCIe 4.0 Interface

- Provides high-bandwidth communication with CPUs and other system components, reducing data transfer bottlenecks in HPC or AI tasks.

- Moderate TDP

- Operates within a 150 W maximum power envelope, balancing performance and efficiency for continuous workloads.

- Enterprise-Grade Drivers and Management

- Long Lifecycle & Security

- NVIDIA’s enterprise drivers receive security patches, reliability updates, and extended support essential for mission-critical operations.

- NVIDIA NGC

- Access HPC, AI, and data analytics containers that simplify environment setup, ensuring consistent performance across servers or cloud deployments.
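
To make the link between numeric precision and the 24GB memory capacity concrete, the sketch below estimates how much memory a model's weights occupy in each format listed above. It is a simplified illustration under stated assumptions: the 7-billion-parameter model is hypothetical, the card's memory is taken as 24 GiB, and only the weights are counted (activations, optimizer state, and framework overhead need additional memory).

```python
# Bytes used to store one value in each numeric format.
# TF32 is a Tensor Core compute mode; tensors are still stored as 4-byte FP32.
BYTES_PER_VALUE = {"FP32/TF32": 4, "FP16": 2, "BF16": 2, "INT8": 1}

A10_MEMORY_BYTES = 24 * 2**30  # 24 GB of GDDR6, taken here as 24 GiB (assumption)


def weight_bytes(num_parameters: int, precision: str) -> int:
    """Memory needed to hold the model weights alone at the given precision."""
    return num_parameters * BYTES_PER_VALUE[precision]


def fits_on_a10(num_parameters: int, precision: str) -> bool:
    """True if the weights alone fit in the card's memory (ignores activations)."""
    return weight_bytes(num_parameters, precision) <= A10_MEMORY_BYTES


hypothetical_model = 7_000_000_000  # illustrative 7-billion-parameter model
for precision in BYTES_PER_VALUE:
    gib = weight_bytes(hypothetical_model, precision) / 2**30
    fits = fits_on_a10(hypothetical_model, precision)
    print(f"{precision:>9}: {gib:5.1f} GiB, weights fit: {fits}")
```

In this example the weights exceed the card's memory at 4 bytes per value but fit at FP16, BF16, or INT8, which is one practical reason reduced precision matters on a 24GB card.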

Applications:

- Artificial Intelligence and Data Science

- Deep Learning Training & Inference: Speeds up large-scale neural network tasks, from language models to image recognition, leveraging Tensor Cores for mixed-precision acceleration.

- Data Analytics: GPU-accelerated frameworks process vast datasets interactively, unlocking real-time or near-real-time insights.

- High-Performance Computing (HPC)

- Scientific Simulations: HPC workloads in areas like climate modeling, computational fluid dynamics, and structural analysis benefit from the A10’s parallel architecture and robust memory.

- Research & Academic Labs: Delivers faster iteration for code, shorter simulation runs, and advanced HPC libraries for complex computations.

- Professional Visualization and Rendering

- Ray-Traced Rendering: Real-time or near-real-time rendering speeds up design reviews, product prototyping, and architectural walkthroughs with second-gen RT Cores.

- Immersive VR: Powers high-fidelity VR experiences in industrial design, simulation training, or location-based entertainment setups.

- Virtual Desktop Infrastructure (VDI)

- vGPU & Multi-User: Partition the A10’s GPU resources among multiple users, enabling GPU-accelerated remote desktops or design apps in a data center environment.

- Cloud-Hosted Workstations: Delivers professional 3D or engineering software to distributed teams via virtualization platforms.

- Media & Entertainment

- Video Editing & Transcoding: Handles 4K/8K streams, complex color grading, and GPU-accelerated effects in real time, improving creative pipeline efficiency.

- Visual Effects: Accelerates production of advanced VFX, 3D animations, and final compositing with AI-powered denoising or upscaling tools.
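
For the VDI use case above, a first-pass sizing estimate is simple division: how many equal-sized frame-buffer slices fit in 24 GB, and how many cards a given user count then needs. The sketch below assumes every user gets the same slice; the slice sizes and the 30-user figure are hypothetical, and the profiles actually available depend on the NVIDIA vGPU software release and license in use.

```python
import math


def users_per_card(card_memory_gb: int, profile_gb: int) -> int:
    """Number of equal-sized vGPU frame-buffer slices that fit on one card."""
    return card_memory_gb // profile_gb


def cards_needed(total_users: int, card_memory_gb: int, profile_gb: int) -> int:
    """Cards required to give every user one slice of the given size."""
    return math.ceil(total_users / users_per_card(card_memory_gb, profile_gb))


A10_MEMORY_GB = 24

# Hypothetical per-user frame-buffer sizes, for illustration only.
for profile_gb in (2, 4, 8):
    per_card = users_per_card(A10_MEMORY_GB, profile_gb)
    cards = cards_needed(30, A10_MEMORY_GB, profile_gb)
    print(f"{profile_gb} GB per user: {per_card} users per card, "
          f"{cards} cards for 30 users")
```

Frame-buffer capacity is only one constraint; GPU compute and encoder load per user should be checked against the actual applications.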

Why Choose the NVIDIA A10 PCIe Graphics Card 24 GB?

- Balanced Performance and Scalability

- Combines robust HPC/AI throughput and large memory with moderate TDP, supporting versatile enterprise workloads in standard server or workstation environments.

- Advanced Ray Tracing & AI Features

- Second-gen RT and third-gen Tensor Cores deliver leading-edge rendering and deep learning performance, bridging HPC, AI, and professional graphics in a single GPU.

- Ample 24GB Memory

- Facilitates large-scale scientific simulations, heavy AI models, multi-4K content streams, or professional 3D pipelines with fewer memory constraints.

- Flexible Deployment

- A standard PCIe form factor ensures wide compatibility with existing data center racks, HPC nodes, or workstation chassis, simplifying upgrades and scaling.

- Enterprise Reliability & Lifecycle

- Certified drivers, extended support, and integration with NVIDIA’s HPC/AI ecosystem ensure stable, predictable performance for mission-critical tasks and long operational lifespans.

| Specification | Value |
| --- | --- |
| Product Name | NVIDIA A10 PCIe |
| Manufacturer | NVIDIA |
| Memory | 24 GB GDDR6 |
| Memory Interface | 384-bit |
| Memory Bandwidth | 600 GB/s |
| Base Clock | 885 MHz |
| Boost Clock | 1,695 MHz |
| TDP (Thermal Design Power) | 150 W |
| PCIe Interface | PCIe Gen 4.0 x16 |
| Power Connectors | 1x 8-pin |
| Max Digital Resolution | Not applicable (no display outputs) |
| Power Requirements | 1x 8-pin (150 W maximum power draw) |