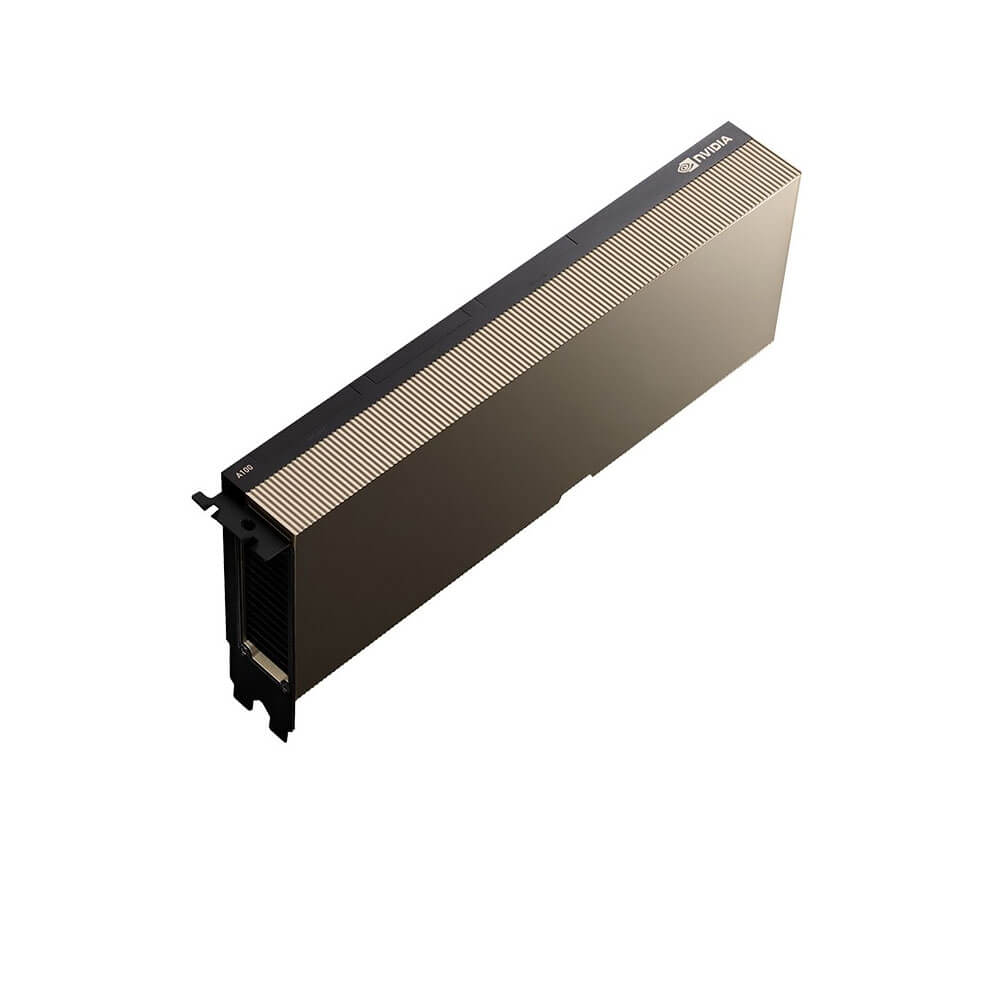

NVIDIA A100 PCIe Graphics Card 80 GB

The NVIDIA A100 PCIe Graphics Card with 80GB memory is a powerhouse for AI, HPC, and data analytics. Built on the NVIDIA Ampere architecture, it features advanced Tensor Cores and multi-instance GPU (MIG) technology, delivering exceptional performance for AI training, inference, and scientific simulations. Ideal for data centers, the A100 PCIe offers unmatched scalability, energy efficiency, and computational power.

Min. Quantity – 5 Nos

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹2,050,000 ₹2,800,000

The A100 PCIe 80 GB is a professional graphics card from NVIDIA, launched on June 28, 2021. It is built on a 7 nm process and based on the GA100 graphics processor. The card does not support DirectX 11 or DirectX 12, so it is not suited to gaming. GA100 is a large chip, with a die area of 826 mm² and 54.2 billion transistors. It features 6,912 shading units (CUDA cores), 432 texture mapping units, and 160 ROPs, plus 432 Tensor Cores that speed up machine learning applications. NVIDIA pairs the GPU with 80 GB of HBM2e memory on a 5120-bit memory interface. The GPU runs at a base frequency of 1065 MHz and boosts up to 1410 MHz; the memory runs at 1512 MHz.

The NVIDIA A100 PCIe 80 GB is a dual-slot card that draws power from a single 8-pin EPS power connector, with power draw rated at 300 W maximum. It has no display connectivity, as it is not designed to have monitors connected to it. The card connects to the rest of the system through a PCI-Express 4.0 x16 interface and measures 267 mm in length and 111 mm in width.
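The headline throughput figures follow from the numbers quoted above. The sketch below is a back-of-the-envelope calculation, not a benchmark. It assumes the HBM2e interface moves two bits per pin per memory clock (double data rate) and that each shading unit completes one fused multiply-add (two floating-point operations) per clock at boost frequency. The function names are illustrative.

```python
# Figures quoted in the description above.
BUS_WIDTH_BITS = 5120
MEMORY_CLOCK_MHZ = 1512
SHADING_UNITS = 6912
BOOST_CLOCK_MHZ = 1410
BOARD_POWER_W = 300


def memory_bandwidth_gb_s(bus_bits: int, clock_mhz: float) -> float:
    """Peak bandwidth in GB/s, assuming two transfers per memory clock."""
    bytes_per_transfer = bus_bits / 8
    transfers_per_second = clock_mhz * 1e6 * 2
    return bytes_per_transfer * transfers_per_second / 1e9


def peak_fp32_tflops(units: int, clock_mhz: float) -> float:
    """Peak FP32 rate in TFLOPS, assuming one FMA (2 FLOPs) per unit per clock."""
    return units * 2 * clock_mhz * 1e6 / 1e12


bandwidth = memory_bandwidth_gb_s(BUS_WIDTH_BITS, MEMORY_CLOCK_MHZ)
fp32 = peak_fp32_tflops(SHADING_UNITS, BOOST_CLOCK_MHZ)

print(f"Memory bandwidth: {bandwidth:.0f} GB/s")
print(f"Peak FP32:        {fp32:.1f} TFLOPS")
print(f"FP32 per watt:    {fp32 * 1000 / BOARD_POWER_W:.0f} GFLOPS/W")
```

Under these assumptions the card peaks at roughly 1,935 GB/s of memory bandwidth and 19.5 TFLOPS of FP32 compute, or about 65 GFLOPS per watt at the 300 W rating. Sustained application throughput will be lower.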

Key Features:

- Ampere Architecture
  - High-Core Parallelism: The GA100 GPU provides 6,912 CUDA cores, enabling the high degree of parallelism required for HPC, AI training, and data analytics tasks.
  - Compute-Focused Design: GA100 omits the RT Cores and display engines found on NVIDIA's visualization GPUs. Ray tracing, where needed, runs as a compute workload on the CUDA cores.
  - Third-Generation Tensor Cores: Optimized for AI and machine learning, these Tensor Cores accelerate mixed-precision computation (FP16, BF16, TF32, INT8) for faster deep learning training and inference.

- 80GB HBM2e Memory
  - High-Bandwidth Memory: 80 GB of HBM2e on a 5120-bit bus running at 1512 MHz gives a peak memory bandwidth of about 1.9 TB/s, which is critical for large neural network training sets and data-heavy HPC workloads.
  - Scalable Capacity: Keeps massive datasets, multi-4K media streams, or extensive 3D simulation data resident in GPU memory, minimizing transfer bottlenecks.

- AI & HPC Acceleration
  - Mixed-Precision Support: Tensor Cores run FP16, BF16, TF32, and INT8 math, letting users trade numeric precision for compute speed while preserving model accuracy.
  - NVIDIA AI Software Ecosystem: Integrates with CUDA, cuDNN, TensorRT, and NVIDIA's HPC libraries, simplifying model development, training, and deployment.

- Enterprise-Class Reliability & Management
  - 24/7 Continuous Operation: Engineered for sustained heavy loads, ensuring stable performance for critical HPC simulations and AI training cycles.
  - Stable Driver Releases: Long-lifecycle enterprise drivers, security patches, and validated software stacks minimize downtime in production.

- Scalable Multi-GPU Configurations
  - NVLink Interconnect: A100 PCIe cards can be paired using NVLink bridges, and the alternative SXM form factor links larger groups of GPUs, giving fast GPU-to-GPU communication that helps HPC and AI workloads scale across cards.
  - Flexibility in Data Centers: The standard PCIe Gen 4.0 x16 interface ensures wide compatibility with existing server infrastructure while providing fast CPU–GPU bandwidth.

- Energy Efficiency & Advanced Cooling
  - Optimized Thermal Design: The card is passively cooled and relies on server chassis airflow, enabling stable, high-throughput operation under prolonged load.
  - Performance-per-Watt: Ampere architecture improvements deliver more performance at the same or lower power levels than previous GPU generations, reducing TCO for large-scale installations.
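The reduced-precision formats named under Key Features differ in how many exponent and mantissa bits they keep, which is what drives the speed versus accuracy trade-off. The sketch below imitates them on the CPU using only Python's standard library; it does not touch the GPU. It assumes the usual bit layouts (FP16: 5 exponent and 10 mantissa bits, BF16: 8 and 7, TF32: 8 and 10) and, as a simplification, truncates FP32 values for BF16 and TF32 where real hardware would round. All helper names are illustrative.

```python
import struct


def fp32_bits(x: float) -> int:
    """Return the IEEE 754 single-precision bit pattern of x."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def from_fp32_bits(bits: int) -> float:
    """Rebuild a float from a single-precision bit pattern."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def keep_mantissa_bits(x: float, keep: int) -> float:
    """Truncate FP32's 23-bit mantissa to `keep` bits (simplified rounding)."""
    drop = 23 - keep
    mask = 0xFFFFFFFF & ~((1 << drop) - 1)
    return from_fp32_bits(fp32_bits(x) & mask)


def as_fp16(x: float) -> float:
    """Round-trip through IEEE half precision (5 exponent, 10 mantissa bits)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def as_bf16(x: float) -> float:
    """BF16 keeps FP32's 8 exponent bits but only 7 mantissa bits."""
    return keep_mantissa_bits(x, 7)


def as_tf32(x: float) -> float:
    """TF32 keeps FP32's 8 exponent bits and 10 mantissa bits."""
    return keep_mantissa_bits(x, 10)


value = 1 / 3
for name, convert in [("FP16", as_fp16), ("BF16", as_bf16), ("TF32", as_tf32)]:
    approx = convert(value)
    print(f"{name}: {approx:.10f}  (abs error {abs(approx - value):.2e})")

# FP16's 5-bit exponent tops out at 65504; BF16 and TF32 share FP32's range.
try:
    as_fp16(70000.0)
except OverflowError:
    print("FP16 cannot represent 70000.0; BF16 gives", as_bf16(70000.0))
```

BF16 gives up precision to keep FP32's range, FP16 does the opposite, and TF32 combines FP32's range with FP16's precision, which is why frameworks can often apply it to FP32 models without code changes.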

Applications:

- High-Performance Computing (HPC)
  - Scientific Simulations: Speeds up simulations in climate science, molecular dynamics, astrophysics, or CFD, reducing iteration times and enabling deeper exploration.
  - Research Labs: HPC environments at universities, government, or private labs rely on A100 GPUs for cutting-edge scientific work.

- Artificial Intelligence & Machine Learning
  - Deep Learning Training: Trains complex neural networks (transformers, CNNs, RNNs) in less time, which is crucial for advanced AI research and production systems.
  - Inference: Deploys large AI models at scale with low latency, vital for real-time services like speech recognition, recommendation engines, or image classification.

- Data Analytics & Big Data
  - GPU-Accelerated Database Operations: Tools like RAPIDS or BlazingSQL leverage GPU compute for interactive big data queries, reducing CPU overhead.
  - ETL & Streaming: Parallel GPU operations accelerate transformations of massive datasets, so insights can be drawn from streaming data in a timely way.

- Professional Visualization & Rendering
  - Offline Rendering: The A100 has no RT Cores or display outputs, so it suits compute-based, offline rendering for design, architectural previews, or media production rather than real-time ray-traced visualization.
  - Large-Scale 3D Projects: 80GB of memory accommodates huge 3D scenes, multiple 4K/8K video streams, or advanced volumetric data.

- Virtualization & Cloud
  - Multi-User GPU: With MIG, vGPU, or container technology, data centers can share A100 compute among multiple workloads or user sessions, increasing GPU utilization.
  - Cloud AI Services: HPC and AI cloud service providers can integrate the A100 to offer flexible, on-demand GPU acceleration to enterprise clients.
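For the training and inference workloads listed above, numeric precision largely determines how big a model the card can hold. The sketch below is a rough upper bound only. It assumes 80 GB means 80 × 10^9 bytes, all of it usable, and counts model weights alone, ignoring activations, optimizer state, and framework overhead, which in practice take a large share of memory. The 30-billion-parameter model is hypothetical.

```python
MEMORY_BYTES = 80 * 10**9  # assumption: decimal gigabytes, all of it usable

# Storage size per parameter for the precisions mentioned in this listing.
BYTES_PER_PARAMETER = {
    "FP32": 4,
    "FP16/BF16": 2,
    "INT8": 1,
}


def max_parameters(memory_bytes: int, bytes_per_parameter: int) -> int:
    """Upper bound on parameter count when memory holds weights only."""
    return memory_bytes // bytes_per_parameter


def fits(parameter_count: int, precision: str, memory_bytes: int = MEMORY_BYTES) -> bool:
    """True if the weights alone fit; real workloads need extra headroom."""
    return parameter_count * BYTES_PER_PARAMETER[precision] <= memory_bytes


for precision, size in BYTES_PER_PARAMETER.items():
    billions = max_parameters(MEMORY_BYTES, size) / 1e9
    print(f"{precision:>9}: up to {billions:.0f} billion parameters (weights only)")

# Hypothetical 30-billion-parameter model: too large in FP32, fits in FP16/BF16.
print(fits(30 * 10**9, "FP32"), fits(30 * 10**9, "FP16/BF16"))
```

Halving the bytes per parameter doubles the model size that fits, which is one reason reduced-precision inference is common on memory-bound deployments.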

Why Choose the NVIDIA A100 PCIe Graphics Card 80 GB?

- Unparalleled Compute & Memory
  - The Ampere-based design and 80GB of HBM2e memory deliver top-tier performance for HPC, AI training, and big-data analytics.

- Versatile Data Center Integration
  - As a PCIe card, it fits into standard servers, HPC nodes, or cloud infrastructures, scaling with multi-GPU configurations via NVLink when needed.

- Enhanced AI & HPC Software Ecosystem
  - Full compatibility with NVIDIA’s extensive HPC/AI libraries, containers (NGC), and enterprise drivers ensures smooth deployment, updates, and performance tuning.

- Energy Efficiency & Reliability
  - Ampere optimizations reduce power consumption per TFLOP, while enterprise driver support fosters stable and predictable performance over the GPU’s lifecycle.

- Future-Proof Architecture
  - Third-gen Tensor Cores, Multi-Instance GPU (MIG) support, and robust double-precision HPC capabilities keep the A100 relevant for upcoming data center applications and workloads.

| Specification | Value |
| --- | --- |
| Product Name | NVIDIA A100 PCIe 80 GB |
| Manufacturer | NVIDIA |
| Memory | 80 GB HBM2e |
| Memory Bandwidth | 1935 GB/s |
| Memory Bus | 5120-bit |
| Base Clock | 1065 MHz |
| Boost Clock | 1410 MHz |
| PCIe Interface | PCIe Gen 4.0 x16 |
| TDP (Thermal Design Power) | 300 W |
| Power Connectors | 1x 8-pin EPS |
| Display Outputs | None (Headless server card) |
| Dimensions | 267 mm x 111.15 mm x 40.4 mm |
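The PCIe Gen 4.0 x16 row in the table sets an upper bound on how fast data can move between host memory and the card. The sketch below estimates that bound. It assumes the PCIe 4.0 figures of 16 GT/s per lane with 128b/130b line encoding and ignores packet and protocol overhead, so achievable throughput will be somewhat lower. The function names are illustrative.

```python
PCIE4_GT_PER_S = 16.0            # raw transfer rate per lane, PCIe 4.0
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding
LANES = 16


def pcie_bandwidth_gb_s(gt_per_s: float, lanes: int, efficiency: float) -> float:
    """Theoretical one-direction bandwidth in GB/s (one bit per transfer per lane)."""
    return gt_per_s * lanes * efficiency / 8


def transfer_seconds(size_gb: float, bandwidth_gb_s: float) -> float:
    """Lower bound on the time to move size_gb across the link."""
    return size_gb / bandwidth_gb_s


link = pcie_bandwidth_gb_s(PCIE4_GT_PER_S, LANES, ENCODING_EFFICIENCY)
print(f"PCIe 4.0 x16: {link:.1f} GB/s per direction")
print(f"Filling 80 GB over the link: at least {transfer_seconds(80, link):.1f} s")
```

This works out to about 31.5 GB/s per direction, so filling the full 80 GB takes at least 2.5 seconds. On-card memory bandwidth is well over an order of magnitude higher, which is why workloads perform best when their data stays resident in GPU memory.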