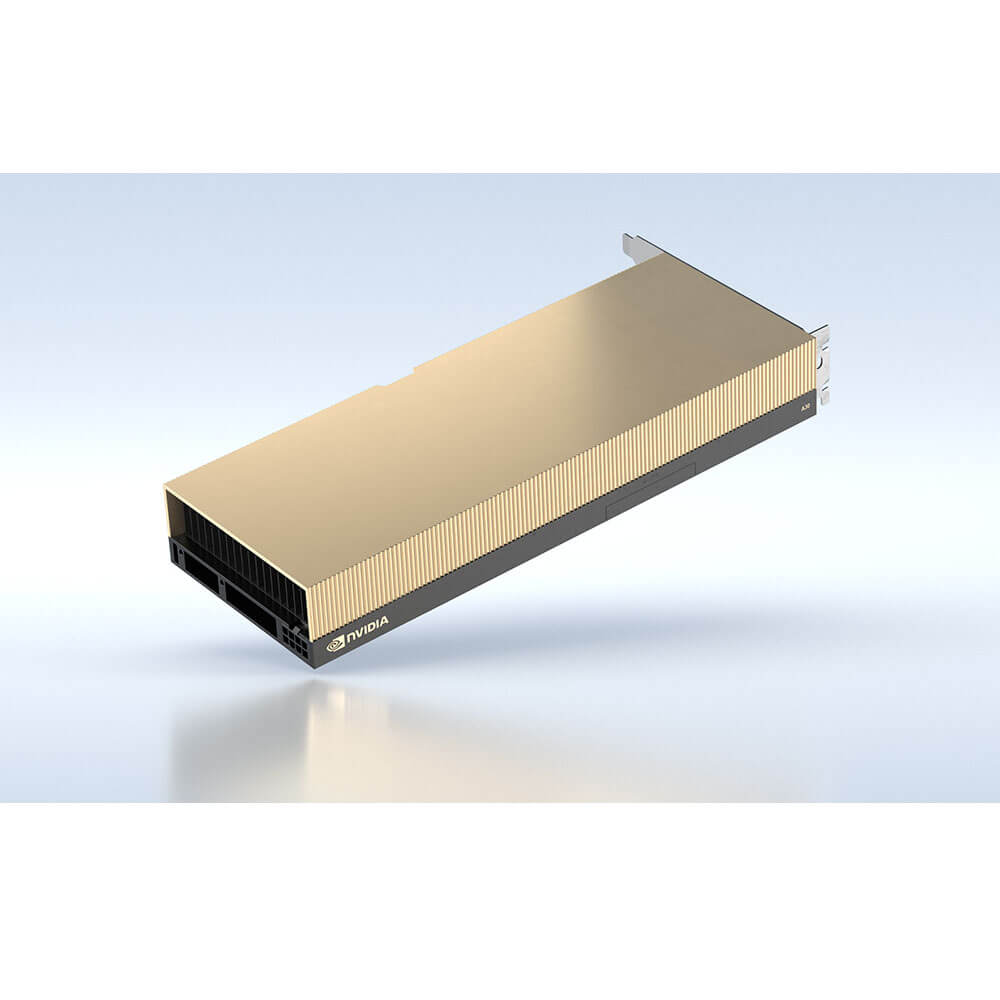

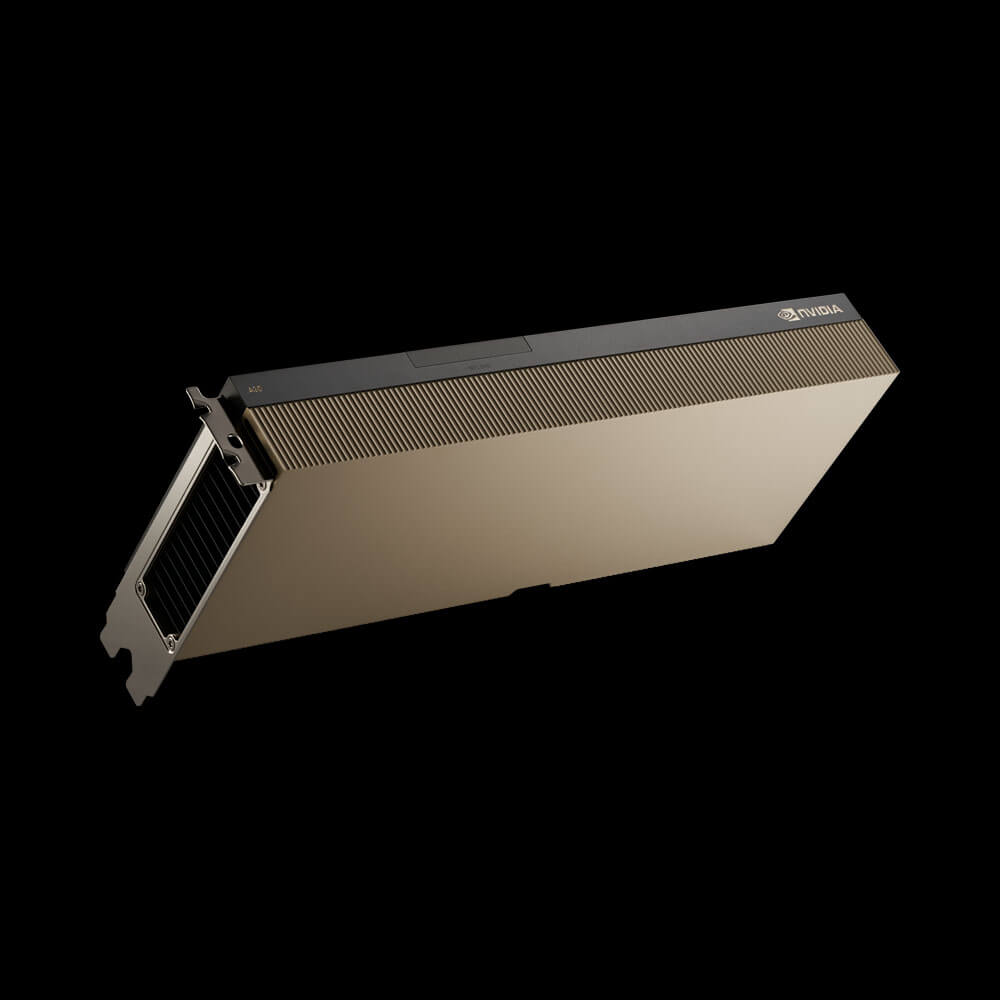

NVIDIA A30 PCIe Graphics Card 24 GB

The NVIDIA A30 PCIe Graphics Card with 24GB memory is engineered for AI, HPC, and data analytics workloads. Powered by the NVIDIA Ampere architecture, it features advanced Tensor Cores and multi-instance GPU (MIG) support, delivering high efficiency for AI training and inference. Ideal for data centers and enterprise applications, the A30 PCIe ensures exceptional performance, scalability, and energy efficiency.

NVIDIA PNY Tesla A30 24GB GRAPHICS ACCELERATOR A30 PCIE Retail SCB NVA30TCGPU-KIT

Min. Quantity – 5 Nos

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹477,500 ₹755,000

The A30 PCIe is a professional graphics card by NVIDIA, launched on April 12, 2021. It is built on a 7 nm process and based on the GA100 graphics processor. The card does not support DirectX 11 or DirectX 12, so it is not intended for gaming. GA100 is a large chip, with a die area of 826 mm² and 54.2 billion transistors. Unlike the fully unlocked DRIVE A100 PROD, which uses the same GPU with all 6,912 shaders enabled, the A30 PCIe has some shading units disabled to reach its target count: 3,584 shading units, 224 texture mapping units, and 96 ROPs. It also includes 224 Tensor Cores, which speed up machine learning applications. NVIDIA has paired the GPU with 24 GB of HBM2e memory on a 3072-bit memory interface. The GPU runs at a base frequency of 930 MHz and boosts up to 1440 MHz; the memory runs at 1215 MHz.
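As a rough cross-check of the figures above, the memory bandwidth and peak FP32 throughput can be estimated from the bus width, clocks, and shading unit count. The sketch below is illustrative only: the function names are hypothetical, and it assumes two data transfers per memory clock (double data rate) and two floating-point operations per shading unit per clock (one fused multiply-add).

```python
def memory_bandwidth_gb_s(bus_width_bits, memory_clock_mhz, transfers_per_clock=2):
    """Estimate memory bandwidth in GB/s.

    Assumption: the memory moves data twice per clock (double data rate).
    """
    bytes_per_transfer = bus_width_bits / 8
    return bytes_per_transfer * memory_clock_mhz * 1e6 * transfers_per_clock / 1e9


def peak_fp32_tflops(shading_units, boost_clock_mhz, flops_per_clock=2):
    """Estimate peak FP32 throughput in TFLOPS.

    Assumption: each shading unit retires one fused multiply-add
    (counted as 2 floating-point operations) per clock at boost frequency.
    """
    return shading_units * flops_per_clock * boost_clock_mhz * 1e6 / 1e12


# Figures taken from the description above.
bandwidth = memory_bandwidth_gb_s(bus_width_bits=3072, memory_clock_mhz=1215)
fp32 = peak_fp32_tflops(shading_units=3584, boost_clock_mhz=1440)

print(f"Estimated memory bandwidth: {bandwidth:.1f} GB/s")
print(f"Estimated peak FP32 throughput: {fp32:.1f} TFLOPS")
```

Under these assumptions the estimate comes to about 933 GB/s of memory bandwidth and about 10.3 TFLOPS of peak FP32 throughput. These are theoretical ceilings; sustained performance depends on the workload.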

The NVIDIA A30 PCIe is a dual-slot card that draws power from an 8-pin EPS power connector, with power draw rated at 165 W maximum. It has no display connectivity, as it is not designed to have monitors connected to it. The card connects to the rest of the system over a PCI-Express 4.0 x16 interface and measures 267 mm in length and 112 mm in width.

Key Features:

- Ampere Architecture

- CUDA Cores

- The A30 GPU includes 3,584 CUDA Cores, enabling parallelized acceleration for compute-heavy tasks, from AI model training to large-scale simulations and data analytics.

- No Dedicated RT Cores

- The GA100 processor is a compute-focused design without the dedicated ray-tracing cores found on Ampere workstation and gaming GPUs, so ray-traced rendering is not hardware-accelerated on the A30.

- Third-Generation Tensor Cores

- Delivers expanded support for mixed-precision computations (FP16, BF16, TF32, etc.), significantly boosting deep learning model throughput and accelerating AI-driven applications like natural language processing, computer vision, and recommendation systems.

- 24 GB ECC HBM2e Memory

- High-Capacity VRAM

- With 24 GB of HBM2e memory on a 3072-bit interface delivering 933 GB/s of bandwidth, the A30 handles large datasets and large neural network models with fewer memory bottlenecks.

- Error-Correcting Code (ECC)

- ECC memory ensures data integrity and reliability—crucial for scientific computing, financial modeling, and AI training pipelines where accuracy is paramount.

- Diverse Precision & AI Acceleration

- Mixed-Precision Computing

- Offers faster training and inference for AI tasks by exploiting flexible precision modes like FP16, BF16, and INT8. This approach reduces calculation times while maintaining model accuracy.

- NVIDIA Software Ecosystem

- Compatible with NVIDIA’s HPC, AI, and ML libraries—such as CUDA-X AI, cuBLAS, cuDNN, TensorRT—for streamlined development, scaling, and deployment of advanced data-driven solutions.

- High-Performance Computing (HPC) Integration

- Robust Double Precision

- For scientific HPC workloads, the A30 runs double-precision (FP64) math in hardware, including on its Tensor Cores, enabling accurate simulations in fluid dynamics, molecular modeling, and physics research.

- Scalable Multi-GPU Configurations

- The A30 supports NVLink through a bridge that links two cards, giving a faster GPU-to-GPU path than PCIe for multi-GPU HPC nodes.

- Energy Efficiency & Data Center Adaptability

- Optimized Power Usage

- Rated at a maximum power draw of 165 W, balancing performance with power efficiency, which is vital for data center deployments with stringent power and cooling constraints.

- PCIe 4.0 Interface

- Delivers high-bandwidth communication with CPUs and other system components, ensuring minimal data transfer bottlenecks.

- Enterprise-Ready Reliability

- 24/7 Continuous Operation

- Engineered for round-the-clock workloads, essential for HPC labs, enterprise data centers, and large-scale AI services.

- Long Lifecycle & Driver Support

- NVIDIA’s professional drivers ensure stability, security patches, and consistent performance across operating system and software updates.

- Professional Visualization Capabilities

- Server-Side Rendering

- With no display outputs and no dedicated RT Cores, the A30 suits compute-side rendering and server-hosted visualization for 3D artists, architects, and engineers rather than direct workstation use.

- NVIDIA vGPU & Virtualization

- The A30 supports Multi-Instance GPU (MIG) partitioning and NVIDIA virtual GPU software, enabling multiple users or workloads to share a single GPU effectively.

Applications:

- Artificial Intelligence & Machine Learning

- Deep Learning Training & Inference: Tensor Cores accelerate neural network computations for tasks like image classification, NLP, or recommendation systems.

- Data Analytics: Parallel processing shortens analysis times for big data, predictive modeling, or real-time analytics pipelines.

- High-Performance Computing (HPC)

- Scientific Simulations: Handles compute-heavy workloads in weather forecasting, molecular modeling, or fluid dynamics with GPU-accelerated HPC frameworks.

- Research & Development: Universities, government labs, and private R&D rely on fast GPU-based computations to expedite discoveries.

- Professional Visualization

- GPU-Accelerated Rendering: Architects, product designers, and media producers can offload rendering to server-hosted GPUs for photorealistic previews and faster iteration cycles.

- Immersive VR & AR: Delivers advanced visualization experiences for design reviews, training simulations, or collaborative engineering tasks.

- Virtualization & Cloud Services

- Multi-User VDI: Virtual Desktop Infrastructure scenarios for engineering or creative professionals who need GPU-accelerated desktops on demand.

- Hybrid Cloud Deployments: Integrates into HPC clusters or cloud service offerings for flexible GPU resource allocation.

- Data Center & Enterprise AI

- ML Model Ops: Speeds up training, reduces inference latencies, and scales AI across large organizations or e-commerce platforms.

- Edge Computing: Deployed in remote sites or edge servers that need robust AI or HPC capabilities with efficient power use.

Why Choose the NVIDIA A30 PCIe Graphics Card 24 GB?

- Multi-Purpose Performance

- Blends HPC strength, AI acceleration, and professional visualization, covering an extensive range of enterprise use-cases.

- Ample 24 GB HBM2e ECC Memory

- Ensures reliability and capacity for big data, large AI models, and memory-hungry 3D scenes without frequent memory constraints.

- Energy Efficiency for Data Centers

- Operates at a 165 W TDP, reducing operational costs and cooling requirements, which makes it well suited to large-scale or continuously running deployments.

- NVIDIA Ecosystem Integration

- Enjoy support from the NVIDIA HPC/AI software stack, enterprise-grade drivers, and virtualization technologies, simplifying management and updates.

- Future-Proof Architecture

- Ampere-based design with third-gen Tensor Cores and Multi-Instance GPU (MIG) support positions the card for emerging workloads and next-generation applications.

| Specification | Value |
| --- | --- |
| Product Name | NVIDIA A30 PCIe |
| Manufacturer | NVIDIA |
| Base Clock Speed | 930 MHz |
| Boost Clock Speed | 1,440 MHz |
| Memory Clock Speed | 1,215 MHz |
| Memory | 24 GB HBM2e |
| Memory Bandwidth | 933 GB/s |
| Memory Interface | 3072-bit |
| PCIe Interface | PCIe Gen 4.0 x16 |
| TDP (Thermal Design Power) | 165 W |
| Recommended Power Supply | Minimum 550 W |
| Dimensions | 10.5 inches x 4.4 inches (267 mm x 112 mm) |