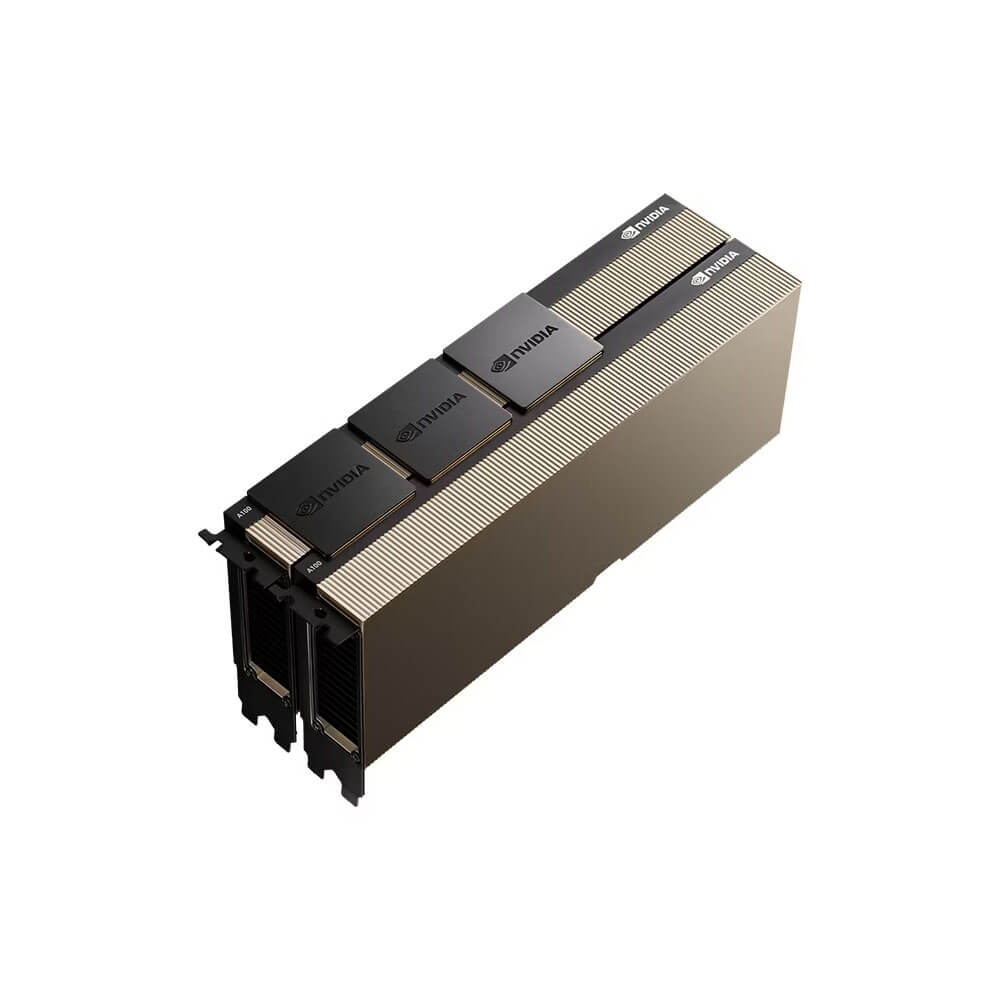

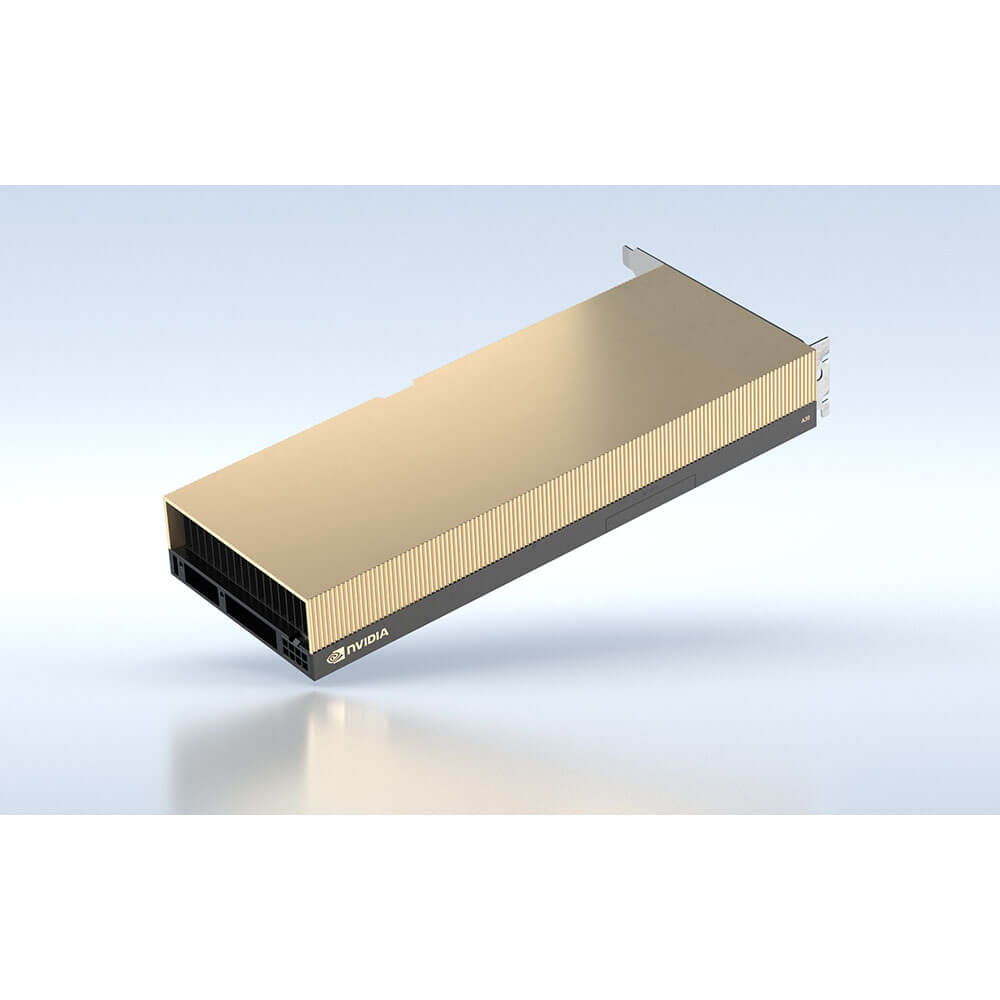

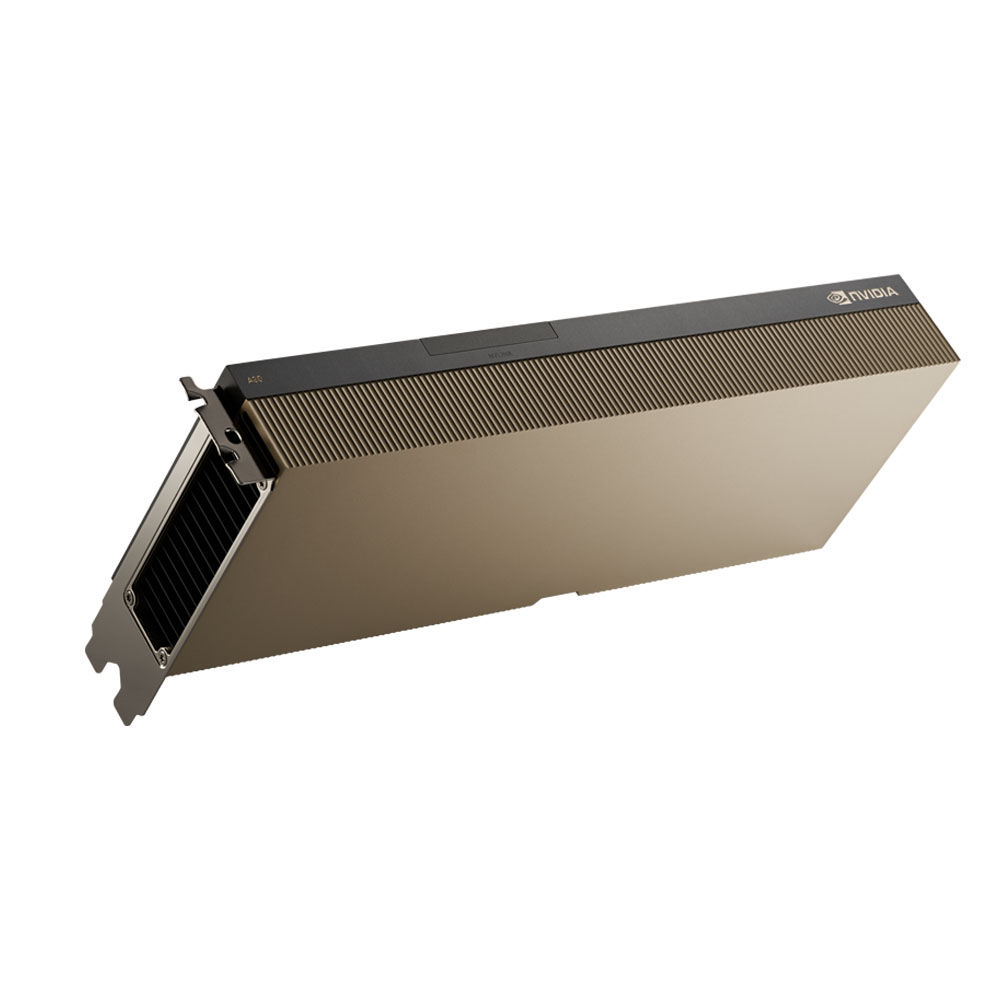

NVIDIA A30X Graphics Card 24 GB

The NVIDIA A30X Graphics Card with 24GB memory is a high-performance solution designed for AI, HPC, and data analytics. Built on the NVIDIA Ampere architecture, it features advanced Tensor Cores and multi-instance GPU (MIG) capabilities, enabling efficient AI training, inference, and analytics. Ideal for data centers and research labs, the A30X delivers exceptional scalability, energy efficiency, and compute power.

NVIDIA PNY Tesla A30 24GB GRAPHICS ACCELERATOR A30 PCIE Retail SCB NVA30TCGPU-KIT

Min. Quantity – 5 Nos

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹570,000 ₹975,000

The A30X is a professional graphics card by NVIDIA, launched on April 12th, 2021. It is built on the 7 nm process and based on the GA100 graphics processor. The card does not support DirectX 11 or DirectX 12, so it is not suited to running games. The GA100 graphics processor is a large chip with a die area of 826 mm² and 54.2 billion transistors. Unlike the fully unlocked DRIVE A100 PROD, which uses the same GPU with all 6912 shaders enabled, the A30X has some shading units disabled to reach the product's target shader count. It features 3584 shading units, 224 texture mapping units, and 96 ROPs, along with 224 tensor cores that speed up machine learning applications. NVIDIA has paired 24 GB of HBM2e memory with the A30X, connected over a 3072-bit memory interface. The GPU runs at a base frequency of 1035 MHz and can boost up to 1440 MHz; the memory runs at 1593 MHz.

The NVIDIA A30X draws power from one 16-pin power connector, with power draw rated at 230 W maximum. The device has no display connectivity, as it is not designed to have monitors connected to it. It connects to the rest of the system over a PCI-Express 4.0 x8 interface. The card measures 267 mm in length and 112 mm in width, and uses a dual-slot cooling solution.
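The figures quoted above can be cross-checked with a little arithmetic. The sketch below derives peak memory bandwidth, the PCIe link bandwidth, and the share of GA100 shaders that are enabled. The function names are illustrative, and three inputs are assumptions not stated in the listing: that the HBM2e memory transfers data twice per clock, that PCIe 4.0 runs at 16 GT/s per lane, and that it uses 128b/130b encoding.

```python
# Figures taken from the description above.
MEMORY_BUS_BITS = 3072
MEMORY_CLOCK_MHZ = 1593
ENABLED_SHADERS = 3584
FULL_GA100_SHADERS = 6912
PCIE_LANES = 8

# Assumptions (not stated in the listing).
TRANSFERS_PER_CLOCK = 2        # double data rate memory
PCIE4_GT_PER_S = 16.0          # raw PCIe 4.0 rate per lane
PCIE4_ENCODING = 128 / 130     # 128b/130b line encoding overhead


def memory_bandwidth_gb_s(bus_bits: int, clock_mhz: float) -> float:
    """Peak memory bandwidth in GB/s for a given bus width and memory clock."""
    bytes_per_transfer = bus_bits / 8
    transfers_per_second = clock_mhz * 1e6 * TRANSFERS_PER_CLOCK
    return bytes_per_transfer * transfers_per_second / 1e9


def pcie4_bandwidth_gb_s(lanes: int) -> float:
    """Approximate usable PCIe 4.0 bandwidth per direction in GB/s."""
    return lanes * PCIE4_GT_PER_S * PCIE4_ENCODING / 8


def enabled_fraction(enabled: int, total: int) -> float:
    """Fraction of the full chip's shading units that are enabled."""
    return enabled / total


mem_bw = memory_bandwidth_gb_s(MEMORY_BUS_BITS, MEMORY_CLOCK_MHZ)
pcie_bw = pcie4_bandwidth_gb_s(PCIE_LANES)
shader_share = enabled_fraction(ENABLED_SHADERS, FULL_GA100_SHADERS)

print(f"Memory bandwidth: {mem_bw:.1f} GB/s")
print(f"PCIe 4.0 x{PCIE_LANES}: {pcie_bw:.2f} GB/s per direction")
print(f"Enabled shaders: {shader_share:.1%} of the full GA100")
```

Under these assumptions, the on-card memory is roughly two orders of magnitude faster than the host link, which is why keeping working data resident in the 24 GB of GPU memory matters for throughput.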

Key Features:

- Ampere Architecture
  - CUDA Cores: The A30X has 3584 CUDA cores (shading units), providing the parallel processing power needed for data processing, HPC simulations, and AI training.
  - No Dedicated RT Cores: The GA100 processor is a compute-focused chip without ray tracing cores, so any ray tracing in rendering workloads runs on the CUDA cores rather than on dedicated hardware.
  - Third-Generation Tensor Cores: The 224 Tensor Cores accelerate machine learning model training, inference, and neural network workloads, raising throughput for HPC and data analytics.
- 24GB of High-Bandwidth Memory
  - Generous VRAM: With 24GB of HBM2e memory, the A30X handles memory-intensive workflows such as large AI models, complex 3D scenes, or multiple 4K video streams with less risk of running out of GPU memory.
  - ECC Support: Error-Correcting Code (ECC) helps maintain data integrity for mission-critical tasks, reducing errors in long-running computations or AI workflows.
- AI and Data Science Acceleration
  - Tensor Core Enhancements: Supports mixed-precision computing (FP16, BF16, etc.), speeding up deep learning training and inference.
  - NVIDIA AI Software Ecosystem: Compatible with CUDA-X AI, NVIDIA's software stack for data science and machine learning, for development, training, and deployment.
- High-Performance Computing (HPC)
  - Double-Precision Performance: Offers FP64 throughput for scientific applications like fluid dynamics, molecular simulations, and astrophysical computations.
  - Multi-GPU Scaling: Some A-series GPUs support NVLink, enabling multiple GPUs to share memory and compute capacity for large-scale HPC clusters (availability of NVLink on the A30X may depend on final product design).
- Professional Visualization and Rendering
  - Rendering: Rendering of complex models for design, architectural, or media workflows runs as a compute workload on the CUDA cores, since the card has no dedicated RT Cores.
  - Display Output: The card has no display connectors, so it cannot drive monitors directly.
- Enterprise-Grade Reliability
  - 24/7 Operation: Engineered for round-the-clock usage in HPC data centers, AI labs, or design studios that need continuous GPU acceleration.
  - Thermal and Power Optimizations: Intended for server enclosures with optimized airflow, which helps maintain stable performance under heavy loads.
- NVIDIA Software Stack and Management
  - NVIDIA NGC: Access containers, AI frameworks, and HPC software from the NVIDIA NGC catalog, simplifying environment setup and deployment.
  - Enterprise Driver Support: Long-lifecycle, stable release branch drivers support mission-critical or large-scale production environments.
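To illustrate why the mixed-precision computing mentioned under Key Features pairs low-precision inputs with a wider accumulator, here is a minimal CPU-only sketch using Python's standard `struct` module. It is not GPU code and does not use any NVIDIA API. The helper names are hypothetical, and Python's 64-bit float stands in for the wider accumulator a Tensor Core would use.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def sum_fp16(values):
    """Accumulate entirely in FP16: every partial sum is rounded to FP16."""
    acc = 0.0
    for v in values:
        acc = to_fp16(acc + to_fp16(v))
    return acc


def sum_mixed(values):
    """Mixed precision: FP16 inputs, but a wider accumulator.

    Assumption: Python's 64-bit float stands in for the wider accumulator.
    """
    acc = 0.0
    for v in values:
        acc += to_fp16(v)
    return acc


values = [0.001] * 10_000  # the exact sum is 10.0

fp16_total = sum_fp16(values)
mixed_total = sum_mixed(values)

print(f"FP16 accumulator:  {fp16_total}")
print(f"Wider accumulator: {mixed_total}")
```

The pure FP16 sum stalls well short of 10 once each increment falls below half the spacing between adjacent FP16 values, while the mixed-precision sum stays close to the exact result. That is the trade mixed-precision arithmetic makes: compact inputs for speed, a wider accumulator for accuracy.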

Applications:

- AI and Machine Learning
  - Deep Learning Training & Inference: Third-gen Tensor Cores accelerate neural networks used in NLP, computer vision, and recommendation systems.
  - Data Analytics: Parallel processing speeds up data wrangling, real-time analytics, and model building for big data solutions.
- High-Performance Computing (HPC)
  - Scientific Simulations: Simulate complex phenomena in areas like molecular dynamics, climate modeling, or fluid dynamics with GPU-accelerated computations.
  - Research Labs: Universities, government institutions, and private R&D teams benefit from accelerated HPC tasks, reducing time to results.
- Professional Visualization
  - Rendering Farms: Compute-based rendering for photorealistic design or architectural work, supporting rapid prototyping and design iteration. The card has no dedicated RT Cores and no display outputs.
  - Media & Entertainment: Speeds up GPU-accelerated stages of 8K video editing, color grading, and visual effects in post-production workflows.
- Data Center & Cloud
  - Virtualized GPU: Some A-series GPUs support vGPU technology, allowing multiple users or containerized tasks to share GPU resources in a data center environment.
  - Cloud Services: Third-party providers can offer GPU-accelerated services for HPC, AI, or 3D rendering on demand.
- Edge Computing
  - Compact HPC Deployments: Deployed in edge servers to handle AI inference, real-time analytics, or rendering tasks closer to data sources.

Why Choose the NVIDIA A30X Graphics Card 24 GB?

- Robust Compute Power
  - Combines 3584 CUDA cores with 224 third-generation Tensor Cores for HPC, AI, and compute-based rendering tasks.
- 24GB of Memory for Complex Workloads
  - Enough GPU memory to hold large AI models, 3D datasets, or multiple 4K/8K video streams at once.
- AI Acceleration
  - Third-generation Tensor Cores provide efficient deep learning training and inference. The GA100 processor has no dedicated RT Cores, so ray tracing is not hardware-accelerated.
- Data Center-Ready Reliability
  - Suited for continuous 24/7 operation, offering a stable hardware base for mission-critical tasks in HPC, design, or production pipelines.
- NVIDIA Ecosystem Integration
  - Supports NVIDIA's HPC, AI, and enterprise software stacks, with driver releases that maintain security, performance, and stability over time.

| Specification | Value |
| --- | --- |
| Product Name | NVIDIA A30X |
| Manufacturer | NVIDIA |
| Base Clock | 1035 MHz |
| Boost Clock | 1440 MHz |
| Memory | 24 GB HBM2e |
| Memory Interface | 3072-bit |
| Memory Bandwidth | Approx. 1,223 GB/s (3072-bit at 1593 MHz, double data rate) |
| TDP (Thermal Design Power) | 230 W |
| Power Connector | 1 x 16-pin Power Connector |
| PCIe Interface | PCIe Gen 4.0 x8 |
| Display Outputs | None |
| Maximum Resolution | Not applicable (no display outputs) |
| Dimensions | 267 mm (L) x 112 mm (H) x 40 mm (W), dual-slot |
| Recommended PSU | 750 W |