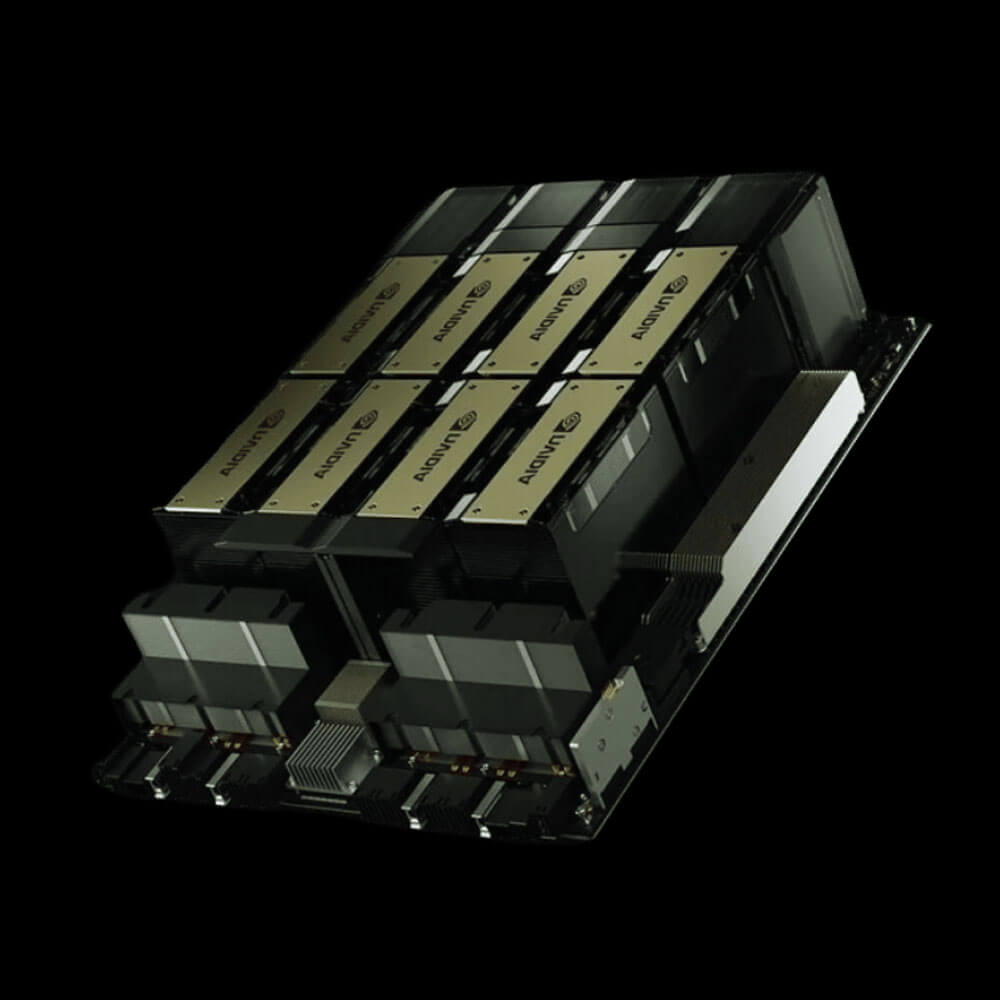

NVIDIA B100 Blackwell AI GPU 192GB 700W

The NVIDIA B100 Blackwell AI GPU is a next-generation accelerator built for large-scale AI, deep learning, and HPC workloads. With 192GB of HBM3e memory, over 8 TB/s of memory bandwidth, and advanced Tensor Cores with Transformer Engine, the B100 is designed for training massive AI models and running real-time inference. It supports NVLink 5.0 and PCIe Gen5 and has a TDP of 700W, targeting data center AI infrastructure.

Min. Quantity – 5 Nos

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹559,999 (promotional price), ₹800,000 (regular price)

The NVIDIA B100 Blackwell AI GPU is a state-of-the-art processor purpose-built for next-generation artificial intelligence (AI) and high-performance computing (HPC). Leveraging NVIDIA’s cutting-edge Blackwell architecture, the B100 is engineered to deliver unprecedented performance in large-scale AI model training, inference, and data-centric workloads with remarkable energy efficiency.

With 192GB of HBM3e high-bandwidth memory and a thermal design power (TDP) of 700W, the B100 combines high computational throughput with NVLink 5.0 and PCIe Gen5 interconnects and Tensor Core acceleration for AI infrastructure and enterprise computing.

Key Features:

- NVIDIA Blackwell Next-Gen Architecture
  - Built on NVIDIA’s most advanced architectural platform, the B100 delivers exceptional parallelism, deep learning acceleration, and scalability for the most demanding AI and scientific applications.
- 192GB Unified High-Bandwidth Memory (HBM3e)
  - Massive Model Support: Easily runs the largest transformer models and AI workloads.
  - High-Speed Access: Eliminates memory bottlenecks to accelerate both training and inference tasks.
- AI-Optimized Tensor Processing Units
  - Next-Gen Tensor Cores: Designed for ultra-fast matrix computations, powering models like GPT, BERT, and large vision transformers.
  - Optional Ray Tracing Cores: Ideal for advanced simulation, scientific visualization, and real-time 3D rendering.
- High-Speed GPU Interconnect
  - NVLink 5.0: Facilitates direct high-bandwidth communication between GPUs in multi-GPU configurations.
  - PCIe Gen5 Interface: Provides maximum system bandwidth for optimal data movement and I/O operations.
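As a rough illustration of what 192GB of memory means for model size, the sketch below estimates whether a model's weights alone would fit on a single card. Only the 192GB figure comes from this listing; the function names, the 20% headroom reserved for activations and runtime overhead, and the example parameter counts are illustrative assumptions, not vendor guidance.

```python
# Rough sizing sketch: do a model's weights fit in one GPU's memory?
# All names are hypothetical; only the 192 GB capacity comes from the listing.

GPU_MEMORY_GB = 192  # HBM3e capacity stated in the listing (decimal GB assumed)

# Bytes per parameter for common numeric formats (standard widths).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "fp8": 1.0}


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Memory needed for the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_one_gpu(num_params: float, dtype: str, headroom: float = 0.2) -> bool:
    """True if the weights fit while leaving `headroom` (a fraction) free.

    The 20% default is an illustrative assumption covering activations,
    KV cache, and runtime overhead; real requirements vary by workload.
    """
    usable_gb = GPU_MEMORY_GB * (1.0 - headroom)
    return weight_footprint_gb(num_params, dtype) <= usable_gb


if __name__ == "__main__":
    # Example parameter counts are arbitrary round numbers, not specific models.
    for params in (7e9, 70e9, 175e9):
        for dtype in ("fp16", "fp8"):
            size = weight_footprint_gb(params, dtype)
            verdict = "fits" if fits_on_one_gpu(params, dtype) else "does not fit"
            print(f"{params / 1e9:.0f}B params @ {dtype}: {size:.0f} GB -> {verdict}")
```

Training typically needs several times more memory than the weights alone (gradients and optimizer state), so this estimate is closer to an inference-side check than a training budget.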

Applications:

- Artificial Intelligence & Machine Learning
  - Scalable training of large language models and generative AI systems.
  - Low-latency inference for cloud and edge deployment.
  - Research, experimentation, and rapid prototyping of next-gen AI frameworks.
- High-Performance Computing (HPC)
  - Accelerated simulations in domains like quantum physics, fluid dynamics, and computational chemistry.
  - Genomics and medical imaging processing pipelines.
  - Structural, seismic, and renewable energy modeling in engineering.
- Visualization & Digital Content Creation
  - CAD and digital twin applications with real-time rendering capabilities.
  - Virtual production environments requiring high memory and compute resources.
  - Photorealistic rendering and scientific visualization powered by ray tracing.

Why Choose NVIDIA B100 Blackwell AI GPU?

- Purpose-Built for AI & HPC at Scale
  - Architected to meet the extreme demands of AI supercomputing and industrial simulation.
- 192GB of Unified Memory
  - Industry-leading memory capacity for handling the most complex models and data pipelines without compromise.
- Extreme Parallel Performance
  - Delivers unrivaled compute density and throughput, ideal for multi-GPU clusters and distributed AI workloads.

| Specification | Details |
| --- | --- |
| Model | NVIDIA B100 Blackwell |
| Architecture | NVIDIA Blackwell (Next-Gen) |
| GPU Type | Data Center / AI Accelerator |
| TDP (Thermal Design Power) | 700W |
| Tensor Cores | 5th Generation Tensor Cores with Transformer Engine |
| Memory Capacity | 192GB HBM3e (Unified) |
| Memory Type | HBM3e |
| Memory Bandwidth | Over 8 TB/s |
| PCIe Interface | PCIe Gen 5.0 |
| NVLink | NVLink 5.0, up to 1.8 TB/s GPU-to-GPU bandwidth |
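To put the bandwidth figures in the specifications into perspective, the sketch below computes best-case transfer times from the listed peak numbers. It assumes decimal units (1 TB = 1000 GB), takes "over 8 TB/s" as exactly 8.0, and ignores latency and protocol overhead, so measured times on real hardware will be longer. The function name is hypothetical.

```python
# Best-case transfer-time sketch using the peak figures from the spec table.
# Assumptions: decimal units, no latency or protocol overhead.

MEMORY_CAPACITY_GB = 192      # HBM3e capacity from the listing
HBM_BANDWIDTH_TBPS = 8.0      # "Over 8 TB/s" in the listing; 8.0 used here
NVLINK_BANDWIDTH_TBPS = 1.8   # "up to 1.8 TB/s" GPU-to-GPU from the listing


def min_transfer_seconds(size_gb: float, bandwidth_tbps: float) -> float:
    """Idealized time to move `size_gb` at a peak rate of `bandwidth_tbps`."""
    if bandwidth_tbps <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / (bandwidth_tbps * 1000.0)  # convert TB/s to GB/s


if __name__ == "__main__":
    hbm = min_transfer_seconds(MEMORY_CAPACITY_GB, HBM_BANDWIDTH_TBPS)
    nvlink = min_transfer_seconds(MEMORY_CAPACITY_GB, NVLINK_BANDWIDTH_TBPS)
    print(f"Read all {MEMORY_CAPACITY_GB} GB from HBM once: {hbm * 1000:.1f} ms")
    print(f"Send all {MEMORY_CAPACITY_GB} GB over NVLink: {nvlink * 1000:.1f} ms")
```

The gap between the two numbers is one reason multi-GPU workloads try to keep most memory traffic local to each card and send only the data that must be shared over the interconnect.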