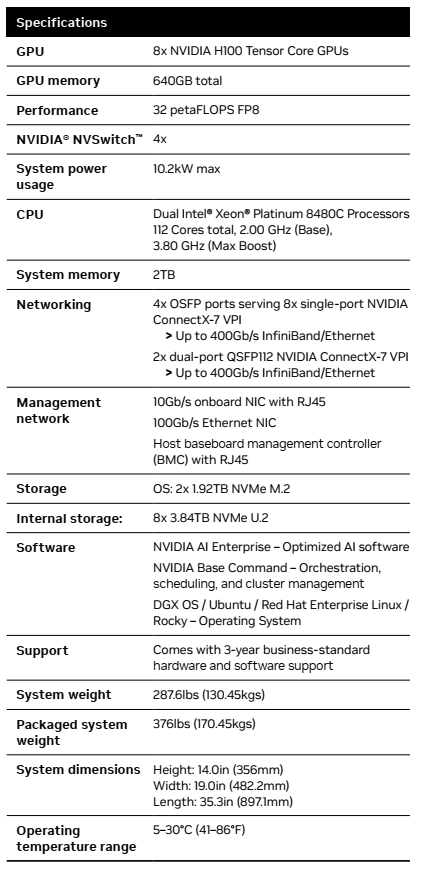

NVIDIA DGX H100 System for Education – P4387 – 640 GB Full – Standard Support – 3 Yr – DGXH-G640F+P2EDI36

The NVIDIA DGX H100 System is a high-performance AI system featuring 8× NVIDIA H100 Tensor Core GPUs, delivering up to 32 petaFLOPS of FP8 compute. Designed for large-scale AI training, inference, and analytics, it integrates ultra-fast NVLink/NVSwitch interconnects, dual Intel Xeon Platinum CPUs, 2 TB of system memory, high-speed NVMe storage, and 400 Gb/s networking, providing a turnkey platform for enterprise AI infrastructure.

Min. Quantity – 1 Nos

Note: The prices below are approximate and promotional. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

The NVIDIA DGX H100 is an enterprise-grade AI system engineered for training, inference, and analytics at scale. It is powered by eight NVIDIA H100 Tensor Core GPUs with a combined 640 GB of HBM3 memory (80 GB per GPU), interconnected through four NVIDIA NVSwitches for up to 7.2 TB/s of aggregate GPU-to-GPU bandwidth. This provides the compute power required for today’s most demanding AI workloads, including large language models, generative AI, and high-performance data analytics.
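The system-wide totals quoted above can be broken down per GPU with simple division. The following is a minimal sketch, not an official sizing tool: the constant and function names are illustrative, decimal units (1 TB/s = 1000 GB/s) are assumed, and an even split across the eight GPUs is assumed.

```python
# Totals quoted in this listing (system-wide figures).
NUM_GPUS = 8
TOTAL_HBM3_GB = 640        # combined GPU memory
TOTAL_NVLINK_GBPS = 7200   # 7.2 TB/s, written in GB/s (decimal units assumed)
TOTAL_FP8_PFLOPS = 32      # "up to" figure from the listing


def per_gpu(total, num_gpus=NUM_GPUS):
    """Split a system-wide total evenly across the GPUs (assumed even split)."""
    return total / num_gpus


if __name__ == "__main__":
    print(f"HBM3 per GPU:      {per_gpu(TOTAL_HBM3_GB):.0f} GB")
    print(f"Bandwidth per GPU: {per_gpu(TOTAL_NVLINK_GBPS):.0f} GB/s")
    print(f"FP8 per GPU:       {per_gpu(TOTAL_FP8_PFLOPS):.0f} petaFLOPS (up to)")
```

These per-GPU values are useful when checking whether a model's memory footprint fits on a single GPU or needs to be sharded across several.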

The system is driven by dual Intel Xeon Platinum 8480C CPUs with 2 TB of DDR5 system memory and is equipped with 10× NVIDIA ConnectX-7 400 Gb/s network adapters plus two BlueField-3 DPUs for accelerated networking, storage, and security offloads. Storage is handled by 2× 1.92 TB NVMe M.2 SSDs (RAID-1) for the OS and 8× 3.84 TB NVMe U.2 SSDs (RAID-0, about 30 TB in total) for high-speed data access.
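The RAID levels determine how much of the raw drive capacity is usable. The sketch below illustrates this under stated assumptions: the helper name `raid_usable_tb` is hypothetical, and the data array is assumed to be eight 3.84 TB drives, a layout consistent with the roughly 30 TB figure but worth confirming for the unit being quoted.

```python
def raid_usable_tb(level, drive_count, drive_tb):
    """Return usable capacity in TB for a simple RAID-0 or RAID-1 array.

    RAID-0 stripes data across all drives, so capacities add up.
    RAID-1 mirrors data, so usable capacity equals one drive.
    """
    if drive_count < 1:
        raise ValueError("drive_count must be at least 1")
    if level == 0:
        return drive_count * drive_tb
    if level == 1:
        return drive_tb
    raise ValueError(f"unsupported RAID level: {level}")


if __name__ == "__main__":
    # OS volume: two 1.92 TB M.2 drives, mirrored (from the listing).
    print(f"OS volume:   {raid_usable_tb(1, 2, 1.92):.2f} TB usable")
    # Data volume: eight 3.84 TB U.2 drives, striped (assumed layout).
    print(f"Data volume: {raid_usable_tb(0, 8, 3.84):.2f} TB usable")
```

Note that RAID-0 offers no redundancy: losing one drive loses the whole data volume, so it suits scratch and cache data rather than primary copies of datasets.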

Packaged in an 8U rackmount form factor with a peak power draw of around 10.2 kW, the DGX H100 ships with NVIDIA DGX OS, NVIDIA AI Enterprise, Base Command, and access to pretrained models and frameworks through NGC. It’s also fully supported by NVIDIA DGXperts, making it a turnkey platform for building, deploying, and scaling AI in the enterprise.