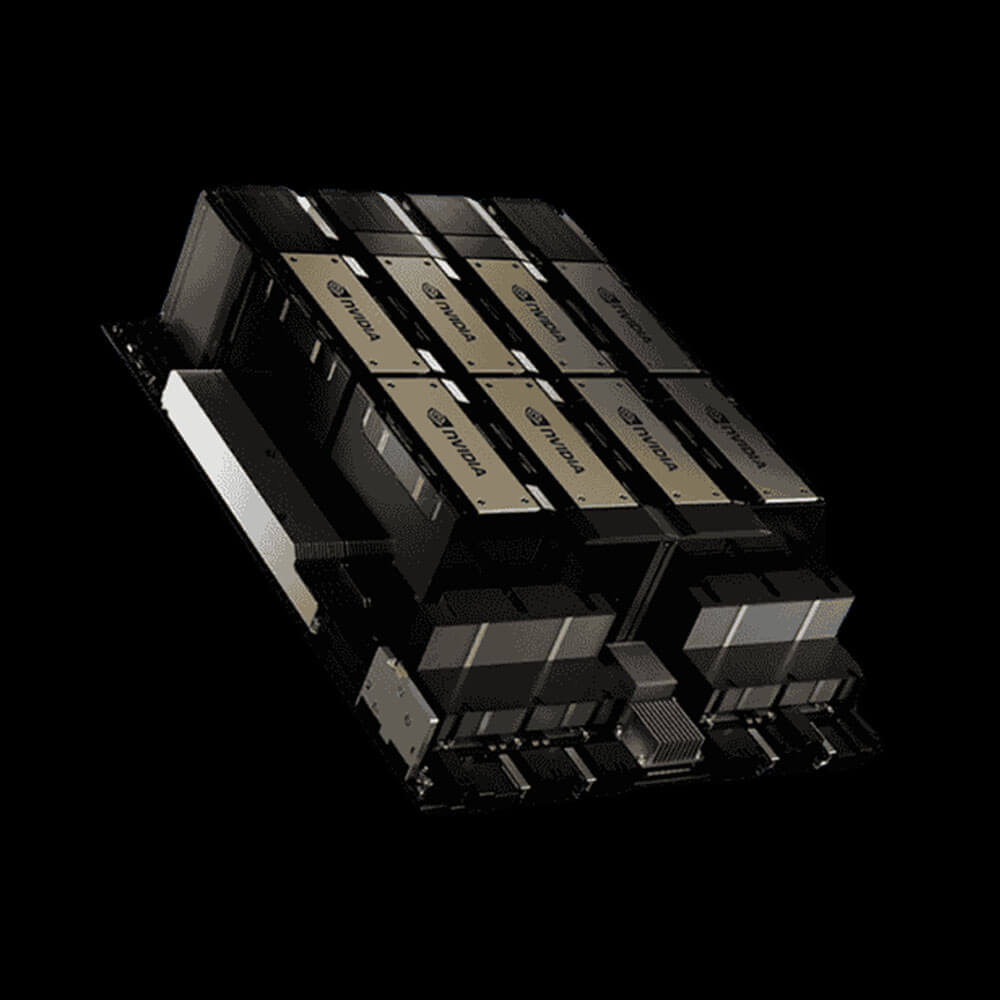

NVIDIA GPU Baseboard 8 H200 HBM3e

The NVIDIA GPU Baseboard with 8x H200 HBM3e GPUs is a high-performance compute platform designed for AI supercomputing, deep learning, and HPC workloads. Featuring eight NVIDIA H200 GPUs with a combined memory of over 1.1TB HBM3e and ultra-high bandwidth, this baseboard delivers exceptional throughput, scalability, and energy efficiency. With NVLink interconnect, PCIe Gen5 support, and next-gen Hopper architecture, it’s ideal for large-scale AI model training, inference, and data-intensive scientific simulations.

Min. Quantity – 5 Nos

Note: The prices below are approximate and promotional. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹559,999 ₹800,000

The NVIDIA GPU Baseboard with 8 H200 GPUs is a powerful, enterprise-grade compute platform engineered for large-scale AI, machine learning, and high-performance computing (HPC) workloads. Featuring eight NVIDIA H200 GPUs, each equipped with HBM3e high-bandwidth memory, this baseboard delivers unparalleled compute density, memory throughput, and interconnect performance for modern data centers.

Built on NVIDIA’s advanced Hopper architecture, the system supports end-to-end acceleration of transformer-based models, scientific simulations, and real-time inference pipelines—making it a cornerstone for AI supercomputing environments.

Key Features:

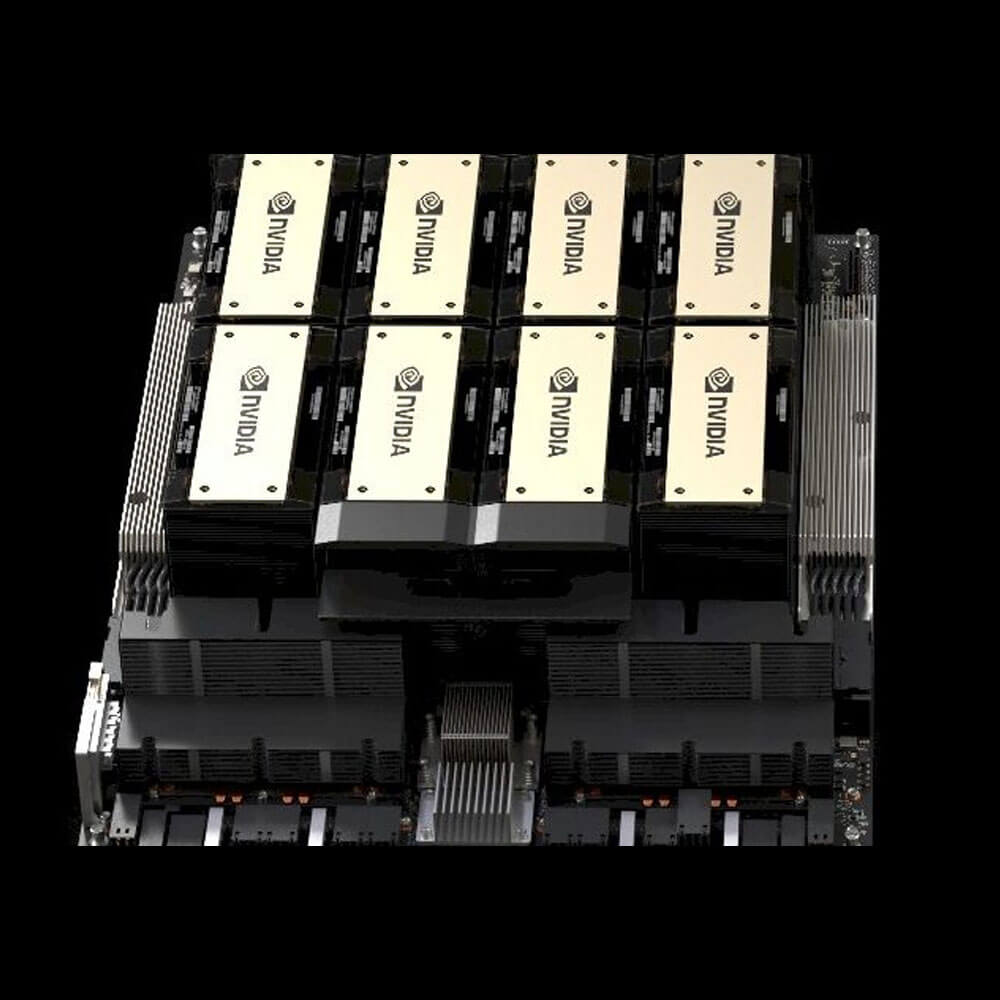

- 8x NVIDIA H200 GPUs
  - Built on the NVIDIA Hopper architecture, with fourth-generation Tensor Cores and native FP8 support for AI and HPC performance.

- Each GPU with 141GB HBM3e (Total ~1.13TB)
  - Large combined GPU memory supports training and deploying large AI models efficiently.

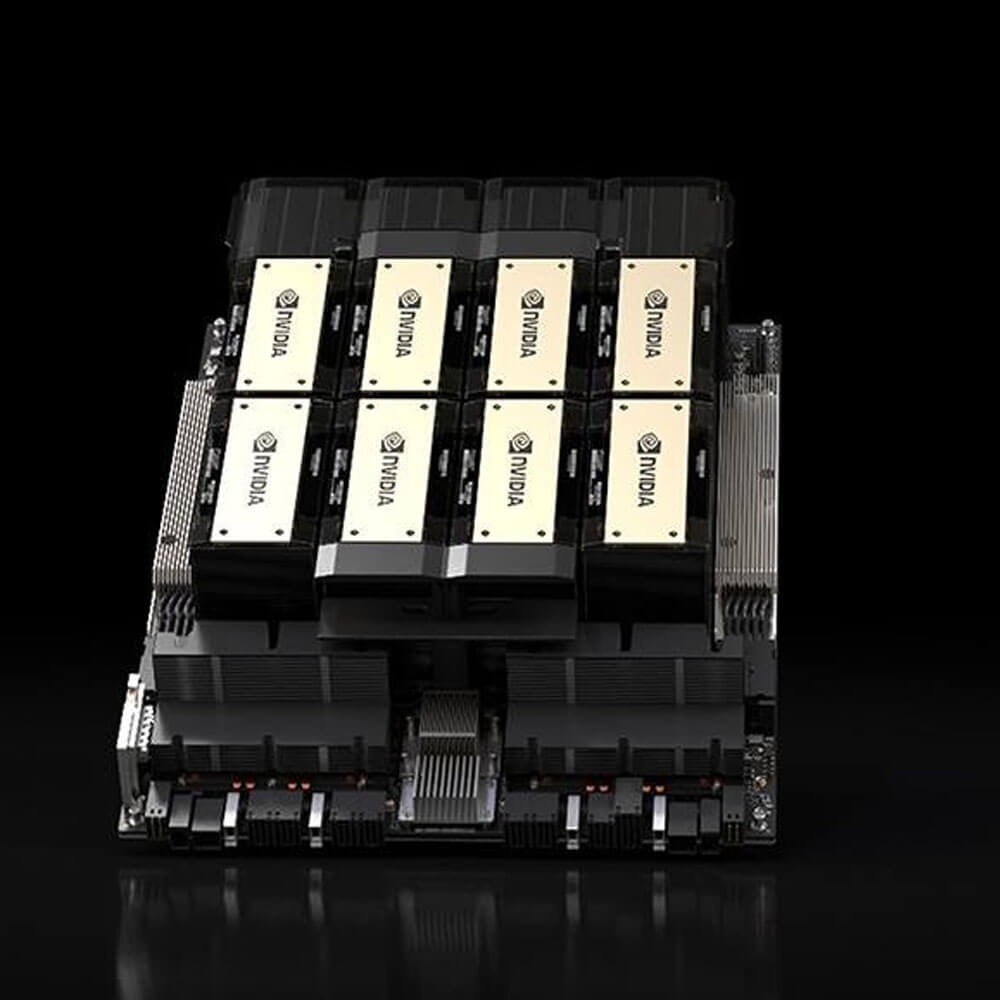

- Extreme Bandwidth
  - Each GPU provides 4.8TB/s of memory bandwidth, speeding data access for memory-bound model execution.

- Advanced Interconnect Fabric (NVLink)
  - High-speed NVLink provides low-latency GPU-to-GPU communication across the baseboard for multi-GPU scaling and distributed workloads.
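The capacity and bandwidth figures above can be checked with simple arithmetic: 8 × 141GB = 1,128GB (~1.13TB). The minimal Python sketch below, assuming ideal peak bandwidth, totals memory and bandwidth across the eight GPUs and estimates how long one GPU needs to read its full 141GB once. The function name `memory_summary` is hypothetical, chosen for illustration.

```python
# Figures taken from this listing: 8 GPUs, 141 GB HBM3e and 4.8 TB/s per GPU.
GPU_COUNT = 8
HBM_PER_GPU_GB = 141.0
BW_PER_GPU_TB_S = 4.8


def memory_summary(gpus: int = GPU_COUNT,
                   mem_gb: float = HBM_PER_GPU_GB,
                   bw_tb_s: float = BW_PER_GPU_TB_S) -> dict:
    """Idealized peak totals; real workloads see lower sustained bandwidth."""
    total_mem_gb = gpus * mem_gb
    aggregate_bw_tb_s = gpus * bw_tb_s
    # Time for one GPU to read its whole HBM once at peak bandwidth:
    # (GB * 1e9 bytes) / (TB/s * 1e12 bytes/s), converted to milliseconds.
    full_sweep_ms = (mem_gb * 1e9) / (bw_tb_s * 1e12) * 1e3
    return {
        "total_mem_gb": total_mem_gb,
        "total_mem_tb": total_mem_gb / 1000,
        "aggregate_bw_tb_s": aggregate_bw_tb_s,
        "full_sweep_ms": full_sweep_ms,
    }


if __name__ == "__main__":
    s = memory_summary()
    print(f"Total HBM3e: {s['total_mem_gb']:.0f} GB (~{s['total_mem_tb']:.2f} TB)")
    print(f"Aggregate bandwidth: {s['aggregate_bw_tb_s']:.1f} TB/s")
    print(f"One full HBM sweep per GPU: {s['full_sweep_ms']:.1f} ms")
```

Note that the 4.8TB/s figure is per GPU, so the baseboard-wide peak is roughly 38.4TB/s; each GPU still only reaches its own 141GB at that rate.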

Applications:

- Artificial Intelligence & Deep Learning
  - Training large-scale foundation and transformer models (LLMs, diffusion models, Vision Transformers).
  - Accelerated large-scale inference in data center and cloud deployments.
  - Generative AI, computer vision, and natural language processing.

- High-Performance Computing (HPC)
  - Scientific simulations in fluid dynamics, quantum mechanics, and climate modeling.
  - Genomic sequencing and biomedical research.
  - Structural and seismic analysis in engineering.

- Enterprise & Research AI Infrastructure
  - AI supercomputers and cloud hyperscalers.
  - University research clusters and innovation hubs.
  - Real-time analytics and digital twin platforms.
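To gauge which model sizes the ~1.13TB of combined memory can hold, a rough rule is memory ÷ bytes per parameter. The sketch below assumes the model is sharded evenly across all eight GPUs and uses common rule-of-thumb byte counts (1 byte for FP8 weights, 2 for BF16, about 16 for mixed-precision Adam training). These values, the 0.9 `usable_fraction` headroom and the function name `max_params_billions` are all assumptions for illustration, not vendor figures.

```python
# Bytes-per-parameter values are common rules of thumb (assumptions), not measurements.
BYTES_PER_PARAM = {
    "fp8_inference_weights": 1,    # FP8 weights only
    "bf16_inference_weights": 2,   # BF16/FP16 weights only
    "mixed_precision_adam": 16,    # BF16 weights + grads, FP32 master copy + two Adam moments
}


def max_params_billions(total_mem_gb: float, bytes_per_param: float,
                        usable_fraction: float = 0.9) -> float:
    """Largest parameter count (in billions) whose per-parameter state fits.

    usable_fraction is a hypothetical headroom factor; activations, KV cache
    and framework overhead are NOT modeled and can need far more memory.
    """
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    usable_bytes = total_mem_gb * 1e9 * usable_fraction
    return usable_bytes / bytes_per_param / 1e9


if __name__ == "__main__":
    total_gb = 8 * 141  # 1,128 GB across the baseboard
    for mode, bpp in BYTES_PER_PARAM.items():
        print(f"{mode:>24}: ~{max_params_billions(total_gb, bpp):.0f}B parameters")
```

The gap between the inference and training rows is the point of the sketch: optimizer state, not weights, usually dominates training memory.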

Why Choose the NVIDIA GPU Baseboard with 8x H200?

- Maximum Compute Density
  - Consolidates eight H200 GPUs into a single, scalable baseboard platform.

- Next-Level Memory & Bandwidth
  - HBM3e memory with ultra-fast access supports data-intensive and memory-bound applications.

- Optimized for Multi-GPU Scaling
  - NVLink and NVSwitch connect all eight GPUs on the baseboard for fast GPU-to-GPU communication, forming a building block for larger clusters.
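A common way to reason about multi-GPU scaling is the bandwidth term of a ring all-reduce, where each of N GPUs sends and receives 2(N − 1)/N times the payload. The sketch below applies that textbook model; the 450GB/s per-direction link rate is an assumption (half of the 900GB/s bidirectional NVLink figure NVIDIA quotes for H200), and the 7B-parameter gradient payload and the name `ring_allreduce_seconds` are hypothetical. Libraries such as NCCL may choose other algorithms on NVSwitch systems, so treat this only as an order-of-magnitude estimate.

```python
def ring_allreduce_seconds(payload_gb: float, gpus: int = 8,
                           link_gb_s: float = 450.0) -> float:
    """Bandwidth-only estimate for a ring all-reduce.

    Each GPU sends (and receives) 2 * (N - 1) / N * payload bytes.
    link_gb_s is an ASSUMED per-GPU, per-direction bandwidth; latency,
    protocol overhead and in-switch reduction are ignored.
    """
    if gpus < 2:
        return 0.0
    bytes_per_gpu = 2 * (gpus - 1) / gpus * payload_gb * 1e9
    return bytes_per_gpu / (link_gb_s * 1e9)


if __name__ == "__main__":
    # Hypothetical example: BF16 gradients of a 7B-parameter model (2 bytes each).
    grads_gb = 7e9 * 2 / 1e9
    t = ring_allreduce_seconds(grads_gb)
    print(f"All-reduce of {grads_gb:.0f} GB across 8 GPUs: ~{t * 1e3:.0f} ms")
```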

| Specification | Details |
|---|---|
| GPU Model | 8x NVIDIA H200 (Hopper Architecture) |
| Memory (Per GPU) | 141GB HBM3e |
| Total Memory | ~1.13TB HBM3e |
| Memory Bandwidth | 4.8TB/s per GPU (~38.4TB/s aggregate) |
| Tensor Core Support | 4th Gen Tensor Cores with FP8, BF16, FP16, TF32 |
| Interconnect | NVLink 4.0, NVSwitch enabled |
| PCIe Interface | PCIe Gen5 |
| Power Requirement | ~7,000W |
| Display Support | None (headless data center GPUs, no display outputs) |
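For facility planning, the ~7,000W power figure translates directly into electrical load and daily energy. The sketch below assumes sustained full draw and excludes host CPUs, networking and cooling; the function name `energy_estimate` is hypothetical. The usage line applies it to the 5-unit minimum order.

```python
def energy_estimate(power_w: float = 7000.0, units: int = 1,
                    hours: float = 24.0) -> dict:
    """Energy for `units` baseboards drawing `power_w` continuously for `hours`.

    Assumes sustained full draw; host CPUs, networking and cooling are excluded.
    """
    total_kw = power_w * units / 1000
    return {"total_kw": total_kw, "energy_kwh": total_kw * hours}


if __name__ == "__main__":
    # Minimum order on this page is 5 units.
    e = energy_estimate(units=5)
    print(f"{e['total_kw']:.0f} kW load, ~{e['energy_kwh']:.0f} kWh per day")
```

Actual draw varies with workload, so measured consumption is usually below this upper-bound estimate.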