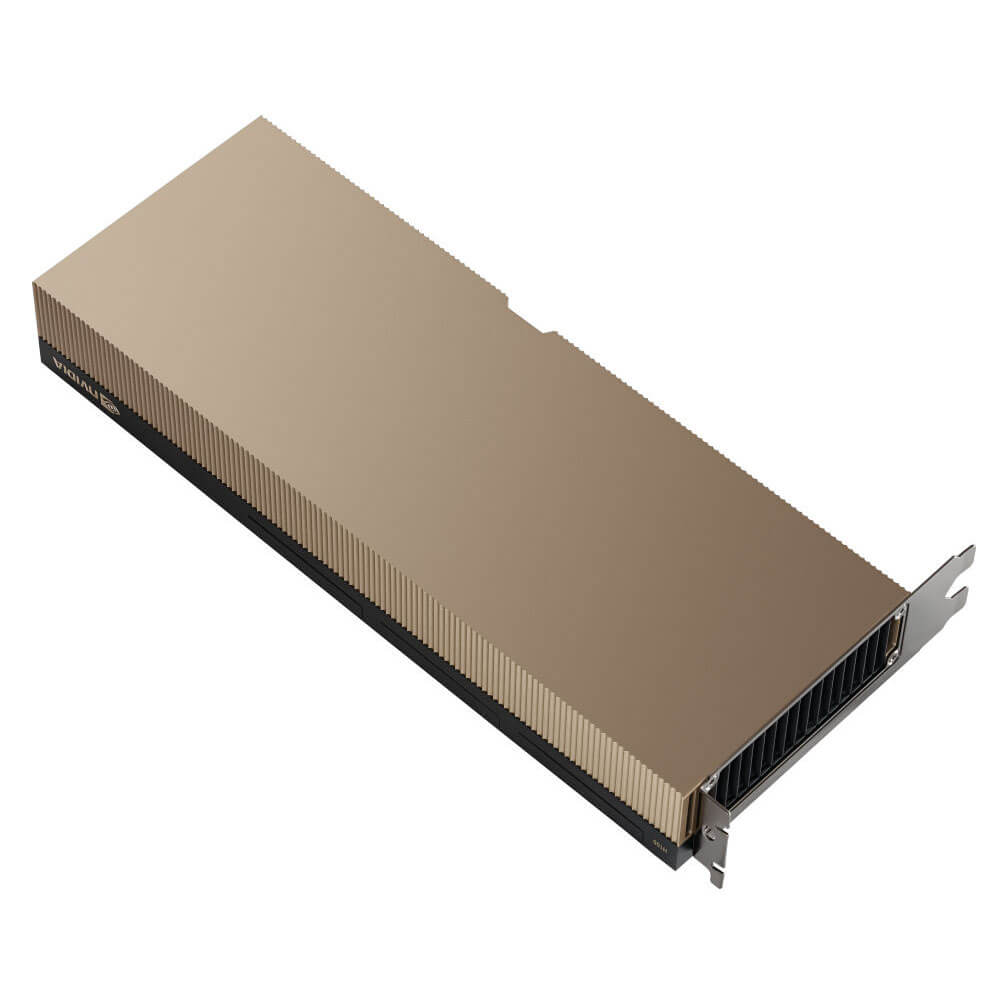

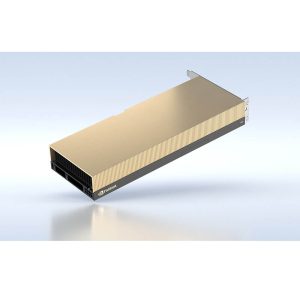

NVIDIA H100 PCIe Graphics Card 96 GB

The NVIDIA H100 PCIe Graphics Card with 96 GB of memory is a powerhouse designed for AI, HPC, and data analytics. Built on the NVIDIA Hopper architecture, it features fourth-generation Tensor Cores, Multi-Instance GPU (MIG) technology, and FP8 precision support for highly efficient AI training and inference. Designed for data centers, the H100 delivers exceptional scalability, performance, and energy efficiency.

Min. Quantity – 5 Nos

Note: The prices below are approximate and promotional. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹150,000 ₹290,000

The H100 PCIe 96 GB is a professional graphics card by NVIDIA, launched on March 21st, 2023. It is built on a 5 nm process and based on the GH100 graphics processor. The card does not support DirectX 11 or DirectX 12, so it is not intended for gaming. The GH100 is a large chip, with a die area of 814 mm² and 80 billion transistors. This card has 16,896 shading units, 528 texture mapping units, and 24 ROPs, plus 528 Tensor Cores that accelerate machine learning applications. NVIDIA pairs 96 GB of HBM3 memory with the H100 PCIe 96 GB over a 5120-bit memory interface. The GPU runs at a base clock of 1665 MHz and boosts up to 1837 MHz; the memory runs at 1313 MHz.
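
The shader, Tensor Core, and bandwidth figures above are related by simple arithmetic. The sketch below reproduces them under assumptions this page does not state: 132 enabled streaming multiprocessors (SMs) out of the full GH100 die's 144, Hopper's per-SM layout of 128 FP32 CUDA cores, 4 Tensor Cores, and 4 texture units, and an effective HBM3 data rate of four transfers per listed memory clock (the factor that reconciles 1313 MHz with the 3.36 TB/s in the specification table).

```python
# Assumed Hopper per-SM layout (not stated on this page).
FP32_CORES_PER_SM = 128
TENSOR_CORES_PER_SM = 4
TMUS_PER_SM = 4

SMS_ENABLED = 132   # assumed SM count for this card
SMS_FULL_DIE = 144  # full GH100 die, for comparison

shading_units = SMS_ENABLED * FP32_CORES_PER_SM
tensor_cores = SMS_ENABLED * TENSOR_CORES_PER_SM
tmus = SMS_ENABLED * TMUS_PER_SM
full_die_cuda_cores = SMS_FULL_DIE * FP32_CORES_PER_SM

# Bandwidth = bus width in bytes x memory clock x transfers per clock.
BUS_WIDTH_BITS = 5120
MEMORY_CLOCK_HZ = 1313e6
TRANSFERS_PER_CLOCK = 4  # assumed effective HBM3 multiplier

bandwidth_bytes_per_s = (BUS_WIDTH_BITS / 8) * MEMORY_CLOCK_HZ * TRANSFERS_PER_CLOCK

print(shading_units, tensor_cores, tmus, full_die_cuda_cores)  # 16896 528 528 18432
print(f"{bandwidth_bytes_per_s / 1e12:.2f} TB/s")              # 3.36 TB/s
```

The full-die figure of 18,432 CUDA cores is often quoted for the GH100 chip itself rather than for this card.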

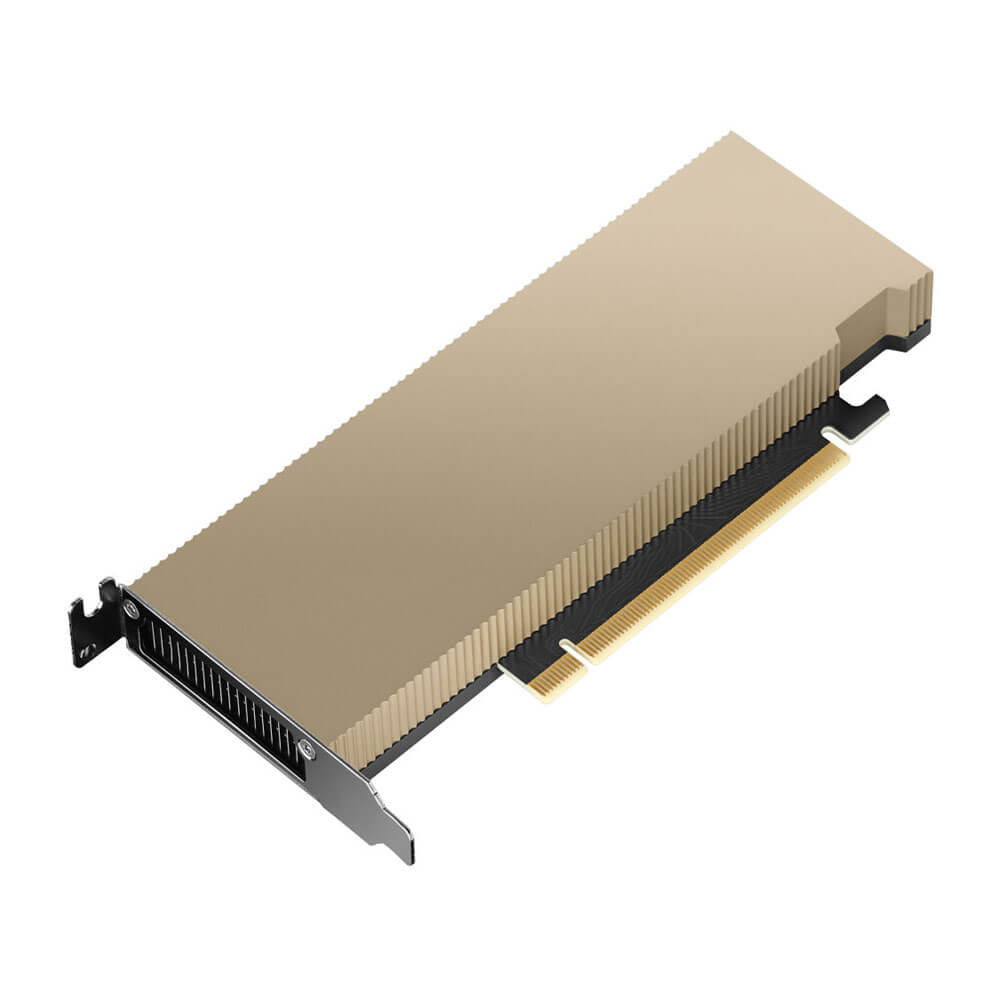

The NVIDIA H100 PCIe 96 GB draws power from an 8-pin EPS power connector, with power draw rated at 700 W maximum. It has no display outputs, as it is not designed to drive monitors. The card connects to the rest of the system through a PCI-Express 5.0 x16 interface. It measures 268 mm in length and 111 mm in width and uses a dual-slot cooling solution.
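
For a sense of scale, the PCIe 5.0 x16 host link can be compared with the card's on-board memory. This is a back-of-the-envelope sketch assuming PCIe 5.0's 32 GT/s per lane and 128b/130b line encoding; packet and protocol overhead is ignored, so real transfers are slower. The 3.36 TB/s HBM3 figure comes from the specification table.

```python
LANE_RATE_TRANSFERS_PER_S = 32e9   # PCIe 5.0: 32 GT/s per lane
LANES = 16                         # x16 slot
ENCODING_EFFICIENCY = 128 / 130    # 128b/130b line encoding

HBM_BYTES_PER_S = 3.36e12  # from the specification table
CARD_MEMORY_BYTES = 96e9   # 96 GB, treated as decimal gigabytes

# Each transfer moves one bit per lane; divide by 8 to get bytes.
pcie_bytes_per_s = LANE_RATE_TRANSFERS_PER_S * LANES * ENCODING_EFFICIENCY / 8

print(f"PCIe 5.0 x16: ~{pcie_bytes_per_s / 1e9:.1f} GB/s per direction")    # ~63.0
print(f"Minimum time to fill 96 GB: {CARD_MEMORY_BYTES / pcie_bytes_per_s:.2f} s")  # 1.52
print(f"HBM3 vs PCIe: ~{HBM_BYTES_PER_S / pcie_bytes_per_s:.0f}x")            # ~53x
```

The gap of roughly 50x is why data is usually staged on the card once and reused, rather than streamed over PCIe for every operation.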

Key Features:

- Hopper Architecture for Advanced Workloads
  - CUDA Cores: The H100 PCIe 96 GB has 16,896 CUDA cores (the full GH100 die contains 18,432), providing massive parallel processing power for compute-intensive tasks such as AI model training, simulations, and data analytics.
  - 4th-Generation Tensor Cores: Deliver up to 6x faster AI performance than the previous generation, with support for mixed-precision formats such as FP8, FP16, BFLOAT16, and INT8 that accelerate AI and ML workflows.
  - Transformer Engine: Built for transformer-based AI models, it dynamically chooses between FP8 and 16-bit calculations, significantly improving performance for natural language processing (NLP), generative AI, and recommendation systems.

- 96 GB HBM3 Memory
  - Massive Memory Capacity: The 96 GB of HBM3 memory accommodates large datasets, AI models, and memory-intensive workloads such as 3D simulations, data analytics, and video processing.
  - Industry-Leading Bandwidth: With about 3.36 TB/s of memory bandwidth, the H100 provides rapid data access for high-resolution simulations and real-time processing.

- Enterprise-Grade Scalability
  - NVLink and NVSwitch Support: Connects multiple H100 GPUs for scalable compute power and unified memory access, making it ideal for large-scale AI training and HPC clusters.
  - Multi-Instance GPU (MIG): Splits the H100 into up to 7 secure GPU instances, allowing multiple users or tasks to run concurrently with isolated resources.

- Advanced AI Acceleration
  - NVIDIA AI Enterprise Software Suite: Provides optimized tools for AI training, inference, and deployment, with seamless integration into popular frameworks such as TensorFlow, PyTorch, and ONNX.
  - TensorRT Optimization: Enhances real-time AI inference with lower latency and higher throughput.

- Energy Efficiency
  - Optimized Power Efficiency: Delivers industry-leading performance per watt, reducing energy costs while maintaining peak output.
  - Passive Cooling Design: Engineered for rack-mounted servers and dense data center deployments, where the server's fans supply the airflow, ensuring reliable operation under sustained workloads.

- High-Speed Connectivity
  - PCIe 5.0 Interface: Provides high-speed data transfer for bandwidth-intensive tasks, ensuring low latency and optimal performance.
  - Support for 8-way GPU Configurations: Enables massive parallel computing power for advanced applications.
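
Whether a model fits in the 96 GB depends heavily on the precision used. The helpers below are a hypothetical sketch that counts only the weights (parameter count times bytes per parameter, in decimal gigabytes); activations, optimizer state, and KV cache are ignored, so real requirements are higher. The 70-billion-parameter model is an illustrative size, not a specific product.

```python
# Bytes per parameter for common precisions (weights only).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1, "int8": 1}
CARD_MEMORY_GB = 96  # decimal gigabytes, as listed for this card


def weights_gb(num_params: float, dtype: str) -> float:
    """Hypothetical helper: size of the weights in GB at the given precision."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_card(num_params: float, dtype: str) -> bool:
    """Hypothetical helper: True if the weights alone fit in card memory."""
    return weights_gb(num_params, dtype) <= CARD_MEMORY_GB


# Example: a hypothetical 70-billion-parameter model.
for dtype in ("fp16", "fp8"):
    size = weights_gb(70e9, dtype)
    print(f"{dtype}: {size:.0f} GB, fits: {fits_on_card(70e9, dtype)}")
# fp16: 140 GB, fits: False
# fp8: 70 GB, fits: True
```

In this estimate, halving the bytes per parameter from FP16 to FP8 is what brings such a model under the card's capacity.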

Applications:

- Artificial Intelligence and Machine Learning
  - AI Training: Handles large-scale training for models such as GPT, BERT, and generative adversarial networks (GANs) at high speed.
  - AI Inference: Powers real-time inference for applications such as recommendation systems, speech recognition, and autonomous systems.
  - Generative AI: Accelerates models that create text, images, and other media in real time.

- High-Performance Computing (HPC)
  - Scientific Simulations: Speeds up simulations in climate science, genomics, astrophysics, and materials engineering.
  - Numerical Analysis: Optimized for precision and performance in engineering and scientific research.

- Data Analytics and Visualization
  - Big Data Processing: Efficiently analyzes massive datasets, driving insights in industries like finance, healthcare, and retail.
  - Data Visualization: Powers high-resolution visualizations for engineering, architecture, and scientific research.

- Media and Entertainment
  - 3D Rendering and VFX: Accelerates GPU-based rendering and simulation for animation and VFX production.
  - High-Resolution Video Processing: Handles 8K video editing, transcoding, and live streaming workflows.

- Cloud and Virtualization
  - Virtualized Workloads: Enhances GPU-accelerated virtual desktop infrastructure (VDI) for creative and engineering applications.
  - AI as a Service: Scales AI applications in cloud platforms, enabling real-time AI-powered solutions.
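
For real-time inference with large language models, memory bandwidth often sets the ceiling. A common rule of thumb, sketched here as an assumption rather than a benchmark, is that single-stream (batch size 1) decoding reads every weight once per generated token, so tokens per second cannot exceed bandwidth divided by weight size. The bandwidth is taken from the specification table; the model size is hypothetical, and KV-cache reads and kernel overheads are ignored, so measured speeds will be lower.

```python
HBM_BANDWIDTH_BYTES_PER_S = 3.36e12  # from the specification table


def max_tokens_per_second(weight_bytes: float) -> float:
    """Hypothetical upper bound for batch-1 decoding, assuming the step is
    limited only by reading all weights from HBM once per token."""
    return HBM_BANDWIDTH_BYTES_PER_S / weight_bytes


# Hypothetical 70B-parameter model stored in FP8 (1 byte per parameter).
print(f"{max_tokens_per_second(70e9):.0f} tokens/s upper bound")  # 48 tokens/s upper bound
```

Larger batches reuse each weight read across several requests, which is why throughput-oriented serving batches requests when latency budgets allow.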

Why Choose the NVIDIA H100 PCIe Graphics Card 96 GB?

- Industry-Leading AI Performance
  - With 4th-gen Tensor Cores, the Transformer Engine, and 96 GB of HBM3 memory, the H100 offers unmatched performance for AI and ML workloads.

- Massive Memory Bandwidth
  - About 3.36 TB/s of memory bandwidth provides fast, efficient data access for the most demanding applications, including AI training and HPC simulations.

- Scalability for Enterprise Environments
  - Supports NVLink and NVSwitch for multi-GPU configurations, making it ideal for scaling compute power and memory across data centers.

- Energy Efficiency
  - Optimized for performance per watt, reducing operational costs for enterprises and data centers while delivering exceptional output.

- Enterprise-Grade Reliability
  - Backed by NVIDIA’s certified drivers and software suite, ensuring stability, compatibility, and long-term support for critical applications.

- Versatile Applications
  - The H100 excels across industries, from AI research and HPC to media production and big data analytics, making it a highly versatile GPU for modern workloads.

| Specification | Value |
|---|---|
| Product Name | NVIDIA H100 PCIe 96 GB |
| Manufacturer | NVIDIA |
| Memory | 96 GB HBM3 |
| Memory Bus | 5120-bit |
| Bandwidth | 3.36 TB/s |
| Base Clock | 1665 MHz |
| Boost Clock | 1837 MHz |
| TDP | 700 W |
| Suggested PSU | 1100 W |
| Power Connectors | 8-pin EPS |
| Bus Interface | PCIe 5.0 x16 |
| Max Displays | No physical outputs; 4 via virtual GPU features |
| Dimensions | 10.5 in (268 mm) L x 4.4 in (111 mm) H, dual-slot |
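
To check that an installed card matches the specification table, the values can be read back from the driver. The sketch below assumes the NVIDIA driver's `nvidia-smi` tool is installed and uses its `--query-gpu` CSV output; `query_gpus` and `parse_rows` are hypothetical helper names, and the query fields should be confirmed with `nvidia-smi --help-query-gpu` on your driver version.

```python
from __future__ import annotations

import subprocess

# Query fields listed by `nvidia-smi --help-query-gpu`; verify on your driver.
FIELDS = (
    "name",
    "memory.total",         # MiB when `nounits` is used
    "power.limit",          # W
    "clocks.max.sm",        # MHz
    "clocks.max.memory",    # MHz
    "pcie.link.gen.max",
    "pcie.link.width.max",
)


def parse_rows(csv_text: str) -> list[dict[str, str]]:
    """Hypothetical helper: turn `csv,noheader,nounits` output into one dict per GPU."""
    rows = []
    for line in csv_text.strip().splitlines():
        values = [value.strip() for value in line.split(",")]
        rows.append(dict(zip(FIELDS, values)))
    return rows


def query_gpus() -> list[dict[str, str]]:
    """Hypothetical helper: run nvidia-smi and parse its output (needs the NVIDIA driver)."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS),
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_rows(result.stdout)
```

On a host with the card installed, `query_gpus()` returns one dictionary per GPU. Note that `pcie.link.gen.max` and `pcie.link.width.max` reflect both the card and the host slot, so a PCIe 4.0 server will report a lower generation than the table's PCIe 5.0 x16.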