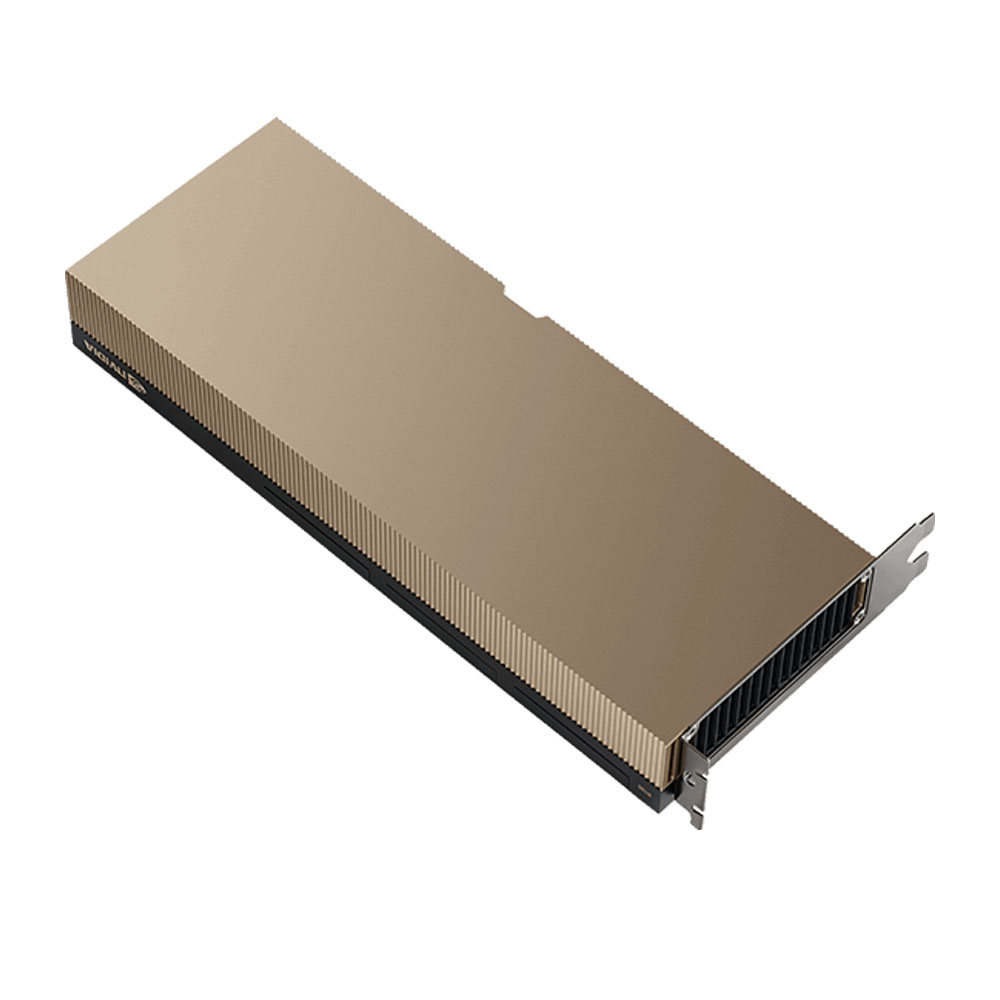

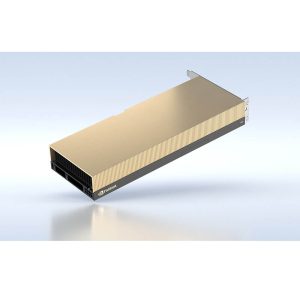

NVIDIA H200 NVL

The NVIDIA H200 NVL is a dual-GPU, data center–class solution built on the Hopper architecture and optimized for AI, high-performance computing, and generative AI workloads. With 141GB of HBM3e memory per GPU (282GB total) and 4.8TB/s of memory bandwidth per GPU, it is engineered to handle massive datasets, large language models, and advanced simulations. NVLink connectivity delivering 900GB/s of GPU-to-GPU bandwidth and integrated Transformer Engine technology give the H200 NVL the scalability, speed, and efficiency needed for next-generation AI training, inference, and scientific computing at enterprise and hyperscale levels.

NVIDIA H200 NVL (SKU: NVH200NVLTCGPU-KIT-1)

Minimum order quantity: 5 units

Note: The prices below are approximate promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

Promotional price: ₹659,999 (regular price: ₹900,000)

The NVIDIA H200 NVL is a cutting-edge, high-performance GPU platform designed to push the limits of AI, high-performance computing (HPC), and large-scale data analytics. Built on the Hopper architecture, the H200 NVL is optimized for large-scale AI model training, generative AI workloads, and accelerated scientific simulations. It features 141GB of HBM3e memory per GPU and is delivered in a dual-GPU NVLink configuration, providing an aggregate of 282GB of high-bandwidth memory. This capacity, combined with 4.8TB/s of memory bandwidth per GPU, lets it handle very large datasets and complex neural network models while minimizing memory bottlenecks.
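To put the 282GB aggregate figure in context, here is a short Python sketch that estimates whether a model's weights fit in the kit's combined memory. It assumes decimal gigabytes (1GB = 10^9 bytes), counts only the raw weights (no KV cache, activations, or framework overhead), and uses hypothetical helpers `weights_gb()` and `fits()`. Real deployments need considerably more headroom, so treat it as a rough sizing aid, not a vendor tool.

```python
# Back-of-envelope check: do a model's weights fit in the H200 NVL kit's memory?
# Assumptions (illustrative only): weights only, decimal GB (1 GB = 1e9 bytes),
# no allowance for KV cache, activations, or framework overhead.

HBM_PER_GPU_GB = 141  # per-GPU capacity quoted in the listing
GPUS_IN_KIT = 2       # dual-GPU NVLink configuration described in the listing

# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "fp8": 1}


def weights_gb(num_params: float, dtype: str) -> float:
    """Memory needed for the raw weights, in decimal GB (hypothetical helper)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits(num_params: float, dtype: str, gpus: int = GPUS_IN_KIT) -> bool:
    """True if the weights alone fit in the aggregate HBM of `gpus` GPUs."""
    return weights_gb(num_params, dtype) <= HBM_PER_GPU_GB * gpus


if __name__ == "__main__":
    print(f"Aggregate HBM: {HBM_PER_GPU_GB * GPUS_IN_KIT} GB")
    for params, dtype in [(70e9, "fp16"), (175e9, "fp16"), (175e9, "fp8")]:
        need = weights_gb(params, dtype)
        print(f"{params / 1e9:.0f}B params @ {dtype}: {need:.0f} GB, fits: {fits(params, dtype)}")
```

For example, a 70-billion-parameter model stored at FP16 (2 bytes per parameter) needs about 140GB for its weights alone, which fits within 282GB. A 175-billion-parameter model at FP16 (about 350GB) does not fit, while the same model at FP8 (about 175GB) does.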

Designed for scalability, the NVIDIA H200 NVL uses NVLink to provide 900GB/s of GPU-to-GPU bandwidth, allowing the linked GPUs to exchange data fast enough to work together on a single large model. Its Transformer Engine accelerates large language model (LLM) workloads, boosting performance for generative AI applications such as ChatGPT-style chatbots, code generation, and multimodal AI. The H200 NVL is also designed for energy efficiency and to fit into modern data center infrastructure while delivering high throughput. Whether deployed in enterprise AI research labs, supercomputing environments, or hyperscale data centers, the NVIDIA H200 NVL is built for next-generation AI and HPC workloads.
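For a rough sense of scale for the 900GB/s NVLink figure, this Python sketch computes the idealized time to move one GPU's full 141GB of memory over NVLink and compares it with reading the same data from local HBM at 4.8TB/s. It assumes the quoted peak rates are fully usable in one direction with zero overhead. NVLink bandwidth figures are commonly quoted as aggregate bidirectional totals, so real one-way transfers will be slower. The helper `transfer_seconds()` is hypothetical.

```python
# Idealized transfer-time estimate at a given link bandwidth.
# Assumption: the link sustains its quoted peak rate in one direction with no
# protocol overhead, which real transfers will not achieve; results are lower bounds.

NVLINK_GB_PER_S = 900  # GPU-to-GPU bandwidth quoted in the listing
HBM_GB_PER_S = 4.8e3   # 4.8 TB/s per-GPU memory bandwidth, expressed in GB/s


def transfer_seconds(size_gb: float, bandwidth_gb_per_s: float) -> float:
    """Lower-bound time to move `size_gb` gigabytes at `bandwidth_gb_per_s`."""
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return size_gb / bandwidth_gb_per_s


if __name__ == "__main__":
    size = 141  # one GPU's full HBM capacity, in GB
    nvlink_ms = transfer_seconds(size, NVLINK_GB_PER_S) * 1e3
    hbm_ms = transfer_seconds(size, HBM_GB_PER_S) * 1e3
    print(f"{size} GB over NVLink: {nvlink_ms:.0f} ms")
    print(f"{size} GB read from local HBM: {hbm_ms:.0f} ms")
```

Under these assumptions the NVLink transfer takes roughly 157 ms and the local HBM read about 29 ms, which illustrates why keeping data resident in each GPU's own memory still matters even with a fast interconnect.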

NVIDIA H200 NVL, H200 NVL GPU, NVIDIA Hopper H200 NVL, H200 NVL AI GPU, H200 NVL HPC GPU, H200 NVL data center GPU, H200 NVL server GPU, H200 NVL dual GPU, H200 NVL 282GB HBM3e