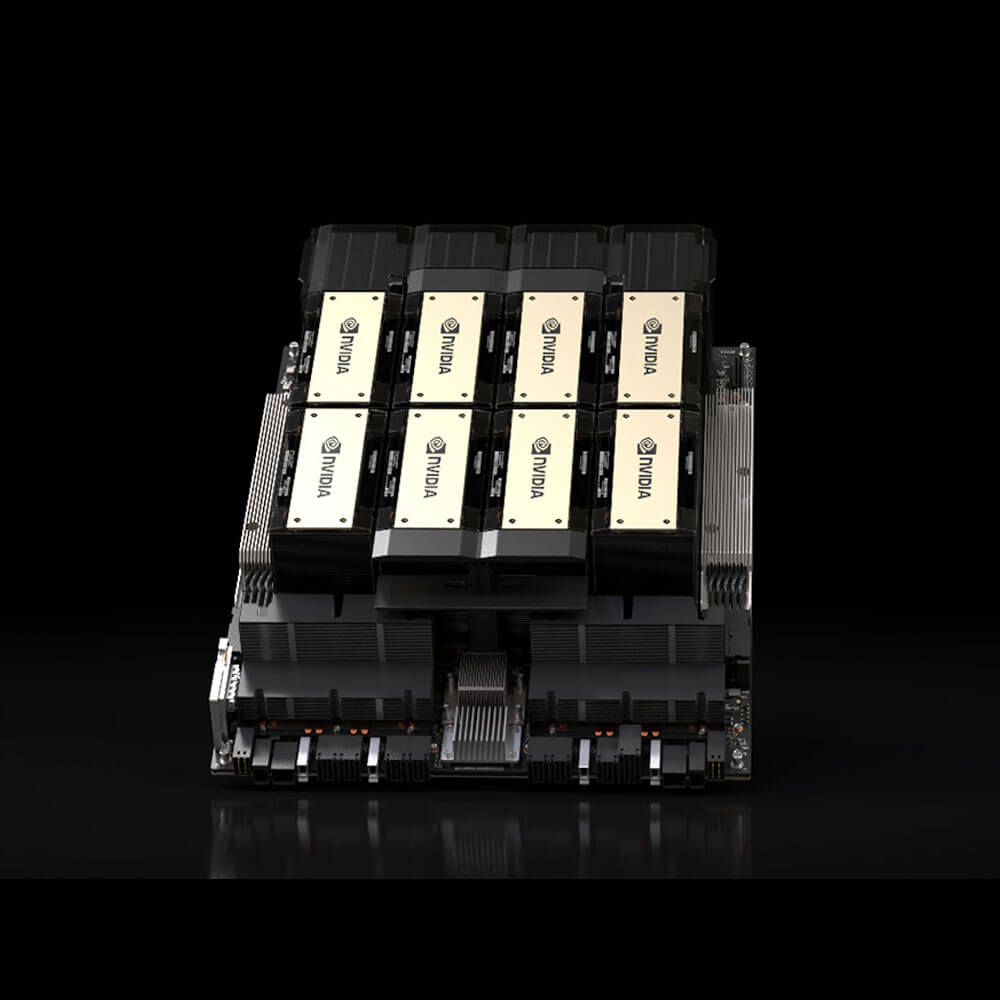

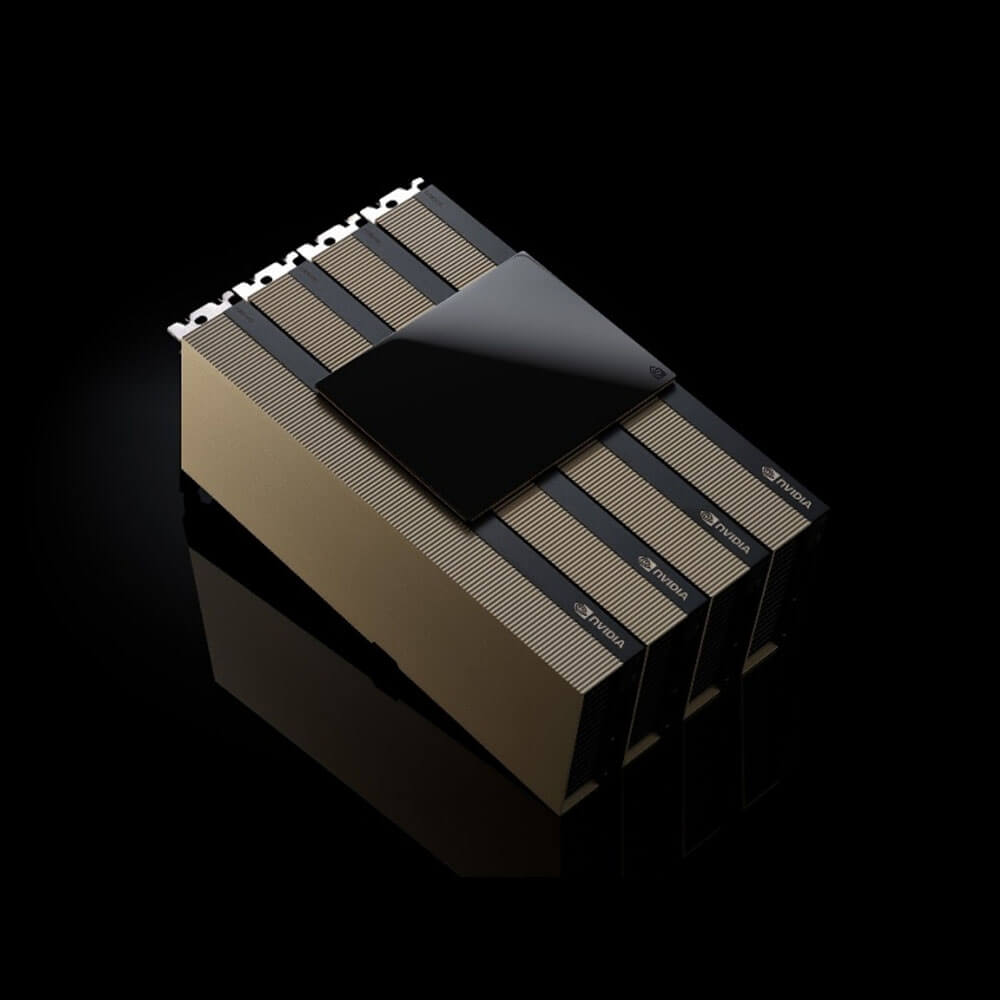

NVIDIA H200 Tensor Core Graphics Card 141 GB

The NVIDIA H200 Tensor Core Graphics Card with 141GB memory is a cutting-edge solution for AI, HPC, and advanced data analytics. Powered by the NVIDIA Hopper architecture, it features Tensor Cores, multi-instance GPU (MIG) technology, and FP8 precision for unparalleled efficiency. Ideal for data centers and enterprise applications, the H200 delivers exceptional scalability, energy efficiency, and groundbreaking performance for demanding workloads.

Min. Quantity – 5 Nos

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹109,999 ₹198,888

The NVIDIA H200 Tensor Core Graphics Card 141 GB is a state-of-the-art GPU engineered to deliver exceptional performance for artificial intelligence (AI), machine learning (ML), high-performance computing (HPC), and data analytics. Built on the NVIDIA Hopper architecture, the H200 Tensor Core GPU provides groundbreaking acceleration for complex workloads, making it an ideal solution for enterprise, scientific, and data-driven environments.

With 141 GB of HBM3e memory, 4th generation Tensor Cores, and advanced interconnect technologies, the H200 sets a new standard for scalability, efficiency, and power in AI model training, inference, and large-scale simulations.

Key Features:

- Hopper Architecture
  - 4th Generation Tensor Cores: Deliver high throughput with FP8 precision and Transformer Engine acceleration, significantly speeding up AI model training and inference.
  - Multi-Instance GPU (MIG): Allows the GPU to be partitioned into multiple isolated instances, optimizing resource utilization for diverse workloads.
  - Dynamic Programming Acceleration: DPX instructions enhance performance in HPC tasks, particularly in genomics, route optimization, and logistics.

- 141 GB HBM3e Memory
  - High-Capacity Memory: The 141 GB of HBM3e memory provides the capacity required for large datasets and complex AI models.
  - Ultra-High Bandwidth: Offers up to 4.8 TB/s of memory bandwidth, sustaining performance in data-intensive applications.
  - Error-Correcting Code (ECC): Maintains data integrity and reliability, making it suitable for mission-critical workloads.

- NVLink and NVSwitch Interconnect
  - High-Speed Connectivity: Supports 4th Generation NVLink, enabling multi-GPU communication with up to 900 GB/s of bandwidth.
  - Scalability: NVSwitch technology allows for scaling across multiple GPUs, creating massive computational power for large-scale AI and HPC workloads.

- Enhanced AI Performance
  - Transformer Engine: Optimized for large language models (LLMs), generative AI, and Transformer-based architectures, enabling up to 6x speedups compared to traditional GPUs.
  - FP8 Precision Support: Achieves higher performance and efficiency with support for lower-precision formats without sacrificing accuracy.

- Energy Efficiency and Advanced Cooling
  - Optimized Power Usage: Operates with a focus on maximizing performance-per-watt efficiency.
  - Advanced Cooling: Designed for data center environments with support for liquid and air cooling options, ensuring stability during intensive workloads.

- NVIDIA AI and CUDA Ecosystem
  - NVIDIA AI Stack: Fully compatible with NVIDIA’s AI frameworks, including NVIDIA AI Enterprise, ensuring seamless deployment of AI workflows.
  - CUDA Programming: Supports the latest CUDA Toolkit, enabling developers to build high-performance applications across a wide range of domains.
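
To make the memory and FP8 points above concrete, here is a minimal Python sketch that estimates how much space a model's weights occupy in different numeric formats and whether they fit in 141 GB. The 70-billion-parameter model is a hypothetical example, the function names are illustrative, and the estimate counts weights only (it ignores activations, KV cache, and framework overhead), so treat it as a rough sizing aid rather than a deployment guarantee.

```python
"""Rough check of whether a model's weights fit in 141 GB of GPU memory."""

GPU_MEMORY_GB = 141.0  # capacity quoted on this page, in decimal gigabytes

# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "fp8": 1}


def weight_footprint_gb(num_params: float, dtype: str) -> float:
    """Return the size of the weights alone, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def fits_on_gpu(num_params: float, dtype: str,
                gpu_memory_gb: float = GPU_MEMORY_GB) -> bool:
    """True if the weights alone fit; real workloads need extra headroom."""
    return weight_footprint_gb(num_params, dtype) <= gpu_memory_gb


if __name__ == "__main__":
    params = 70e9  # hypothetical 70-billion-parameter model (assumption)
    for dtype in BYTES_PER_PARAM:
        size = weight_footprint_gb(params, dtype)
        verdict = "fits" if fits_on_gpu(params, dtype) else "does not fit"
        print(f"{dtype}: {size:.0f} GB of weights, {verdict} in "
              f"{GPU_MEMORY_GB:.0f} GB")
```

Halving the bytes per parameter halves the weight footprint, which is why lower-precision formats such as FP8 let a larger model sit on a single card.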

Applications:

- Artificial Intelligence and Machine Learning
  - AI Model Training: Accelerates training of large-scale neural networks, including LLMs, generative AI, and deep learning applications.
  - AI Inference: Speeds up inferencing for real-time AI applications like recommendation systems, image recognition, and speech processing.
  - Autonomous Systems: Powers AI models for robotics, autonomous vehicles, and smart city solutions.

- High-Performance Computing (HPC)
  - Scientific Simulations: Excels in climate modeling, genomics research, fluid dynamics, and astrophysics simulations.
  - Data Analytics: Handles massive datasets for financial modeling, research, and predictive analytics.
  - Energy Exploration: Accelerates simulations in oil and gas exploration and renewable energy optimization.

- Generative AI
  - Large Language Models (LLMs): Optimized for training and deployment of GPT-like models and Transformer architectures.
  - Content Generation: Powers generative AI applications for media, entertainment, and marketing automation.

- Virtualization and Data Centers
  - Cloud AI Services: Provides the computational backbone for AI-as-a-Service platforms.
  - MIG Technology: Enables efficient multi-tenant environments, allowing multiple users to share GPU resources securely and effectively.

- Professional Visualization
  - Design and Simulation: Powers CAD, CFD, and simulation workflows with real-time ray tracing and AI-enhanced rendering.
  - Media Production: Accelerates video rendering, post-production, and VFX workflows.

Why Choose the NVIDIA H200 Tensor Core Graphics Card 141 GB?

- Unmatched AI Performance: Optimized for AI model training and inference, with Transformer Engine acceleration and support for FP8 precision.

- Massive Memory Capacity: The 141 GB of HBM3e memory ensures smooth handling of large datasets, making it ideal for AI, HPC, and data analytics.

- Advanced Interconnect Technologies: 4th Generation NVLink and NVSwitch enable seamless scaling for multi-GPU deployments, offering superior performance in distributed workloads.

- Future-Proof Architecture: Built on NVIDIA’s Hopper architecture, ensuring compatibility with cutting-edge AI frameworks and tools.

- Scalable and Versatile: MIG support and compatibility with virtualization technologies make it suitable for cloud-based AI and enterprise environments.

- Energy Efficiency and Reliability: Designed for data centers, the H200 balances high performance with energy-efficient operation and robust thermal management.

- Seamless Ecosystem Integration: Fully integrates with NVIDIA’s CUDA, AI Enterprise, and other software stacks, providing a comprehensive solution for developers and enterprises.
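
As a rough illustration of what the interconnect figures mean in practice, the Python sketch below estimates the ideal time to move a block of data between GPUs at the 900 GB/s NVLink rate quoted under Key Features. The PCIe Gen 5 x16 figure of about 64 GB/s per direction, the `efficiency` parameter, and the function name are assumptions added for comparison; quoted bandwidths are peak values, so real transfers will be slower.

```python
"""Ideal transfer-time estimate: time = data size / effective bandwidth."""

NVLINK_GB_PER_S = 900.0        # peak NVLink figure quoted on this page
PCIE_GEN5_X16_GB_PER_S = 64.0  # assumption: approx. one-direction PCIe 5.0 x16


def transfer_time_s(size_gb: float, bandwidth_gb_per_s: float,
                    efficiency: float = 1.0) -> float:
    """Return seconds needed to move size_gb at the given bandwidth.

    efficiency (0 < efficiency <= 1) is a hypothetical knob for the share
    of peak bandwidth actually achieved.
    """
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return size_gb / (bandwidth_gb_per_s * efficiency)


if __name__ == "__main__":
    payload_gb = 70.0  # hypothetical payload, e.g. FP8 weights of a 70B model
    for name, bw in (("NVLink", NVLINK_GB_PER_S),
                     ("PCIe Gen 5 x16", PCIE_GEN5_X16_GB_PER_S)):
        seconds = transfer_time_s(payload_gb, bw)
        print(f"{name}: {seconds * 1000:.0f} ms for {payload_gb:.0f} GB")
```

The comparison shows why multi-GPU training and inference setups favor NVLink over the PCIe bus for GPU-to-GPU traffic.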

| Specification | Details |
| --- | --- |
| Product Name | NVIDIA H200 Tensor Core |
| Manufacturer | NVIDIA |
| Memory | 141 GB HBM3e |
| Max Power Consumption | Up to 600 W (configurable) for the PCIe-based H200 NVL |
| Memory Bandwidth | 4.8 TB/s |
| Form Factor | PCIe Gen 5 |