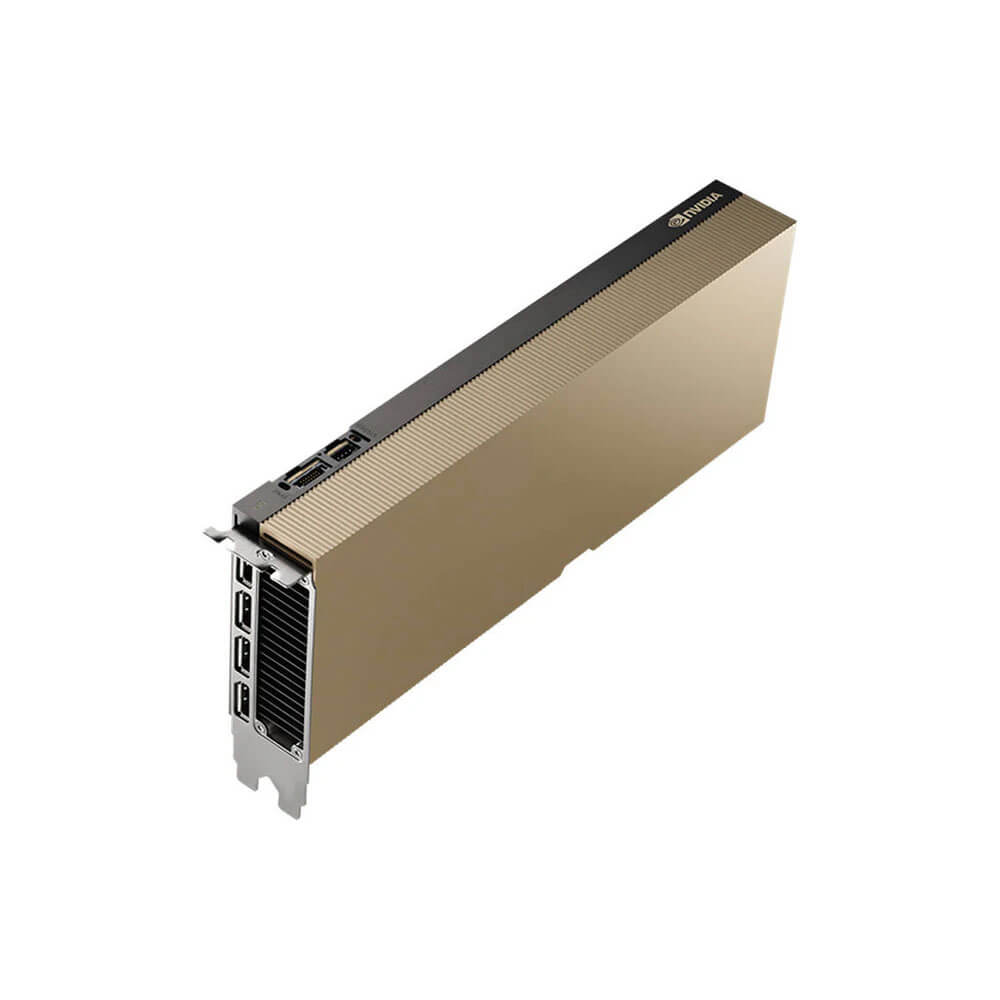

NVIDIA L40 CNX Graphics Card 24 GB

The NVIDIA L40 CNX Graphics Card with 24GB memory is tailored for AI, visualization, and data-intensive tasks. Powered by the NVIDIA Ada Lovelace architecture, it features advanced RT and Tensor Cores for real-time ray tracing and AI acceleration. Ideal for enterprise and cloud-based applications, the L40 CNX delivers exceptional performance, scalability, and efficiency for cutting-edge professional workflows.

Min. Quantity – 5 Nos

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹175,000 ₹205,000

The L40 CNX is a professional graphics card by NVIDIA, launched on October 13th, 2022. It is built on the 5 nm process and based on the AD102 graphics processor, in its AD102-???-A1 variant, and it supports DirectX 12 Ultimate. The AD102 is a large chip with a die area of 609 mm² and 76,300 million transistors. Unlike the fully unlocked TITAN Ada, which uses the same GPU with all 18,432 shaders enabled, the L40 CNX has some shading units disabled to reach the product’s target shader count. It features 18,176 shading units, 568 texture mapping units, and 192 ROPs, along with 568 tensor cores, which speed up machine learning applications, and 142 ray tracing acceleration cores. NVIDIA has paired the L40 CNX with 24 GB of GDDR6 memory, connected over a 384-bit memory interface. The GPU runs at a base frequency of 1005 MHz and can boost up to 2475 MHz; the memory runs at 2250 MHz (18 Gbps effective).
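The unit counts quoted above can be cross-checked against each other with a little arithmetic. The sketch below is only an illustration: it assumes 128 shading units per streaming multiprocessor (SM), a figure that is not stated in this listing, and it counts one fused multiply-add per shader per clock to estimate a theoretical FP32 peak at the boost clock. The function names are made up for this example.

```python
# Figures quoted in the description above.
SHADING_UNITS = 18176
FULL_AD102_SHADERS = 18432
TMUS = 568
TENSOR_CORES = 568
RT_CORES = 142
BOOST_CLOCK_MHZ = 2475

# Assumption (not stated in the listing): 128 shading units per Ada Lovelace SM.
SHADERS_PER_SM = 128


def sm_count(shaders: int, per_sm: int = SHADERS_PER_SM) -> int:
    """Return the number of SMs implied by a shader count."""
    if shaders % per_sm != 0:
        raise ValueError("shader count is not a whole number of SMs")
    return shaders // per_sm


def peak_fp32_tflops(shaders: int, clock_mhz: float) -> float:
    """Theoretical FP32 peak, counting 2 FLOPs (one fused multiply-add) per shader per clock."""
    # shaders * 2 * (clock_mhz * 1e6) FLOP/s, divided by 1e12 to get TFLOPS.
    return shaders * 2 * clock_mhz / 1e6


enabled_sms = sm_count(SHADING_UNITS)
full_sms = sm_count(FULL_AD102_SHADERS)
boost_tflops = peak_fp32_tflops(SHADING_UNITS, BOOST_CLOCK_MHZ)

print(f"SMs enabled: {enabled_sms} of {full_sms}")
print(f"Per SM: {TMUS // enabled_sms} TMUs, "
      f"{TENSOR_CORES // enabled_sms} tensor cores, "
      f"{RT_CORES // enabled_sms} RT core")
print(f"Theoretical FP32 peak at boost: {boost_tflops:.1f} TFLOPS")
```

Under these assumptions the quoted counts are self-consistent: the RT core count equals the implied SM count, and the TMU and tensor core counts are exact multiples of it. The throughput figure is a paper maximum; sustained performance depends on clocks, power limits, and workload.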

The NVIDIA L40 CNX draws power from one 16-pin power connector, with power draw rated at 300 W maximum. Display outputs include 1x HDMI 2.1 and 3x DisplayPort 1.4a. The card connects to the rest of the system over a PCI-Express 4.0 x16 interface. It measures 267 mm in length and 111 mm in width, and features a dual-slot cooling solution.
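To put the PCI-Express 4.0 x16 interface in context, the following sketch estimates the link's theoretical one-direction bandwidth and how long it would take to fill the card's 24 GB of memory from the host. The 16 GT/s per-lane rate and 128b/130b encoding are standard PCIe 4.0 figures rather than values from this listing, decimal gigabytes are assumed, and protocol overhead is ignored, so real transfers will be slower.

```python
# Assumptions (standard PCIe 4.0 figures, not taken from the listing).
PCIE4_GT_PER_S = 16.0              # raw signalling rate per lane
ENCODING_EFFICIENCY = 128 / 130    # 128b/130b line encoding
LANES = 16                         # x16 slot, as stated in the listing
VRAM_GB = 24                       # card memory, decimal gigabytes assumed


def link_bandwidth_gb_s(gt_per_s: float, lanes: int, efficiency: float) -> float:
    """Theoretical one-direction bandwidth in GB/s (8 bits per byte)."""
    return gt_per_s * efficiency * lanes / 8


def seconds_to_transfer(size_gb: float, bandwidth_gb_s: float) -> float:
    """Best-case time to move size_gb over a link of the given bandwidth."""
    return size_gb / bandwidth_gb_s


link_gb_s = link_bandwidth_gb_s(PCIE4_GT_PER_S, LANES, ENCODING_EFFICIENCY)
fill_seconds = seconds_to_transfer(VRAM_GB, link_gb_s)

print(f"PCIe 4.0 x16: {link_gb_s:.1f} GB/s per direction (theoretical)")
print(f"Best-case time to load {VRAM_GB} GB: {fill_seconds:.2f} s")
```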

Key Features:

- High-Performance Architecture
  - CUDA Cores: The L40 CNX has 18,176 CUDA cores (shading units), providing parallel processing capabilities for HPC tasks, data analytics pipelines, and 3D rendering.
  - Ray Tracing Cores (RT Cores): 142 third-generation RT Cores deliver hardware-accelerated ray tracing for photorealistic lighting, shadows, and reflections, essential for professional design, VFX, and real-time visualization.
  - Tensor Cores: 568 Ada Lovelace Tensor Cores handle mixed-precision computations (FP16, BF16, INT8), accelerating AI model training and inference, super-resolution, and AI-based image processing.

- 24GB of GDDR6 Memory
  - Large VRAM Capacity: 24GB of memory handles large 3D datasets, multiple 4K video streams, and large AI model parameters without frequent memory bottlenecks.
  - High-Speed Bandwidth: GDDR6 on a 384-bit interface delivers 864 GB/s of bandwidth, reducing overhead when reading or writing large volumes of data in HPC or AI workloads.

- AI & HPC Optimization
  - Mixed-Precision Performance: Uses Tensor Cores for faster training and real-time inference at lower precision, improving performance while maintaining adequate accuracy.
  - NVIDIA Software Stack: Supported by CUDA-X AI libraries, HPC frameworks, and containerized solutions (NGC), making it simpler to adopt the L40 CNX in HPC clusters or enterprise AI pipelines.

- Professional Visualization
  - Ray-Traced Rendering: RT Cores enable real-time path tracing for architecture, product design, or media production, drastically cutting iteration times.
  - VR & AR: Helps deliver immersive VR/AR applications with smooth frame rates and detailed graphics, useful for industrial training, design reviews, or simulation.

- Data Center–Ready Reliability
  - 24/7 Operation: Built for continuous workloads, with stable power delivery and advanced driver support, suitable for HPC labs or mission-critical enterprise setups.
  - PCI Express 4.0: Integrates into standard servers, HPC nodes, or high-end workstations; the PCIe 4.0 x16 interface reduces data transfer bottlenecks.

- Scalable Multi-GPU Configurations
  - Possible NVLink: Some L40 variants may allow GPU bridging, enabling multi-GPU memory pooling or near-linear scaling in HPC or AI tasks. Check NVIDIA's official documentation for L40 CNX specifics.
  - Balanced TDP: Power draw is rated at 300 W maximum, offering strong performance-per-watt for dense data center deployments.
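The memory and mixed-precision points above interact: the lower the precision, the more model parameters fit in 24 GB. The sketch below is a rough upper bound only. It counts weight storage alone, assumes decimal gigabytes, and ignores activations, optimizer state, and framework overhead, all of which reduce what fits in practice. The `usable_fraction` parameter is a hypothetical knob for reserving headroom.

```python
VRAM_GB = 24  # card memory from the listing, decimal gigabytes assumed

# Bytes needed to store one parameter at each precision named above.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "BF16": 2, "INT8": 1}


def max_params_billions(vram_gb: float, bytes_per_param: int,
                        usable_fraction: float = 1.0) -> float:
    """Upper bound on parameter count (in billions) for weights alone."""
    usable_bytes = vram_gb * 1e9 * usable_fraction
    return usable_bytes / bytes_per_param / 1e9


capacity = {name: max_params_billions(VRAM_GB, size)
            for name, size in BYTES_PER_PARAM.items()}

for name, billions in capacity.items():
    print(f"{name}: up to {billions:.0f}B parameters (weights only)")

# Leaving 20% headroom for activations and buffers (an arbitrary example value).
print(f"FP16 with 20% headroom: {max_params_billions(VRAM_GB, 2, 0.8):.1f}B")
```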

Applications:

- Artificial Intelligence & Deep Learning
  - Training & Inference: Tensor Cores accelerate neural network tasks (vision, NLP, speech), enabling faster model training and high-throughput production inference.
  - Data Analytics: GPU acceleration significantly shortens big data transformations, predictive modeling, or real-time analytics in HPC or enterprise AI contexts.
- High-Performance Computing (HPC)
  - Scientific Simulations: Suitable for large-scale simulations in physics, climate modeling, molecular dynamics, or engineering.
  - Research & Academia: Universities and government labs can shorten iteration cycles on complex HPC codes.
- Media & Professional Visualization
  - Ray-Traced Rendering: Real-time or near-real-time path tracing for design reviews, product prototyping, or cinematic-quality VFX.
  - Video Production: 4K/8K multi-stream editing, color grading, and compositing benefit from GPU-accelerated timelines and effects.
- Virtualization & VDI
  - Multi-User GPU: Partition the GPU via vGPU or containerization, enabling multiple concurrent virtual desktops for design, engineering, or content creation users.
  - Cloud Gaming/Streaming: The GPU’s rendering power can also be allocated to remote or cloud-based gaming solutions.
- Edge Computing
  - Low-Latency AI: The L40 CNX can handle advanced analytics or real-time AI inference at edge servers, minimizing round-trip latency to data centers.
  - Robust HPC-lite: Deploy GPU-accelerated HPC tasks at branch offices or remote sites for local, real-time compute needs.
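For the multi-user virtualization scenario, capacity planning largely comes down to dividing the 24 GB frame buffer among desktops. The sketch below assumes equal, fixed-size memory slices per user. The slice sizes are hypothetical examples chosen for illustration, not a list of supported NVIDIA vGPU profiles, and the function name is made up.

```python
VRAM_GB = 24  # card memory from the listing

# Hypothetical per-user frame buffer sizes in GB (illustrative only).
EXAMPLE_SLICE_SIZES_GB = [2, 4, 8, 12]


def users_per_card(vram_gb: int, slice_gb: int) -> int:
    """Number of equal-size slices that fit in the card's memory."""
    if slice_gb <= 0:
        raise ValueError("slice size must be positive")
    return vram_gb // slice_gb


plan = {size: users_per_card(VRAM_GB, size) for size in EXAMPLE_SLICE_SIZES_GB}

for size, users in plan.items():
    print(f"{size} GB per user -> {users} concurrent desktops per card")
```

Memory is only one constraint. Compute, encoder capacity, and licensing also limit how many users a card can serve, so treat these counts as ceilings.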

Why Choose the NVIDIA L40 CNX Graphics Card 24 GB?

- Strong Performance-to-Cost Ratio: Delivers advanced HPC/AI performance, large VRAM, and cutting-edge ray tracing in a standard PCIe design, balancing cost-effectiveness with high-end throughput.

- 24GB Memory for Large Workloads: Accommodates memory-hungry tasks like big neural networks, multi-4K editing, or data-intensive HPC workloads without bottlenecks.

- Enterprise Reliability: Built for extended operation, with driver releases, security patches, and enterprise-level support to meet professional standards in HPC labs or data centers.

- Versatile for HPC, AI, Visualization: The Ada Lovelace architecture serves HPC, deep learning, real-time rendering, and virtualization workflows on a single card.

- Scalability & Integration: A standard PCI Express interface, multi-GPU bridging (if available), and comprehensive software ecosystems (CUDA, HPC libraries, Docker containers) streamline data center expansions or HPC cluster growth.

| Specification | Value |
| --- | --- |
| Product Name | NVIDIA L40 CNX |
| Manufacturer | NVIDIA |
| Memory | 24 GB |
| Base Clock | 1005 MHz |
| Boost Clock | 2475 MHz |
| TDP | 300 W |
| Suggested PSU | 700 W |
| Bus Interface | PCIe 4.0 x16 |
| Power Connectors | 1x 16-pin |
| Outputs | 1x HDMI 2.1, 3x DisplayPort 1.4a |
| Memory Bus | 384-bit |
| Bandwidth | 864.0 GB/s |
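The bandwidth figure in the table follows from the memory bus width and the 18 Gbps effective data rate quoted in the description. A minimal check, with a function name chosen for this example:

```python
MEMORY_BUS_BITS = 384        # memory bus width from the table
EFFECTIVE_RATE_GBPS = 18     # effective data rate per pin, from the description


def memory_bandwidth_gb_s(bus_bits: int, rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: pins x per-pin rate, 8 bits per byte."""
    return bus_bits * rate_gbps / 8


bandwidth = memory_bandwidth_gb_s(MEMORY_BUS_BITS, EFFECTIVE_RATE_GBPS)
print(f"Peak memory bandwidth: {bandwidth:.1f} GB/s")
```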