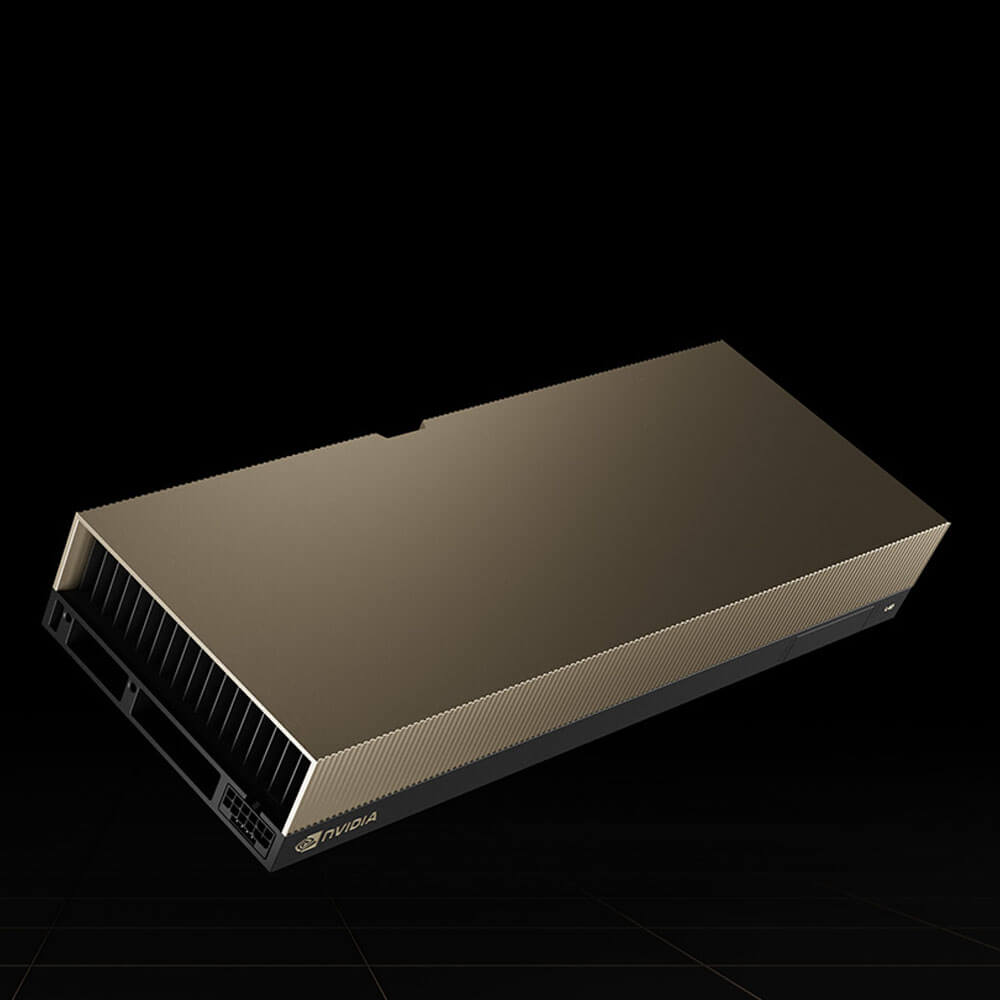

NVIDIA L40 Graphics Card 48 GB

The NVIDIA L40 Graphics Card with 48GB memory is a powerhouse for AI, graphics, and high-performance computing. Built on the NVIDIA Ada Lovelace architecture, it features advanced RT and Tensor Cores for real-time ray tracing and AI acceleration. Ideal for enterprise environments, the L40 delivers exceptional scalability, energy efficiency, and performance for demanding professional workflows and applications.

Min. Quantity – 5 Nos

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹1,450,000 ₹2,000,000

The NVIDIA L40 is a professional data center and workstation GPU designed to meet the requirements of large-scale AI, high-performance computing (HPC), and advanced visualization workflows. Equipped with 48GB of GDDR6 memory, powerful CUDA cores, advanced Ray Tracing (RT) Cores, and Tensor Cores, the L40 delivers significant throughput for computationally demanding tasks such as deep learning training/inference, scientific simulations, 3D design, and real-time rendering. Part of NVIDIA’s high-end professional GPU family, the L40 seamlessly integrates into data centers, HPC clusters, and enterprise workstations, balancing robust compute performance, energy efficiency, and enterprise-grade reliability.

Key Features:

- Ada Lovelace Architecture

- High CUDA Core Count

- Delivers excellent parallel processing power essential for AI training, HPC simulations, large-scale data analytics, and professional rendering workflows.

- Third-Generation RT Cores

- Real-time ray tracing hardware accelerates tasks in architecture, product design, VFX, and advanced simulation, achieving near-cinematic lighting and shadows.

- Fourth-Generation Tensor Cores

- Enhances AI and machine learning performance by supporting mixed-precision computations (FP8, FP16, BF16, INT8), facilitating faster neural network training and inference.

- 48GB GDDR6 Memory

- Large-Capacity VRAM

- 48GB of GDDR6 memory handles substantial datasets, complex 3D scenes, multi-4K video, or extensive HPC data streams without encountering memory bottlenecks.

- ECC Support

- Error-correcting code memory can preserve data integrity for critical computations, crucial in enterprise-level or scientific tasks.

- AI & HPC Optimization

- Mixed-Precision AI

- Tensor Cores accelerate AI frameworks, delivering higher throughput for training large language models (LLMs), image recognition, or real-time AI inference tasks.

- NVIDIA AI Software Stack

- Compatible with popular deep learning libraries (TensorFlow, PyTorch), HPC frameworks, and containerized solutions (NVIDIA NGC) for smooth deployment and scaling of ML workflows.

- Professional Visualization

- Real-Time Ray Tracing

- Boosts rendering pipelines in media production, architectural design, and engineering workflows, minimizing iteration times and enhancing photorealism.

- VR/AR Readiness

- Adequate GPU horsepower and memory for immersive VR/AR applications, beneficial for interactive design reviews, training, or location-based experiences.

- High-Performance Computing

- Scalable HPC

- The L40 does not provide NVLink; multi-GPU configurations communicate over PCIe Gen 4, so plan cluster scaling around PCIe bandwidth and check the official specs for the target server platform.

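- FP32 and FP64 Throughput

- Delivers strong single-precision (FP32) compute performance for simulation and rendering workloads. Double-precision (FP64) throughput is a small fraction of the FP32 rate, so codes that depend heavily on FP64, such as some fluid dynamics or computational chemistry packages, should be benchmarked before deployment.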

- Data Center Integration & Reliability

- PCIe Gen 4.0

- Ensures fast host-to-GPU bandwidth, reducing data transfer bottlenecks in HPC or AI training tasks.

- Enterprise-Grade Lifecycle

- Enterprise drivers, validated software stacks, and security patches minimize downtime and ensure consistent performance for mission-critical workloads.

- Efficient Thermal & Power Design

- Optimized Cooling

- Typically deployed in passively cooled or minimal fan designs for data center servers or HPC racks, enabling stable 24/7 operation.

- Performance-per-Watt Gains

- Delivers high computational throughput within a 300 W TDP, helping to lower overall operating costs in large-scale installations.
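To make the memory and mixed-precision points above more concrete, here is a rough sizing sketch in Python. It estimates only the space taken by model weights at different precisions and compares it with the card's 48 GB. The function names, the bytes-per-parameter table, and the example parameter counts are illustrative assumptions; real workloads also need room for activations, optimizer state, and caches, so treat the result as a lower bound.

```python
# Weight-only memory estimate (assumption: 1 GB = 10**9 bytes).
# Ignores activations, optimizer state, and inference caches.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "int8": 1}
VRAM_GB = 48  # L40 memory capacity as listed on this page


def weights_gb(num_params: float, precision: str) -> float:
    """Approximate size of the model weights in GB for a given precision."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits_in_vram(num_params: float, precision: str, vram_gb: float = VRAM_GB) -> bool:
    """True if the weights alone fit in the given amount of VRAM."""
    return weights_gb(num_params, precision) <= vram_gb


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only for illustration.
    for params in (7e9, 13e9, 30e9):
        for precision in ("fp32", "fp16", "int8"):
            size = weights_gb(params, precision)
            verdict = "fits" if fits_in_vram(params, precision) else "does not fit"
            print(f"{params / 1e9:.0f}B params @ {precision}: {size:.0f} GB ({verdict})")
```

Under these assumptions, lowering precision from FP32 to FP16 halves the weight footprint, which is often the difference between a model fitting on one card or not.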

Applications:

- AI & Machine Learning

- Model Training: Accelerates deep learning tasks for massive neural networks, including computer vision, NLP, and recommendation systems.

- Inference: Delivers real-time predictions for high-traffic or mission-critical AI services, supporting advanced batch and streaming inference.

- High-Performance Computing (HPC)

- Scientific Simulations: HPC codes in fields like climate modeling, quantum chemistry, and astrophysics gain acceleration through CUDA core parallelism and high VRAM.

- Research Labs: Government, academia, or private R&D facilities expedite scientific discovery by handling multi-physics simulations, large parametric sweeps, or iterative HPC workloads.

- Professional Visualization & Rendering

- Ray-Traced Rendering: Achieves near-real-time path tracing for product design, architectural walk-throughs, or cinematic-quality VFX, significantly reducing iteration times.

- Media & Entertainment: 4K/8K editing, GPU-accelerated color grading, and effect compositing are streamlined with 48GB VRAM.

- Data Analytics & Big Data

- GPU-Accelerated Queries: Tools like RAPIDS harness GPU parallelism to accelerate SQL and data transformations, enabling real-time analytics at scale.

- ETL & Real-Time Processing: GPU-based data pipelines can handle high-volume streaming data or rapidly update dashboards and models.

- Virtualization & Multi-User Scenarios

- vGPU Solutions: Partition the L40’s resources among multiple virtual desktops or containers, supporting professionals who need GPU-accelerated compute or 3D visuals.

- Remote Work Environments: Delivers high-fidelity user experiences for designers, engineers, or data scientists connecting from remote endpoints.
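As a back-of-the-envelope illustration of the vGPU point, the sketch below counts how many users one card could host if its 48 GB frame buffer were split into equal-sized slices, and how many cards a given user count would need. The profile sizes in the example are hypothetical and are not a list of supported NVIDIA vGPU profiles; the function names are likewise illustrative.

```python
VRAM_GB = 48  # L40 memory capacity as listed on this page


def users_per_card(profile_gb: int, vram_gb: int = VRAM_GB) -> int:
    """Number of equal-sized frame-buffer slices that fit on one card."""
    if profile_gb <= 0:
        raise ValueError("profile size must be positive")
    return vram_gb // profile_gb


def cards_needed(users: int, profile_gb: int, vram_gb: int = VRAM_GB) -> int:
    """Cards required to host `users` sessions at the given slice size."""
    per_card = users_per_card(profile_gb, vram_gb)
    if per_card == 0:
        raise ValueError("profile is larger than the card's memory")
    return -(-users // per_card)  # ceiling division


if __name__ == "__main__":
    # Hypothetical slice sizes in GB, for illustration only.
    for profile in (4, 8, 12):
        print(f"{profile} GB slices: {users_per_card(profile)} users per card, "
              f"{cards_needed(20, profile)} cards for 20 users")
```

This only accounts for memory; actual user density also depends on compute load, licensing, and the profiles the vGPU software supports.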

Why Choose the NVIDIA L40 Graphics Card 48 GB?

- Exceptional Performance & Memory

- 48GB of GDDR6 memory and advanced GPU cores handle large HPC datasets, AI models, and 3D rendering tasks that would exceed the memory of smaller cards.

- Adaptable for AI, HPC, and 3D Workflows

- Unifies HPC compute, deep learning acceleration, and real-time ray tracing in a single GPU, enabling multi-purpose data center or advanced workstation usage.

- Scalable Data Center Integration

- Standard PCIe form factor lets HPC clusters or enterprise servers scale horizontally by adding cards. The L40 does not use NVLink bridges, so multi-GPU traffic travels over PCIe.

- Enterprise Reliability & Ecosystem

- Certified drivers, ECC memory support, and integration with NVIDIA’s HPC/AI software stack ensure stable, round-the-clock operations and simpler software maintenance.

- Future-Proof Architecture

- Harnesses the Ada Lovelace architecture's enhancements, ensuring readiness for next-generation HPC, AI frameworks, and real-time graphical advances.

| Specification | Value |
| --- | --- |
| Product Name | NVIDIA L40 |
| Manufacturer | NVIDIA |
| Memory | 48 GB GDDR6 |
| Memory Bus | 384-bit |
| Memory Bandwidth | 864.0 GB/s |
| Base Clock | 735 MHz |
| Boost Clock | 2490 MHz |
| TDP | 300 W |
| Suggested PSU | 700 W |
| Outputs | 4x DisplayPort 1.4a |
| Power Connectors | 1x 16-pin |
| Maximum Resolution | 7680 x 4320 (8K) |
| PCIe Interface | PCIe 4.0 x16 |
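
The bandwidth figures in the table can be cross-checked with simple arithmetic. The sketch below derives the per-pin memory data rate implied by the 384-bit bus and the 864 GB/s figure, and estimates PCIe 4.0 x16 throughput from the standard 16 GT/s per lane with 128b/130b encoding. The function names are illustrative, and the PCIe result is a theoretical per-direction peak rather than a measured value.

```python
BUS_WIDTH_BITS = 384       # memory bus width from the spec table
MEM_BANDWIDTH_GBS = 864.0  # memory bandwidth from the spec table


def implied_pin_rate_gbps(bandwidth_gbs: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) implied by total bandwidth and bus width."""
    return bandwidth_gbs * 8 / bus_width_bits


def pcie_bandwidth_gbs(gt_per_s: float = 16.0, lanes: int = 16,
                       encoding: float = 128 / 130) -> float:
    """Theoretical one-direction PCIe bandwidth in GB/s.

    Defaults describe PCIe 4.0 x16: 16 GT/s per lane, 128b/130b encoding.
    """
    return gt_per_s * lanes * encoding / 8


if __name__ == "__main__":
    pin_rate = implied_pin_rate_gbps(MEM_BANDWIDTH_GBS, BUS_WIDTH_BITS)
    pcie = pcie_bandwidth_gbs()
    print(f"Implied memory data rate: {pin_rate:.1f} Gbit/s per pin")
    print(f"PCIe 4.0 x16 peak: {pcie:.1f} GB/s per direction")
    print(f"On-card memory is roughly {MEM_BANDWIDTH_GBS / pcie:.0f}x faster than the host link")
```

The gap between the two numbers is why keeping working data resident in the 48 GB of on-card memory matters more than raw host-to-GPU transfer speed for most workloads.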