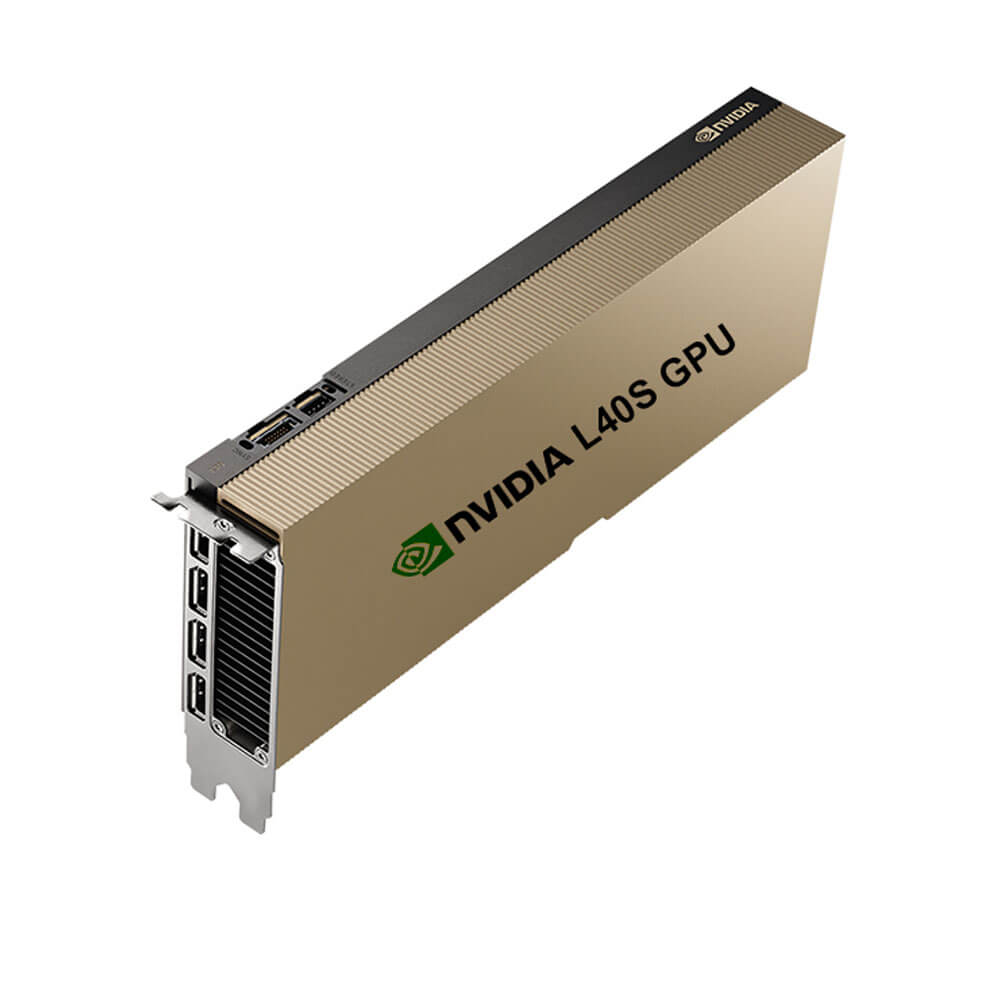

NVIDIA L40S Graphics Card 48GB

The NVIDIA L40S Graphics Card with 48GB memory is a high-performance solution for AI, graphics rendering, and data-intensive workloads. Built on the NVIDIA Ada Lovelace architecture, it features advanced RT and Tensor Cores for real-time ray tracing and AI acceleration. Ideal for enterprise and professional applications, the L40S delivers exceptional scalability, efficiency, and reliability for demanding workflows.

Minimum order quantity: 5 units

Note: Below are the approximate and promotional prices. For the latest pricing and further details, please WhatsApp or call us at +91-8903657999.

₹770,000 ₹2,000,000

The L40S is a professional graphics card by NVIDIA, launched on October 13th, 2022. Built on the 5 nm process and based on the AD102 graphics processor, in its AD102-???-A1 variant, the card supports DirectX 12 Ultimate. The AD102 is a large chip with a die area of 609 mm² and 76.3 billion transistors. Unlike the fully unlocked TITAN Ada, which uses the same GPU with all 18432 shaders enabled, the L40S has some shading units disabled to reach its target shader count. It features 18176 shading units, 568 texture mapping units, and 192 ROPs. Also included are 568 tensor cores, which speed up machine learning applications, and 142 ray tracing acceleration cores. NVIDIA has paired the L40S with 48 GB of GDDR6 memory, connected over a 384-bit memory interface. The GPU runs at a base frequency of 1110 MHz and boosts up to 2520 MHz; the memory runs at 2250 MHz (18 Gbps effective).
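The headline throughput figures follow from the numbers in the paragraph above. The sketch below works through the arithmetic in Python. It assumes the usual convention of one fused multiply-add (2 FLOPs) per shading unit per clock for FP32, and the function names are illustrative only. The results are theoretical peaks, not measured performance.

```python
# Figures quoted in the paragraph above.
BUS_WIDTH_BITS = 384        # memory interface width
RATE_GBPS_PER_PIN = 18      # effective GDDR6 data rate per pin
SHADING_UNITS = 18176
BOOST_CLOCK_MHZ = 2520


def memory_bandwidth_gb_s(bus_width_bits, rate_gbps_per_pin):
    """Peak memory bandwidth in GB/s: pins x Gbit/s per pin / 8 bits per byte."""
    return bus_width_bits * rate_gbps_per_pin / 8


def peak_fp32_tflops(shading_units, boost_mhz, flops_per_clock=2):
    """Theoretical FP32 peak in TFLOPS.

    Assumption: each shading unit retires one fused multiply-add
    (counted as 2 FLOPs) per clock at the boost frequency.
    """
    return shading_units * flops_per_clock * boost_mhz * 1e6 / 1e12


if __name__ == "__main__":
    bw = memory_bandwidth_gb_s(BUS_WIDTH_BITS, RATE_GBPS_PER_PIN)
    fp32 = peak_fp32_tflops(SHADING_UNITS, BOOST_CLOCK_MHZ)
    print(f"Memory bandwidth: {bw:.0f} GB/s")   # 864 GB/s
    print(f"Peak FP32: {fp32:.1f} TFLOPS")      # 91.6 TFLOPS
```

Sustained rates on real workloads are lower, since clocks, memory access patterns, and power limits all vary under load.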

The NVIDIA L40S is a dual-slot card that draws power from a single 16-pin power connector, with power draw rated at 300 W maximum. Display outputs are 1x HDMI 2.1 and 3x DisplayPort 1.4a. The card connects to the rest of the system over a PCI-Express 4.0 x16 interface and measures 267 mm in length and 111 mm in width.

Key Features:

- Ada Lovelace Architecture
  - Advanced CUDA Cores: Ada-generation CUDA cores speed up parallel computation in HPC, AI, and real-time rendering.
  - Fourth-Generation Tensor Cores: Accelerate both AI training and inference with mixed-precision modes (FP16, BF16, INT8, FP8), shortening deep learning workloads.
  - Third-Generation RT Cores: Raise real-time ray tracing performance, enabling near-cinematic lighting, reflections, and shadows in professional visualization tasks.

- 48GB High-Capacity Memory
  - GDDR6 (ECC): 48GB of memory leaves room for large datasets, extensive 3D assets, multi-4K video streams, or substantial AI models, reducing memory bottlenecks in data-driven tasks.
  - Wide Memory Interface & Bandwidth: The 384-bit interface at 18 Gbps effective delivers 864 GB/s of memory bandwidth between the GPU cores and VRAM.

- AI & HPC Acceleration
  - Mixed-Precision Support: Tensor Cores handle FP16, BF16, TF32, INT8, and FP8 computations, delivering higher throughput for AI training and inference with minimal loss in accuracy.
  - NVIDIA AI Software Ecosystem: Works with CUDA-X AI libraries, HPC frameworks, and containerized solutions (NVIDIA NGC), simplifying development and deployment for HPC/AI clusters.

- Professional Visualization & Rendering
  - Real-Time Ray Tracing: Accelerates path tracing for photorealistic rendering, architectural walkthroughs, and advanced design simulations, reducing iteration cycles in media, entertainment, and product design.
  - Advanced VR/AR Workflows: Supports immersive experiences for engineering, medical training, and location-based entertainment with low latency and high-fidelity visuals.

- Data Center-Ready Reliability
  - 24/7 Mission-Critical Operation: Engineered to run continuously under heavy load, as required in HPC labs, enterprise data centers, and large-scale production AI services.
  - Enterprise-Class Drivers & Management: Stable, long-lifecycle driver branches, regular security patches, and enterprise support keep downtime low in mission-critical applications.

- Scalable Deployment
  - PCIe 4.0 Interface: The x16 link provides high-bandwidth communication with the host CPU, reducing data transfer bottlenecks in HPC and AI inference/training tasks.
  - Multi-GPU Scaling: The L40S has no NVLink connector, so multi-GPU configurations communicate over PCIe 4.0. Check the official L40S product documentation for supported topologies.

- Optimized Power & Thermal
  - Efficient Architecture: The L40S operates within a moderate TDP for its performance class, balancing power draw and heat dissipation in data centers and rack-based HPC nodes.
  - Cooling Solutions: Typically passively cooled and reliant on chassis airflow in server deployments, which keeps operation stable under sustained high load.
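To put the PCIe 4.0 x16 host link in perspective against on-card memory bandwidth, the sketch below computes the theoretical per-direction PCIe rate. It uses the PCIe 4.0 parameters of 16 GT/s per lane with 128b/130b line encoding. Packet and protocol overhead are ignored, so real transfers are slower. The 864 GB/s VRAM figure is the one implied by the 384-bit, 18 Gbps memory specification on this page; the function name is illustrative.

```python
def pcie_bandwidth_gb_s(gt_per_s=16.0, lanes=16, encoding=128 / 130):
    """Theoretical one-direction PCIe bandwidth in GB/s.

    Defaults describe PCIe 4.0 x16: 16 GT/s per lane, 128b/130b encoding.
    Protocol overhead (headers, flow control) is not modelled.
    """
    return gt_per_s * lanes * encoding / 8


if __name__ == "__main__":
    VRAM_BANDWIDTH_GB_S = 864   # 384-bit bus x 18 Gbps / 8
    VRAM_CAPACITY_GB = 48

    link = pcie_bandwidth_gb_s()
    print(f"PCIe 4.0 x16: {link:.1f} GB/s per direction")             # 31.5
    print(f"VRAM is about {VRAM_BANDWIDTH_GB_S / link:.1f}x faster")  # 27.4
    print(f"Filling {VRAM_CAPACITY_GB} GB over PCIe takes at least "
          f"{VRAM_CAPACITY_GB / link:.2f} s")
```

The gap is one reason to keep working sets resident in VRAM and to minimise host-to-device copies in inference and training pipelines.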

Applications:

- Artificial Intelligence & Deep Learning
  - Massive Model Training: Large neural networks for natural language processing, computer vision, or speech recognition train significantly faster with Tensor Core acceleration.
  - Inference at Scale: Serve complex AI models in real time, handling thousands of concurrent requests with low latency, as needed for recommendation systems or real-time analytics.

- High-Performance Computing (HPC)
  - Scientific Simulations: Workloads in areas such as climate research, molecular dynamics, and astrophysics benefit from the parallel CUDA cores and high single-precision throughput.
  - Research & Development: Universities, government, and private labs can accelerate code testing, shorten iteration times, and model more complex scenarios.

- Professional Visualization & Media Production
  - Ray-Traced Rendering: Achieve near-real-time previews of cinematic scenes, product designs, or architectural models with physically accurate lighting.
  - Multi-4K Video Editing: Large memory and compute capacity keep editing, color grading, and compositing smooth at high resolutions in advanced content creation pipelines.

- Enterprise Virtualization
  - Multi-User GPU: GPU partitioning (vGPU) can serve multiple virtual desktops or container-based workloads, each with hardware-accelerated rendering or AI processing.
  - Cloud Services: HPC or AI frameworks in containerized setups can allocate L40S GPU resources on demand, scaling up or down as needed.

- Edge & Hybrid Cloud Deployments
  - Local AI Inference: Gives organizations on-premises GPU capacity, reducing the latency and cost of round trips to a remote data center or cloud.
  - Hybrid HPC: Bridges on-prem HPC clusters with remote or cloud-based HPC, distributing computational tasks across flexible GPU resources.

Why Choose the NVIDIA L40S Graphics Card 48GB?

- Massive 48GB Memory: Provides enough VRAM to handle complex AI models, multi-gigabyte HPC datasets, or advanced 3D scenes without running out of memory.

- Ada Architecture Excellence: Combines Ada-generation CUDA, Tensor, and RT cores for strong performance in HPC, AI, and professional visualization.

- Enterprise Stability & Security: Backed by NVIDIA's enterprise drivers, longer lifecycle updates, and tested reliability that minimizes downtime in mission-critical or large-scale operations.

- Scalable and Flexible: The standard PCIe form factor allows straightforward expansion within HPC data centers, compute clusters, or multi-GPU workstations; multi-GPU setups communicate over PCIe rather than NVLink.

- Future-Proofing: Ada-based improvements in AI, ray tracing, and HPC performance keep the L40S relevant for evolving workloads, new rendering pipelines, and next-generation scientific research.
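As a rough way to judge whether a model's weights fit in 48 GB at a given precision, the sketch below multiplies parameter count by bytes per parameter. The 20% headroom reserved for activations, caches, and framework overhead is an illustrative assumption rather than an NVIDIA figure, and the model sizes are hypothetical examples.

```python
# Storage size per parameter for common precisions (bytes).
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "BF16": 2, "FP8": 1, "INT8": 1}

VRAM_GB = 48  # L40S memory capacity, counted here as 10^9 bytes per GB


def weights_gb(num_params, precision):
    """Memory needed for the model weights alone, in GB."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def fits(num_params, precision, vram_gb=VRAM_GB, headroom=0.2):
    """True if the weights fit while leaving `headroom` of VRAM free.

    The 20% default headroom is an assumed allowance for activations,
    caches, and runtime overhead; tune it for the actual workload.
    """
    return weights_gb(num_params, precision) <= vram_gb * (1 - headroom)


if __name__ == "__main__":
    # Hypothetical model sizes, chosen only to illustrate the arithmetic.
    for params, precision in [(13e9, "FP16"), (30e9, "FP8"), (70e9, "INT8")]:
        size = weights_gb(params, precision)
        verdict = "fits" if fits(params, precision) else "does not fit"
        print(f"{params / 1e9:.0f}B params @ {precision}: "
              f"{size:.0f} GB of weights, {verdict} on one card")
```

Training needs considerably more memory than this weights-only estimate because gradients and optimizer state are stored as well.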

| Specification | Value |
| --- | --- |
| Product Name | NVIDIA L40S |
| Manufacturer | NVIDIA |
| Memory | 48 GB GDDR6 |
| Base Clock | 1110 MHz |
| Boost Clock | 2520 MHz |
| TDP | 300 W |
| Suggested PSU | 700 W |
| Memory Bus Width | 384-bit |
| Memory Bandwidth | 864 GB/s |
| Power Connectors | 1x 16-pin |
| Outputs | 1x HDMI 2.1, 3x DisplayPort 1.4a |
| Bus Interface | PCIe 4.0 x16 |