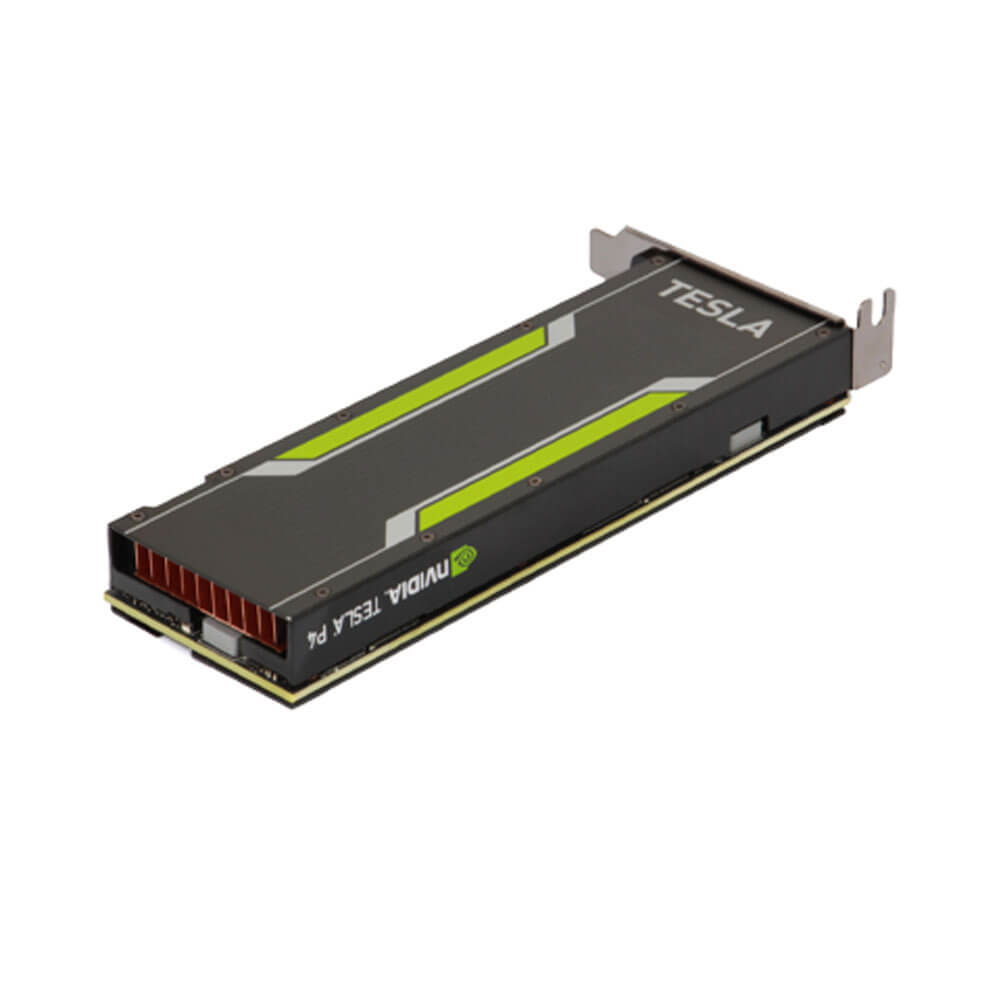

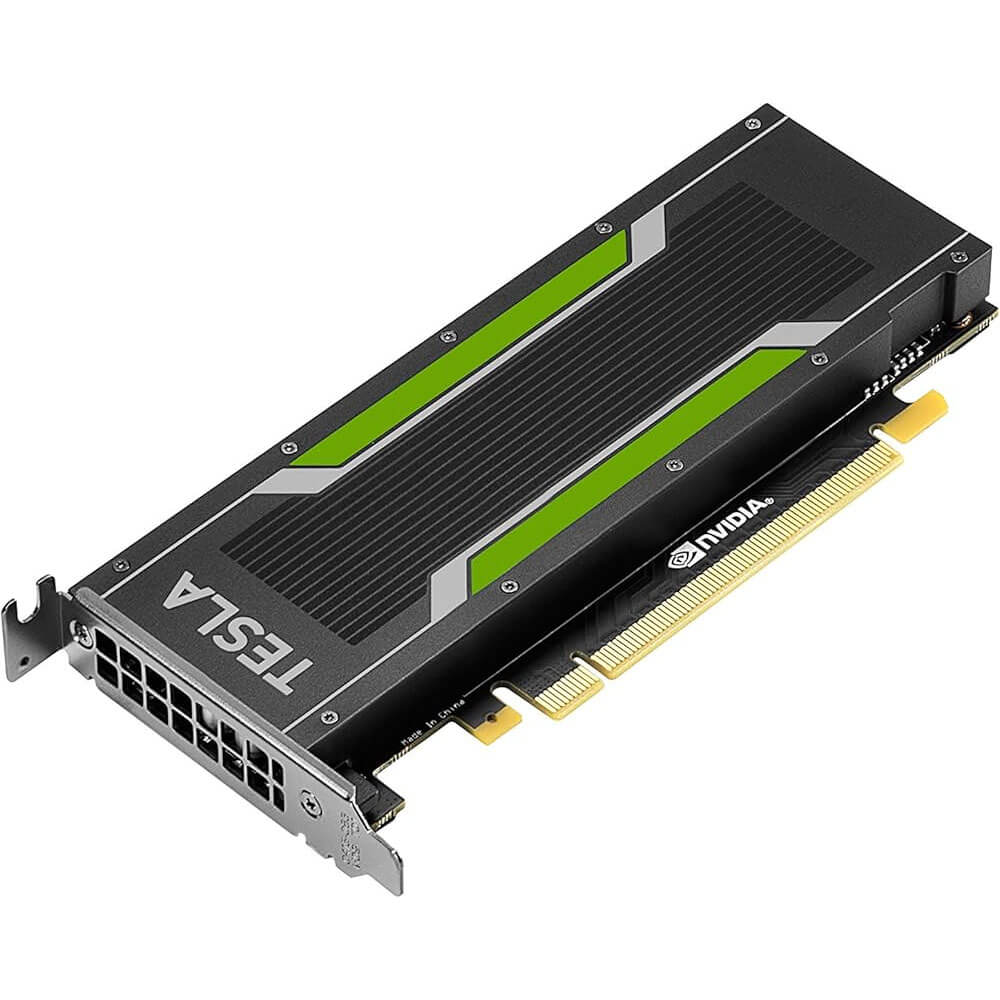

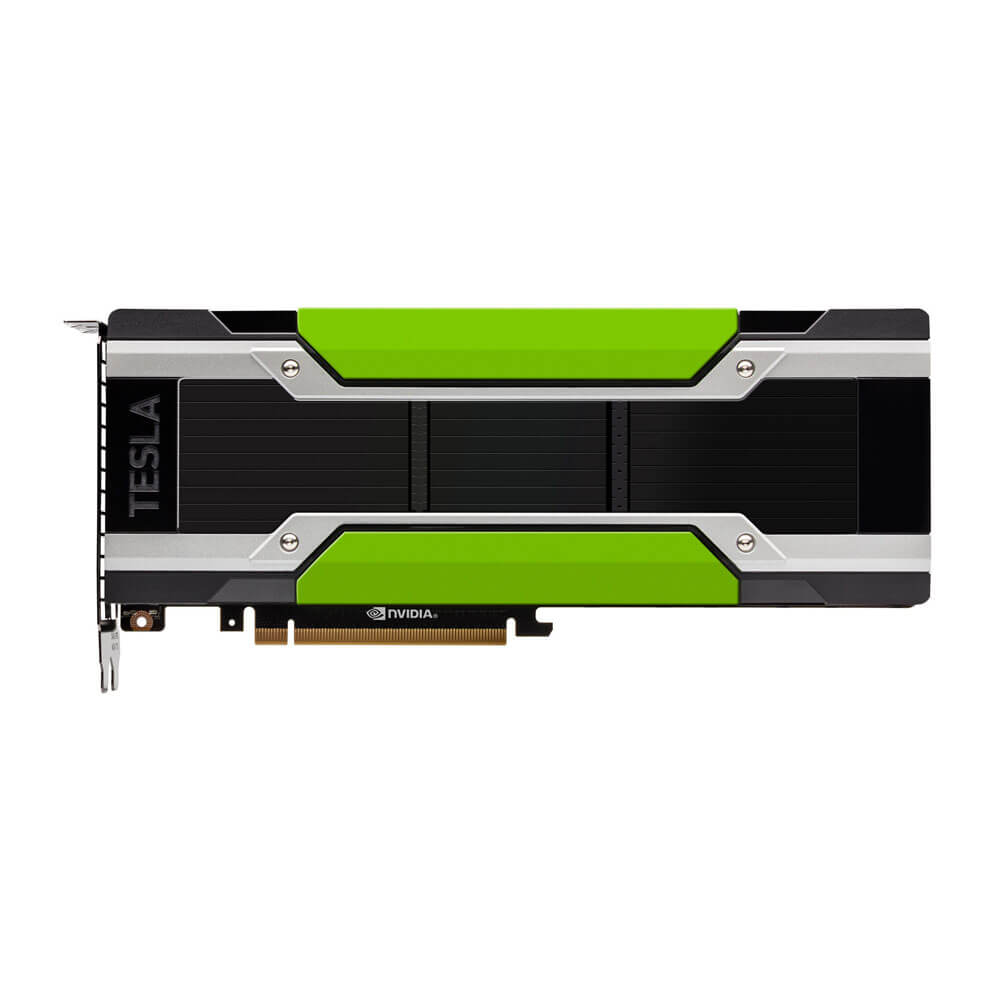

NVIDIA Tesla P4 Graphics Card 8GB

The NVIDIA Tesla P4 Graphics Card with 8GB GDDR5 memory is designed for AI inference and deep learning in data centers. Powered by the NVIDIA Pascal architecture, it delivers exceptional performance with energy efficiency in a compact form factor. Ideal for video processing, AI workloads, and virtualized environments, the Tesla P4 ensures reliable, scalable, and efficient operation for demanding applications.

Minimum order quantity: 5 units

Note: The price below is approximate and promotional. For the latest pricing and further details, please contact us on WhatsApp or call +91-8903657999.

₹25,999 (regular price: ₹34,000)

The NVIDIA Tesla P4 Graphics Card 8GB is a powerful GPU designed to accelerate inferencing tasks in AI and deep learning applications. Built on the Pascal architecture, it provides exceptional performance while maintaining energy efficiency. With its compact single-slot form factor and low power consumption, the Tesla P4 is ideal for data centers, AI inferencing at the edge, and high-density server environments.

Designed for professionals and enterprises working on AI workloads, video transcoding, and high-performance computing (HPC), the Tesla P4 delivers real-time inferencing, reduced latency, and efficient scaling for demanding applications.

Key Features:

- Pascal Architecture
  - CUDA Cores: Equipped with 2,560 CUDA cores, the Tesla P4 delivers powerful parallel processing for AI inferencing and HPC workloads.
  - Optimized for Inferencing: The Pascal architecture delivers strong deep learning inferencing performance, particularly with reduced-precision (INT8) arithmetic.

- 8GB GDDR5 Memory
  - Sufficient Capacity: The 8GB of GDDR5 memory ensures smooth processing for inferencing workloads and high-resolution data.
  - High Bandwidth: Provides a memory bandwidth of 192 GB/s, enabling rapid data transfer for real-time applications.

- Energy Efficiency
  - Low Power Consumption: Can operate at 50W (configurable up to 75W), making it ideal for high-density deployments in energy-constrained environments.
  - Efficient Performance: Offers an excellent performance-per-watt ratio for cost-effective AI deployment.

- Deep Learning Inferencing
  - INT8 Optimization: Optimized for reduced-precision (INT8) inferencing, with a peak INT8 performance of 22 TOPS for AI models.
  - Low Latency: Delivers real-time responses in applications such as natural language processing, image recognition, and recommendation systems.

- Compact Design
  - Single-Slot Form Factor: Fits into standard servers and edge devices with space constraints.
  - Passive Cooling: Designed for data center environments, leveraging server airflow for efficient heat dissipation.

- Multi-Stream Video Transcoding
  - Video Encoding and Decoding: Includes hardware-accelerated H.264 and HEVC encoding and decoding, making it ideal for video streaming and transcoding.
  - High-Density Streaming: Supports up to 38 concurrent streams of 720p video at 30 FPS.
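The bandwidth and efficiency figures above can be sanity-checked with simple arithmetic. The sketch below is a back-of-the-envelope calculation, not a benchmark: it assumes a 256-bit memory interface and a 6 Gbps effective GDDR5 data rate (neither value is stated in this listing), and it divides the quoted 22 TOPS peak INT8 figure by board power, so the resulting TOPS/W values are peak-based ratios rather than measured efficiency. The function names are illustrative only.

```python
# Back-of-the-envelope checks on the Tesla P4 headline numbers.
# ASSUMPTION: 256-bit memory bus and 6 Gbps effective GDDR5 data rate per pin
# (these two inputs are not stated in the listing itself).
BUS_WIDTH_BITS = 256
DATA_RATE_GBPS = 6.0

PEAK_INT8_TOPS = 22.0        # peak INT8 figure quoted in the listing
POWER_LEVELS_W = (50, 75)    # 50 W in the feature list, 75 W in the spec table


def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth = number of data pins x per-pin rate, converted bits -> bytes."""
    return bus_width_bits * data_rate_gbps / 8


def tops_per_watt(tops: float, watts: float) -> float:
    """Peak INT8 throughput divided by board power (a peak-based ratio, not measured)."""
    return tops / watts


if __name__ == "__main__":
    bw = memory_bandwidth_gb_s(BUS_WIDTH_BITS, DATA_RATE_GBPS)
    print(f"Estimated memory bandwidth: {bw:.0f} GB/s")
    for watts in POWER_LEVELS_W:
        print(f"Peak INT8 efficiency at {watts} W: {tops_per_watt(PEAK_INT8_TOPS, watts):.2f} TOPS/W")
```

Under these assumptions the bandwidth works out to the listed 192 GB/s, and the lower 50 W setting gives the better peak-based TOPS/W ratio.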

Applications:

- Artificial Intelligence and Deep Learning
  - Inferencing Workloads: Speeds up inferencing for AI models such as image classification, speech recognition, and recommendation engines.
  - Natural Language Processing (NLP): Optimized for real-time applications like chatbots, sentiment analysis, and translation.
  - Edge AI: Ideal for deploying AI models at the edge, supporting real-time decision-making in IoT and smart devices.

- Video Transcoding and Streaming
  - Content Delivery: Enables high-quality, low-latency video streaming for platforms such as OTT and live-streaming services.
  - High-Density Transcoding: Supports multiple concurrent video streams, reducing infrastructure costs for video service providers.

- High-Performance Computing (HPC)
  - Scientific Research: Accelerates computations in physics, genomics, and simulations.
  - Data Analytics: Handles complex datasets for predictive analytics and real-time data processing.

- Data Centers
  - AI Workloads: Powers inferencing tasks in data centers with high efficiency and scalability.
  - Multi-Tenant Environments: Allows for efficient resource allocation in shared environments.
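INT8 inferencing, behind both the inferencing workloads above and the 22 TOPS figure, generally means running a trained model's arithmetic on 8-bit integers plus floating-point scale factors. The plain-Python sketch below illustrates one common scheme, symmetric per-tensor quantization, applied to a single dot product. It is a simplified illustration under that assumption, not the calibration procedure used by NVIDIA's software, and the function names are hypothetical.

```python
def quantize_symmetric(values, num_bits=8):
    """Map floats to signed integers in [-qmax, qmax] using one shared scale factor."""
    qmax = 2 ** (num_bits - 1) - 1          # 127 for INT8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def int8_dot(a, b):
    """Quantize both vectors, multiply-accumulate in integers, then rescale to float."""
    qa, sa = quantize_symmetric(a)
    qb, sb = quantize_symmetric(b)
    acc = sum(x * y for x, y in zip(qa, qb))  # integer accumulation
    return acc * sa * sb


if __name__ == "__main__":
    weights = [0.12, -0.50, 0.33, 0.98]
    activations = [1.5, 0.2, -0.7, 0.4]
    exact = sum(w * x for w, x in zip(weights, activations))
    approx = int8_dot(weights, activations)
    print(f"FP32 dot product: {exact:.4f}   INT8 approximation: {approx:.4f}")
```

The integer accumulation stands in for the GPU's integer multiply-accumulate hardware; the small gap between the two printed results is the quantization error that careful calibration tries to keep low.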

Why Choose the NVIDIA Tesla P4 Graphics Card 8GB?

- Optimized for AI Inferencing: Delivers outstanding performance for AI workloads, including natural language processing, image recognition, and recommendation systems.

- Energy-Efficient Design: Can operate at just 50W (configurable up to 75W), making it well suited to energy-constrained environments such as data centers and edge deployments.

- Compact and Versatile: The single-slot form factor fits into a variety of server configurations, enabling high-density deployments.

- Real-Time Video Transcoding: Hardware-accelerated video processing ensures smooth and efficient streaming for multiple concurrent users.

- Scalability: Supports large-scale deployments with high efficiency, making it suitable for enterprises and cloud providers.

- Future-Ready for AI Applications: Built for current and next-generation AI applications, ensuring long-term usability and relevance.
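For sizing a video deployment, figures from this listing (up to 38 streams of 720p at 30 FPS per card, 50 W board power, a minimum order of 5 cards, and the approximate ₹25,999 unit price) can be combined into a rough capacity estimate. The sketch below assumes stream capacity scales linearly with card count, which a real server may not achieve because of CPU, PCIe, or network limits; the `plan` helper is hypothetical, and the price input is only the promotional estimate quoted above.

```python
import math

STREAMS_PER_CARD = 38      # 720p30 streams per card, as quoted in the listing
UNIT_PRICE_INR = 25_999    # approximate promotional price from the listing
MIN_ORDER_QTY = 5          # minimum order quantity from the listing


def plan(target_streams: int, board_power_w: int = 50) -> dict:
    """Estimate cards, total board power, and cost for a target stream count.

    ASSUMPTION: throughput scales linearly with the number of cards.
    """
    cards_needed = math.ceil(target_streams / STREAMS_PER_CARD)
    cards = max(MIN_ORDER_QTY, cards_needed)  # never below the minimum order
    return {
        "cards": cards,
        "power_w": cards * board_power_w,
        "cost_inr": cards * UNIT_PRICE_INR,
    }


if __name__ == "__main__":
    for target in (100, 500):
        print(target, "streams ->", plan(target))
```

For example, 100 streams need only 3 cards by throughput but still hit the 5-card minimum order; pass `board_power_w=75` to size for the higher power setting.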

Specifications:

| Specification | Details |
| --- | --- |
| Product Name | NVIDIA Tesla P4 |
| Manufacturer | NVIDIA |
| Memory | 8 GB GDDR5 |
| Power Connectors | None (powered through the PCIe slot) |
| Memory Bandwidth | 192 GB/s |
| Base Clock | 810 MHz |
| Boost Clock | 1063 MHz |
| TDP | 50 W / 75 W (configurable) |
| Dimensions | 6.6″ x 2.4″ (167.6mm x 61.0mm) |
| Display Connectors | None (Headless/Compute use) |
| Interface | PCI Express 3.0 x16 |
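After installation, one way to confirm that the system sees the card described in the specifications is to query it with `nvidia-smi`, which ships with the NVIDIA driver. The sketch below assumes the driver is installed; `name`, `memory.total`, `power.limit`, and `pci.bus_id` are standard `--query-gpu` fields (run `nvidia-smi --help-query-gpu` for the full list), and with `nounits` the memory total is reported in MiB and the power limit in watts. The helper names are hypothetical.

```python
import subprocess

# Standard `nvidia-smi --query-gpu` field names, in the order they are requested.
FIELDS = ["name", "memory.total", "power.limit", "pci.bus_id"]


def parse_line(line: str) -> dict:
    """Turn one CSV row (noheader, nounits) into a field -> value dict."""
    values = [v.strip() for v in line.split(",")]
    return dict(zip(FIELDS, values))


def query_gpus() -> list:
    """Run nvidia-smi and return one dict per GPU it reports."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={','.join(FIELDS)}", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return [parse_line(row) for row in result.stdout.splitlines() if row.strip()]


if __name__ == "__main__":
    try:
        for gpu in query_gpus():
            print(gpu)
    except (FileNotFoundError, subprocess.CalledProcessError) as err:
        # No driver installed, or nvidia-smi failed to talk to the GPU.
        print(f"nvidia-smi unavailable: {err}")
```

Checking that the `name` field contains "Tesla P4" is a quick first test after installing a new batch of cards.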